Edits:

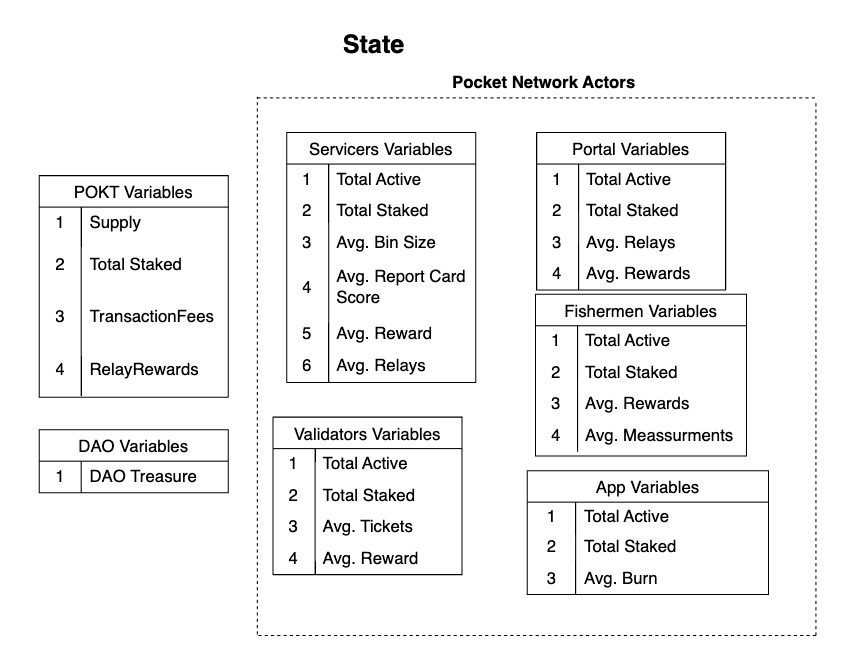

2023-03-20: After some feedback, it was decided to shift the focus of the model from V0 to V1. The updated model can be found in the 11th post of this thread.

The idea of this thread is to start a discussion on how the initial model of $POKT economics should be built. This model is expected to follow the Ethereum economic model and to be built with the radCAD library. For anyone who wants to jump into the discussion, I advise taking a look at the cadCAD masterclass to understand the main parts of the model.

The structures presented here are only a proposal and subject to change through discussion. This initial proposal is based on the calls led by @profish, with the participation of @msa6867 and @Caesar. For those already familiar with the calls (which were recorded, but I'm not sure whether they were distributed), you will notice that I made some changes that we can keep discussing here.

If the community finds the project appealing, it will be open-source and provide an easy-to-use UI for non-technical people.

The Simple Model

I have outlined some parts of the model, but others are still missing, such as the metrics.

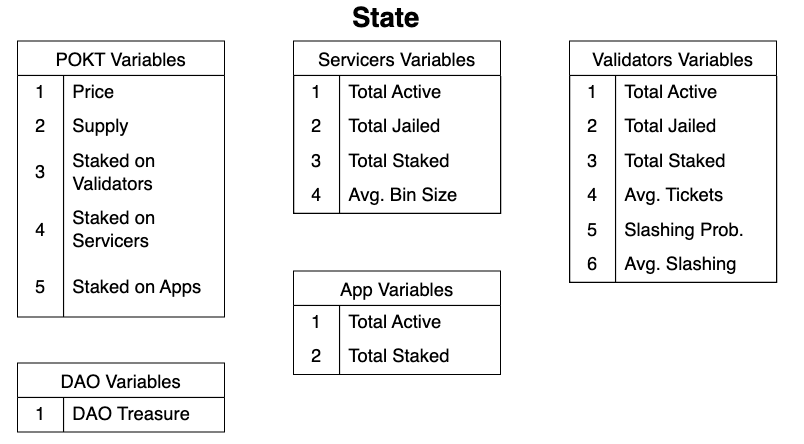

State Variables

These are the main variables that will be modified through each cycle of the model. I have moved some of them to the System Parameters section because they seemed like things that could be modified by stochastic processes (like the avg. DAO spending, or the avg. number of relays per session). I also divided the total staked $POKT into three categories, since each has its own meaning.

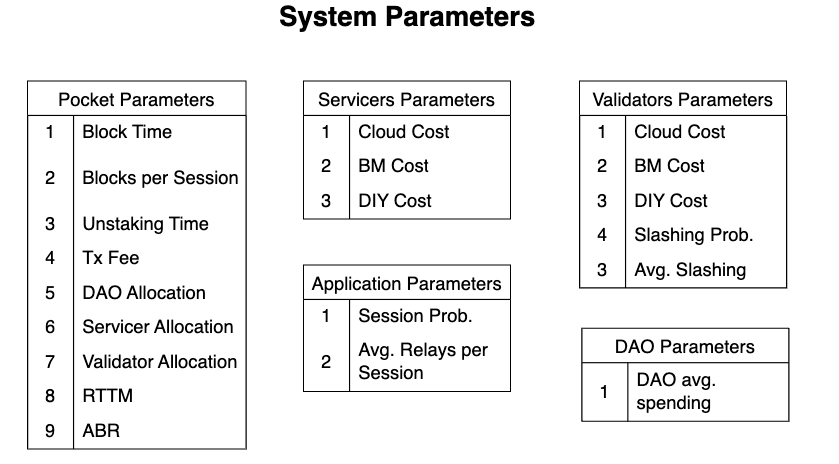

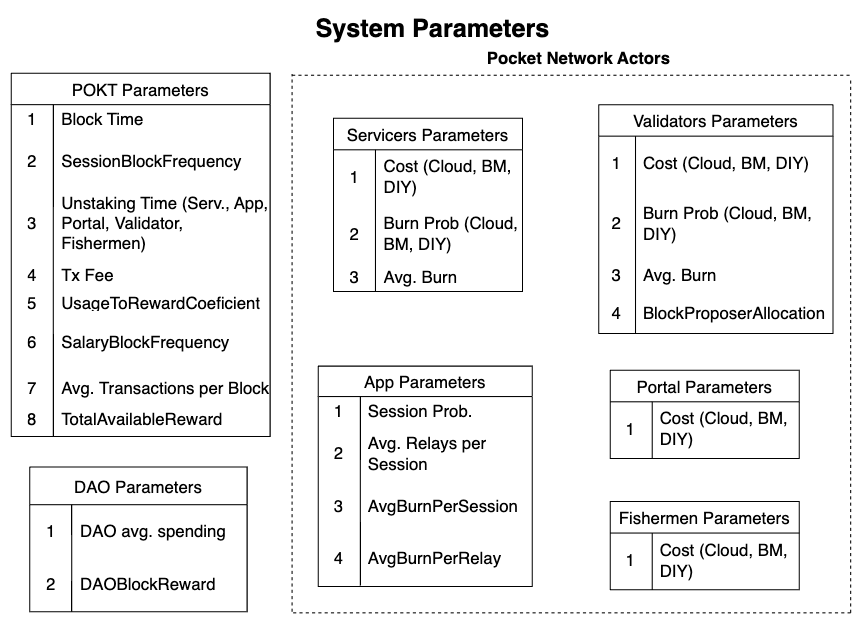
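As a minimal sketch of how these variables could be held in code, assuming hypothetical field names (the real list is in the tables above), a plain Python dataclass keeps the three staking categories separate while still exposing their sum. radCAD models take the initial state as a plain dict, hence the `asdict` call:

```python
from dataclasses import dataclass, asdict


@dataclass
class PoktState:
    """Hypothetical state variables for the macro model (names are illustrative)."""
    timestep: int = 0               # one step = one session in this sketch
    block_number: int = 0
    total_supply: float = 0.0       # all $POKT in existence
    dao_treasury: float = 0.0       # $POKT held by the DAO
    staked_apps: float = 0.0        # $POKT staked by applications
    staked_servicers: float = 0.0   # $POKT staked by servicers (node runners)
    staked_validators: float = 0.0  # $POKT staked by validators

    @property
    def total_staked(self) -> float:
        # The three stake categories stay separate, but their sum is handy for metrics.
        return self.staked_apps + self.staked_servicers + self.staked_validators


# The numbers below are placeholders, not real network data.
initial_state = asdict(PoktState(total_supply=1000.0, staked_apps=100.0,
                                 staked_servicers=400.0, staked_validators=50.0))
```

Because `total_staked` is a derived property, it is not stored in the state dict and cannot drift out of sync with its three components.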

System Parameters

These are the simulation parameters. Some of them, such as the RTTM or the ABR, are ones whose effects we want to explore; others are more fixed (like Block Time). I tried to include here all the parameters that should control minting and inflation.
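A minimal sketch of the parameter set, assuming hypothetical names and placeholder values. Each parameter is given as a list so that sweeps (for example over the RTTM or the $POKT price) can be expanded into concrete runs. The expansion below is a plain full grid built with `itertools.product`; radCAD has its own rules for combining sweep lists, which may differ, so treat this as an illustration only:

```python
from itertools import product

# Hypothetical parameter names; all values are placeholders, not proposals.
params = {
    "block_time_min": [15],             # assumed ~15-minute blocks (kept fixed)
    "blocks_per_session": [4],          # assumed session length in blocks (kept fixed)
    "rttm": [0.002, 0.004, 0.008],      # $POKT minted per relay (swept)
    "pokt_price_usd": [0.05, 0.10],     # $POKT price (swept)
    "dao_allocation": [0.10],           # share of minting locked by the DAO
}


def expand_sweep(params):
    """Yield one concrete parameter set per point of the full grid."""
    keys = list(params)
    for values in product(*(params[k] for k in keys)):
        yield dict(zip(keys, values))


runs = list(expand_sweep(params))  # 1 * 1 * 3 * 2 * 1 = 6 parameter sets
```

A full grid grows multiplicatively with every swept parameter, which is one concrete reason to keep the number of exposed levers small.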

Metrics

TODO: I have not created any specific metrics yet; these should be calculated from the state variables and parameters. Metrics are interesting numbers to track that play no role in the evolution of the system, like inflation or node-runner profits.
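Two candidate metrics, sketched under the assumption that the model steps one session at a time and that a session lasts one hour (so 24 * 365 = 8,760 steps per year). Both are pure functions of state and parameters, matching the idea that metrics never feed back into the system. The function names are hypothetical:

```python
# Steps per year if one step = one hour-long session (assumption).
HOURS_PER_YEAR = 24 * 365


def annualized_inflation(supply_before, supply_after, steps_between,
                         steps_per_year=HOURS_PER_YEAR):
    """Compound the supply growth observed over `steps_between` steps into a yearly rate."""
    growth_per_step = (supply_after / supply_before) ** (1.0 / steps_between)
    return growth_per_step ** steps_per_year - 1.0


def node_runner_profit(pokt_earned, pokt_price_usd, cost_usd):
    """USD profit of one node-runner setup; all inputs must cover the same period."""
    return pokt_earned * pokt_price_usd - cost_usd
```

For example, a setup that earned 1,000 $POKT at a hypothetical price of $0.05 while costing $20 over the same period yields a profit of about $30.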

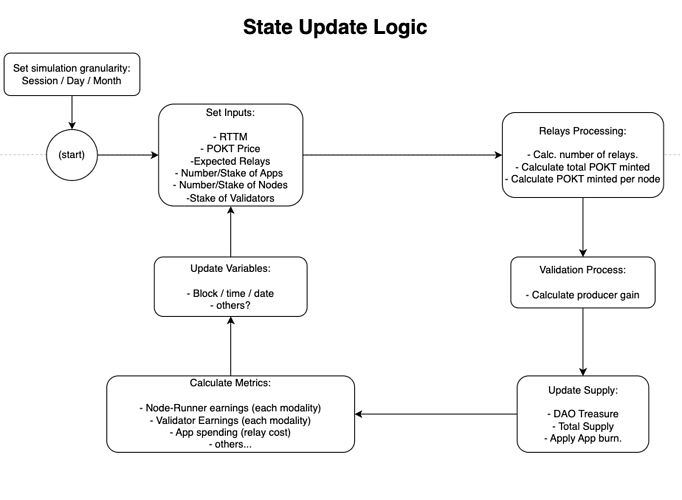

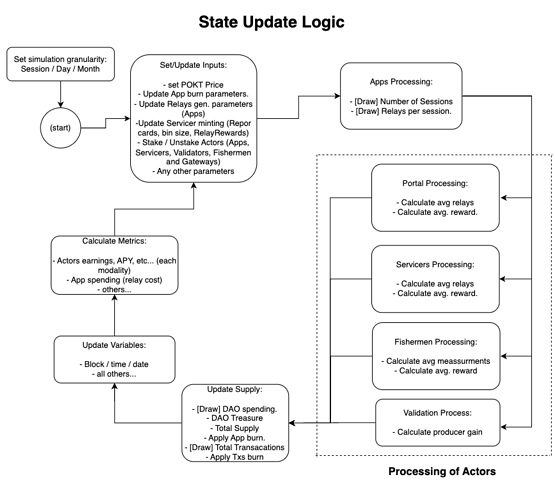

State Update Logic

This is a basic loop of the state update logic that should provide information on supply growth. I think we can leave out the Cherry Picker logic at this point and deliver a prototype with the simplest metrics, such as inflation and (avg.) profitability. Also note that there is no chain or regional logic embedded: this is intended to be a macro view of the Pocket Network, and we can then start building down into specifics.

Steps:

- The simulation should start by setting the granularity, which should be able to go as low as a single session. Block granularity makes no sense in Pocket economics.

- Set Inputs: Here the main parameters are modified before any update takes place. Things like the RTTM or the $POKT price are updated according to the sweeps, and app/node/validator growth is applied.

- Relays Processing: This is simply the calculation of a random number of relays to be processed, based on the current parameters. The total minting is calculated using the avg. bin of the network.

- Validation Process: Mostly a placeholder. Since there is no node-level analysis, the validation process does nothing but assign minted tokens to validators.

- Update Supply: Here the total supply change is calculated: the total $POKT generated, how much is locked by the DAO (10%), how much goes to the total supply (90% = servicers + validators) and how much is burnt (if there is app burning). DAO spending will also be handled here, as newly created $POKT.

- Calculate Metrics: Here all the metrics are produced; the operations here will depend on the metrics that end up being defined.

- Update Variables: Time step, block number and the remaining state variables.

- Loop…
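The steps above can be sketched as a minimal plain-Python loop with one session per step. It does not use radCAD; in a radCAD model each numbered step would roughly become its own partial state update block. All parameter names and values are hypothetical placeholders, relays are drawn from a normal distribution clamped at zero, the validator split is an assumed parameter, and DAO spending is modelled as $POKT moving from the treasury into circulation, so `total_supply` always equals `dao_treasury + outside_dao`:

```python
import random

# Hypothetical parameters; every name and value here is a placeholder.
PARAMS = {
    "blocks_per_session": 4,           # assumed session length in blocks
    "sessions": 24,                    # number of steps (session granularity)
    "rttm": 0.004,                     # $POKT minted per relay
    "avg_bin_multiplier": 1.0,         # network-average stake-bin reward factor
    "avg_relays_per_session": 1e6,     # mean relays per session at t = 0
    "relay_growth_per_session": 0.0,   # relative growth of mean relays per session
    "relay_noise": 0.05,               # relative std. dev. of relays per session
    "dao_allocation": 0.10,            # share of minting locked by the DAO
    "validator_share": 0.01,           # assumed share of node rewards paid to validators
    "app_burn_per_relay": 0.0,         # $POKT burnt per relay (0 = no app burning)
    "dao_spending_per_session": 0.0,   # $POKT released from the DAO each session
}


def run(params, initial_supply, initial_dao, seed=0):
    rng = random.Random(seed)
    state = {"timestep": 0, "block_number": 0,
             "total_supply": initial_supply, "dao_treasury": initial_dao,
             "outside_dao": initial_supply - initial_dao,
             "servicer_rewards": 0.0, "validator_rewards": 0.0}
    history = [dict(state)]
    for t in range(params["sessions"]):
        # 1. Set inputs: apply growth to parameters that drift over time.
        mean_relays = (params["avg_relays_per_session"]
                       * (1 + params["relay_growth_per_session"]) ** t)
        # 2. Relays processing: random relay count, minted with the average bin.
        relays = max(0.0, rng.gauss(mean_relays, params["relay_noise"] * mean_relays))
        minted = relays * params["rttm"] * params["avg_bin_multiplier"]
        # 3. Validation (placeholder): give validators a share of the non-DAO minting.
        dao_share = params["dao_allocation"] * minted
        node_share = minted - dao_share
        validator_reward = params["validator_share"] * node_share
        servicer_reward = node_share - validator_reward
        # 4. Update supply: minting, app burning and DAO spending (capped by the treasury).
        burnt = relays * params["app_burn_per_relay"]
        dao_spend = min(params["dao_spending_per_session"], state["dao_treasury"] + dao_share)
        new = dict(state)
        new["total_supply"] += minted - burnt
        new["dao_treasury"] += dao_share - dao_spend
        new["outside_dao"] += node_share + dao_spend - burnt
        new["servicer_rewards"] += servicer_reward
        new["validator_rewards"] += validator_reward
        # 5. Metrics: derived numbers that are never read back by the loop.
        new["inflation_per_session"] = new["total_supply"] / state["total_supply"] - 1
        # 6. Update variables: time step and block number.
        new["timestep"] = t + 1
        new["block_number"] = state["block_number"] + params["blocks_per_session"]
        state = new
        history.append(dict(state))
    return history
```

For example, `run(PARAMS, initial_supply=1e9, initial_dao=1e8)` returns the initial state plus one state dict per session, from which supply growth and per-session inflation can be plotted. Storing the metric in the same dict is only a convenience; nothing in the loop depends on it.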

The expected behavior of this model is to produce information on the supply given certain conditions of the Pocket Network, such as relay growth, node growth, RTTM planning, $POKT price, etc. The model should be able to produce the expected inflation, the profitability of different node-runner setups, the total network net earning (minted value - network cost) and so on. These are just a few that come to mind.
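For the total network net earning, a minimal sketch, assuming every node has the same running cost and that minting, price and cost all refer to the same period (names and figures are hypothetical). The break-even $POKT price, at which minted value exactly covers network cost, follows from the same formula:

```python
def network_net_earning(minted_pokt, pokt_price_usd, n_nodes, cost_per_node_usd):
    """Minted value minus the cost of running the network, both in USD."""
    minted_value = minted_pokt * pokt_price_usd
    network_cost = n_nodes * cost_per_node_usd
    return minted_value - network_cost


def break_even_price(minted_pokt, n_nodes, cost_per_node_usd):
    """$POKT price at which the minted value exactly covers the network cost."""
    return n_nodes * cost_per_node_usd / minted_pokt
```

With 1,000 $POKT minted, 10 nodes and $20 per node, the break-even price is $0.20; any higher price gives a positive net earning.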

So, what do we want?

This is a very rough model: nothing is coded yet, everything is possible, and all of the above can be changed.

The idea is to discuss in the open how far the model should go and what it should include before writing any code, so that we are clear on what we can expect to obtain in a given time frame.

Once we agree on the basic elements and structures that should be included in a first version, we can start coding or estimate how much effort this modeling would cost.

To be clear, this model is not only math and diagrams: the idea is to produce an interactive model for the community, yes, with a UI hosted somewhere (hopefully).

So we would need to know which levers you would like to pull, like being able to set a range of variation for the RTTM or the $POKT price over time. Anyone can ask for something, but keep in mind that models that are too flexible end up being too complex, so the “friendly” version should be kept simple.
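One way to expose such levers without making the model too flexible is to describe each one by a small schedule. A minimal sketch, with hypothetical function names and placeholder values: a linear ramp for a planned RTTM change and a bounded random walk for a $POKT price path. A UI would then only need a few inputs per lever (start, end, bounds, volatility):

```python
import math
import random


def linear_schedule(start, end, steps):
    """Planned path, e.g. an RTTM change from `start` to `end` over `steps` sessions."""
    if steps == 1:
        return [start]
    return [start + (end - start) * i / (steps - 1) for i in range(steps)]


def bounded_random_walk(start, low, high, steps, volatility, seed=0):
    """Multiplicative random walk clamped to [low, high], e.g. a $POKT price path."""
    rng = random.Random(seed)
    values = [start]
    for _ in range(steps - 1):
        shock = math.exp(rng.gauss(0.0, volatility))  # log-normal step keeps values positive
        values.append(min(high, max(low, values[-1] * shock)))
    return values


rttm_path = linear_schedule(0.004, 0.002, 5)
price_path = bounded_random_walk(0.05, 0.02, 0.10, 5, volatility=0.1)
```

Each path can then be read one value per session in the "Set Inputs" step, so the rest of the loop does not need to know how the lever was defined.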