I would be absolutely thrilled if we could get stake-weighted sessions back on the table. I think dev-wise it is completely doable, and worth the spend, given a year or so before v1. I believe we can do it without touching either the session-selection code or the cherry-picker code.

I completely agree with this:

The amount of time and effort that has to go into accounting for all the changes is hurting the community and project IMO. If the community can’t understand basic rewards, and only technical insiders truly understand the nuances, this creates a natural blocker for more folks getting involved in POKT and in node running.

My reservation regarding weighted-stake mechanisms (whether PIP-22 or something like Good Vibes) was that they would make POKT significantly more complex and make the economics unapproachable. That has indeed happened, and as @poktblade mentioned, the technical debt is already significant and there is still a lot of work to do to iron this out.

Sooo… instead of complicating things further by neutralizing PIP-22 and doing weighted stake via weighted session selection (as both @poktblade and @msa6867 have suggested), why not just up the minimum stake to 30k and get our economics back to where everyone can track and understand them?

Wouldn’t this require unstaking?

Yes, but the whole network would have to progressively unstake, just like when all nodes were at 15k. Upping the min affects everyone (except for the 481 nodes that are at 30k… they don’t have to unstake). The network is basically in the same position it was in prior to PIP-22, when upping the min to 30k would have affected all nodes equally. If nodes today staked at 30k… there would still be fewer than 22k nodes, which means we still wouldn’t have significant chain bloat (like we had with 45k+ nodes in the summer), and we would be back to classic POKT economics.

If team technical efforts were instead put toward the lite client (which most of the network is running on already), then those 22k nodes could theoretically have the resource footprint of a few thousand nodes. PIP-22 was originally proposed as a way to reduce the network’s cost, but from my understanding the lite client can do more on that front.

I’m NOT convinced weighted stake has so far been a net positive for POKT, because of the divide it is creating. From my understanding, most nodes are on a lite client anyway. Though PIP-22 has had good effects in addressing chain bloat, it has not produced the desired economic effect on the token… which was the driving reason to embrace node consolidation. PIP-25 is now trying to patch 2nd-order issues that came with PIP-22, which would complicate our economics even further.

What is the reason we should NOT pursue upping the min to 30k, since it would affect almost all nodes evenly?

What are your thoughts on straight-up changing min stake to 30k with no change to PIP-22 parameters? No one at 30, 45 or 60k would need to unstake, and those at 15k would at most have to unstake half (or add new POKT) to get up to 30k, so between the summer and a minstake raise now, no group would be subjected to two episodes of unstaking. (The much tighter clustering of node stakes causes any “second order effect” to all but disappear.)

Or why not just move it to 60k?

From a system perspective it makes sense, but at what cost? That is what I do not know. Who gets driven from the ecosystem because they cannot or will not consolidate? If it is just laggard, misconfigured nodes that have been all but forgotten, then great… but what if we end up throwing out quality diversity that is desirable for network health and robustness? Those are the questions I’d like to see answered before passing a motion to raise minstake. I would not presume that the groups most affected by a decision to raise minstake are well represented or vocal in the various social media channels, so we as a community ought to be proactive in doing discovery in this area if we are going to seriously explore that avenue.

If we do this, it will lead to mass unstaking again and de-consolidate the network, except for those folks trying to be validators, at which point I believe we might need to increase the validator percentage of the relay pie again, thereby creating varying incentives at the edges (15k, and 60k+).

I can’t pretend I know what the solution is here, but it feels like we released (or canary-released) Stake-Weighting, Sustainable Stairway, FREN, and LeanPOKT all so close to each other that we’re not able to take into account the effects of each change in isolation.

However, with that being said, changing the minimum stake to 30k, or 45k, or 60k, seems like we’re just kicking the can down the road, and penalizing non-whales or long-term holders, which would have two effects:

- Bad PR for the project

- Force those who still are interested to unstake and move their smaller stakes to the few providers that support 0-node-minimum staking with revshare (e.g., poktpool, sendnodes, tPOKT, c0d3r), which would further centralize the network amongst larger providers.

I reiterate that I don’t have a solution here, but I am just expressing a few personal views regarding some of the potential solutions mentioned here.

I also do agree with @poktblade that our economics are hard to follow. I hold advanced degrees in physics and even my head is spinning with some of the stuff we’re doing here. While I appreciate the work that @Andy-Liquify, @msa6867, and @adam have done over the course of the year to get inflation and consolidation sorted out, I do think we need to figure out something more tangible, seeing as two of the larger node-providers here (POKTScan, C0D3R) are in support of this proposal.

Hmmm. It’s a nice soundbite, but is it true? Sustainable Stairway is completely orthogonal to the rest and hits the demand side, not the supply side. While it is rather unfortunate that FREN hit at the same time as stake weighting, it is rather trivial to apply an appropriate discount to adjust for FREN lowering the RTTM. Please refer back to slides 6-8 of the slide deck posted above for how to adjust for various factors (including RTTM) that have changed since June, and see slides 9-10 for September actuals for comparison.

But point taken re LC and stake weight hitting at the same time.

Both LC and PIP-22 are v0 solutions to provide infra cost relief in the interim between now and the v1 release. Both go away in v1. Note, however, that it was determined quite a while ago by the powers that be (long before Andy introduced PIP-22) that stake-weighted rewards will be a permanent feature of v1 (not in the form of PIP-22 but via a completely different mechanism). Is it therefore really out of line, or too complicated, for node runners to factor in how vertically vs. horizontally they want to deploy their POKT, since they are going to have to do it in v1 anyway?

I realize this is veering off the topic of the technical concerns poktscan has raised. But that raises the main comment/question I would have for poktscan: the WAGMI proposal changed the rewards structure by over a factor of 3. FREN changed the reward structure by another factor of 2. LC and PIP-22 both sought to provide a factor of 2 or more in cost savings to the network, and both seem to pretty much be achieving that. By contrast, from July until the present, poktscan has invested how many man-months contending over what: a potential ±10% effect that may meander a bit as noderunners upgrade their QoS but by and large will average out over time pretty close to zero? Is all that effort really worth it? At what point is it making a mountain out of a molehill? Further, as @BenVan pointed out a couple of times all the way back in July, it seems the main concern keeps coming back to the cherry picker. Has it ever occurred to poktscan to unleash the tremendous amount of analytical talent you have on optimizing the cherry picker rather than being single-focused on lowering the exponent?

Just wanted to give a shout-out here to @cryptocorn for spearheading FREN…

Hmm, is this true? Should the DAO feel obligated to take an action just to satisfy a large node runner? Even if the action is to skew rewards in their own favor? The problem I have with the Cod3r comment is that it does not even address the technical, second-order, ±10% effect that PUP-25 is all about… by their own admission they “have not done the math.” The quotation you pulled is an excellent argument against raising minstake but is almost completely a non sequitur as it relates to the current state of the system, PIP-22 with PUP-21 parameters. Let’s take a look:

“A well-functioning, robust network needs to be diversified.” True, and that is how it is today with the current parameter settings; there is no need to change the exponent value in order to achieve what the network already has.

“Today, the only economically sensible option is 60k min stake.” False. A quick look at the poktscan explorer will reveal 21k nodes who have found various economically sensible reasons not to stake to 60k.

“Why can’t we reward smaller nodes adequately so that we can have more diversity?” False premise. Please refer again to slide 10 to see that smaller nodes are rewarded today pretty much identically to what the reward would currently be (after WAGMI, FREN, etc.) if PIP-22 had never passed. If Cod3r feels rewards are not adequate, their beef is with FREN / emission control measures, not with PIP-22, and a completely separate proposal should be put forward to change the way RTTM is set so as to raise emissions.

“15k nodes have their advantages (such as lower barrier to entry to the network, diversifying assets among node runners, easier time to sell and buy them over OTC, which helps with liquidity). Curbing variety is ultimately not good for the network.” True, true, and true. And all completely non sequitur as they relate to PIP-22/PUP-21 (but good points for any discussion of raising minStake).

Thanks for the quick response. Will answer in turn.

- You’re right, it is a nice soundbite, and you’re right that Sustainable Stairway is orthogonal. I typed this up very late last night, so my faculties were not at their sharpest.

- Re @Cryptocorn: thanks for giving credit where credit is due.

- Should the DAO feel obligated to satisfy a large node runner? No, absolutely not. HOWEVER, I believe the majority of DAO voters are node runners, either for themselves or for businesses they built on top of the protocol, so even if I don’t think we should optimize for them, they do hold a large portion of the vote (if memory serves me right) and may inevitably skew the DAO to vote one way (or may have already).

As for this soundbite of yours:

“Today, the only economically sensible option is 60k min stake.” False. A quick look at poktscan explorer will reveal 21k nodes who have found various economically sensible reasons not to stake to 60k

I am someone who still has many 15k nodes because the places I have them hosted are not charging me any differently for having, for example, four 15k nodes vs. one 60k node, so I never had to unstake and incur a 21-day penalty, and I chose not to. So this assumption of yours isn’t completely true, at least not in my case, at least with respect to 15k vs. 60k.

Moving on, I believe you have rightly deduced that the issue may not be with PIP-22/PUP-21 but with the other proposals, and regarding your final quote about retaining 15k nodes, I agree wholeheartedly.

I think what I’m trying to get to here, albeit poorly, is what @poktblade and @shane said in plain English:

I completely agree with this

The amount of time and effort that has to go into accounting for all the changes is hurting the community and project IMO. If the community can’t understand basic rewards, and only technical insiders truly understand the nuances, this creates a natural blocker for more folks getting involved in POKT and in node running.

This, at the end of the day, may be a documentation/information-dissemination issue more than anything else, and what we’re doing here is going back and forth, with MSA on one side and POKTScan on the other. This might be a good place for PNF to outsource the creation and documentation of all of these changes, including explainers on how each change works, before and after, and how they work in combination with each other.

I agree that this discussion has dragged on, and it is hard to say that it is really useful for the community.

I was hoping to get hard feedback on the analysis that we provided, but I ended up discussing the same simplified (and flawed) model.

- No challenging point was raised against the metrics that prove that the fairness issue exists.

- No new supporting arguments were presented to support the linear model. Specifically, arguments that can reconcile the current values and the metrics observed in the report.

- The only point analyzed was the QoS inhomogeneity across the different stake bins (section 4.4). This was not the central issue that we raised, and it was not solved due to PIP-22 mechanics.

I really was expecting you to read the long version.

You should not claim to be unbiased; we could claim the same, as our reports were done in good faith. You should instead disclose your relationship to past proposals and your investments. The community will then decide whether there is bias or not.

I feel that our discussions are going nowhere; you are focusing on the wrong topic and disregarding most of the analysis that we made. Once again you are labeling the issues we raise as “secondary effects”.

I totally understand your position. Our argument is technical and is a follow-up to previous technical issues that we raised during the PIP-22 voting phase. Sadly, some topics cannot be simplified without making big mistakes (that’s precisely what we think happened with linear weighting).

I’ve never been a fan of PIP-22; my intention with this proposal was to correct the unfairness that we observe without changing the rules once again, changing the status quo as little as possible.

At POKTscan we have received lots of questions about PIP-22 and created lots of metrics to address the new network landscape. It was not easy to keep the numbers accurate and the community happy, and we are still iterating on how to show the numbers to better serve our users. Sadly, I cannot say that after a month of PIP-22 the community really gets even the simple stuff behind it (leaving aside our in-depth analysis). This is not a judgment on the community but on ourselves and the (perhaps unnecessary) complexity we are pushing into the system.

If you ask me personally, I would have been more comfortable with raising the minimum. Back during the PIP-22 discussions that was my main concern: was PIP-22 a twisted way to raise the minimum? I was told that it was not. Sadly, these were all talks outside the forum (a lesson learnt here).

I think the answer is the unpopularity of the measure. But it would be fair in the end: upping the stake only changes the entry level and then keeps things fair and simple.

We have analyzed the Cherry Picker in depth. You might like the CP or not, and it might not be perfect, but it is working and is not doing things that it was not intended to do, at least not in sensible amounts.

We went even further and modeled the whole thing using a statistical model. We validated the model against real-life sessions and found little deviation. I can say that the Cherry Picker is working as it was designed. If we have not unleashed our holy wrath upon the CP, it is because we have not found a reason to do so. Maybe it is because we don’t have enough documentation? Can’t say. As @ArtSabintsev pointed out, it is hard to find documentation of the changes and the features. Even the PIP-22 / PUP-21 documentation is lacking.

We focus on the PIP-22 because:

- It changed the rules for those who did not want to enter the consolidation game.

- It is only raising the min-stake slowly and bleeding non-consolidated nodes in the meantime.

- We warned the community that this could happen during the PIP-22 voting phase. Sadly our voice was not loud enough.

- We talked to the PIP-22 proponents before the voting phase to warn them about this issue.

- We accepted the proponent’s concern that our arguments were based on simulations and waited to have data before returning to the subject.

- Opinions based on data are essential for building a solid project.

What does that accomplish? My entire argument is that having weighted stake with variables that can be tweaked to show preferential treatment towards a weight class creates class wars and makes POKT significantly more complex to understand.

How is nuking 15k nodes going to address either of those concerns? Nuking just one of the node classes to the benefit of the other classes is literally the opposite of what I’m suggesting.

If you want to argue that all nodes should be required to be in the 60k bucket, that in principle is what I’m suggesting… vs. having tension between different weight classes. At 60k, PIP-22 would be neutralized anyway… because all nodes would be in the same bucket (which is the way it should be). We can debate what the min stake should be, but 60k seems ridiculous when we can accomplish the intended effect of PIP-22 with 30k and the lite client.

What “can” are we kicking down the road with upping the minimum stake? I’m not tracking your meaning.

Regarding your effects… Bad PR is already upon us with the tension in the community over how each node class should be treated. Trying to “balance” the classes because of 2nd-order effects of PIP-22 is why we are here today.

The argument of centralization can be made about any change to the network. Changing our economics so that everyone has to rely on the authors to understand what’s happening results in a lot of “centralizing” in POKT.

BTW, POKTscan and c0d3r are the only network monitoring tools folks have, and they can’t even decide on what the network average is per node. Having an economic model which can’t be easily understood will drive centralization of control to technical insiders who have data or data skills that the rest of the ecosystem doesn’t have.

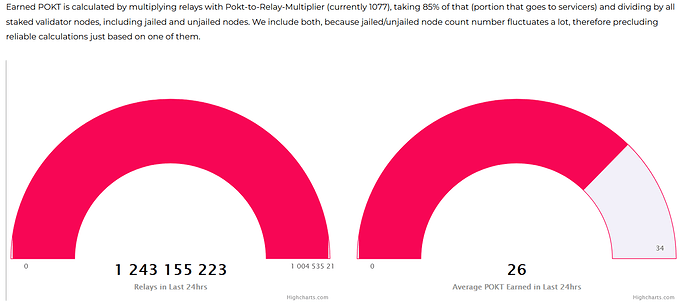

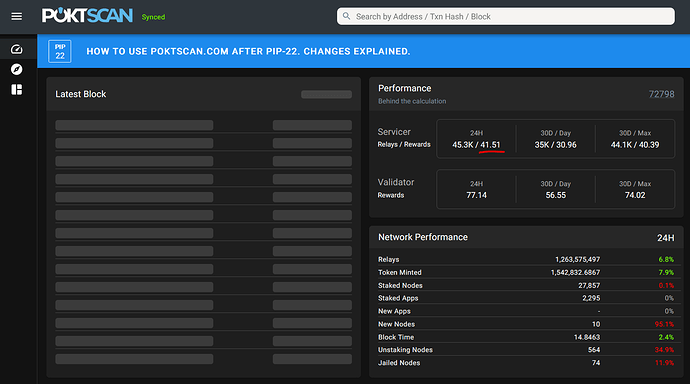

c0d3r:

POKTscan:

That is where we are today. Long-time POKT participants and core contributors can’t follow our current economics without getting confused at the results… so that will lead to centralization, where those with the resources and teams to figure these nuances out will take the business regardless. That is far more centralizing in my mind than fairly requiring ALL to be in the same economic bucket, where all node runners are on the same team and treated equally.

This is getting pretty thick man.

Every single aspect of your stance has been challenged and successfully debunked.

PIP-22 works exactly as designed and is in no way “unfair”.

No matter how many times you repeat your claim or how many pages you add to your PDF you have not “proven” a single thing. Your data set is insufficient (in size), inappropriate (in scope) and your interpretation is biased.

There is only one source of unfairness in the network and it is intentional. The Cherry Picker rewards high QOS nodes more than low QOS nodes. The fact that your “metrics” can’t even confirm that simple fact is a further expression of its inadequacy.

What? No “new” arguments? How about the “old” (and still obvious) argument? The code rewards the 15, 30, 45 and 60k bins EXACTLY fairly. Daily and weekly bin analyses by provider show this clearly.

I don’t know if this is ego (having fallen in love with your conclusion) or an agenda (PoktScan has almost exclusively 15k nodes), but whatever the reason, this proposal seeks to punish those who have already taken a 21-day income loss in order to support the network and force us to take another 21-day loss in order to get into the 15k bucket so that we can get fair treatment.

This proposal should be withdrawn.

100% agree with every word said by BenVan above.

The only direction ServicerStakeFloorMultiplierExponent should be changed is up, to >1, to encourage further consolidation as was originally intended.
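To make the direction of the exponent concrete, here is a minimal sketch of a PIP-22-style weight. It assumes, rather than quotes, the on-chain formula: the stake is rounded down to a multiple of a 15k floor, capped at a 60k ceiling, and the number of floor multiples is raised to ServicerStakeFloorMultiplierExponent and divided by SSWM. The constant and function names are illustrative and should be checked against the PIP-22 specification.

```python
# Minimal sketch of a PIP-22-style servicer weight.
# ASSUMPTION: this approximates the PIP-22 design; it is not copied from
# pocket-core, and the constant names are illustrative.
FLOOR_MULTIPLIER = 15_000   # assumed stake floor per bin, in POKT
WEIGHT_CEILING = 60_000     # assumed stake above which no extra weight is given


def bin_multiple(stake: int) -> int:
    """Number of 15k floor multiples counted for a stake (1 to 4 here)."""
    floored = min(stake - stake % FLOOR_MULTIPLIER, WEIGHT_CEILING)
    if floored < FLOOR_MULTIPLIER:
        raise ValueError("stake is below the minimum bin")
    return floored // FLOOR_MULTIPLIER


def servicer_weight(stake: int, exponent: float, sswm: float = 1.0) -> float:
    """Reward multiplier per relay: bin_multiple ** exponent / SSWM."""
    return bin_multiple(stake) ** exponent / sswm


def reward_per_15k(stake: int, exponent: float) -> float:
    """Weight per 15k of counted stake; 1.0 means 'same as a 15k node'."""
    return servicer_weight(stake, exponent) / bin_multiple(stake)


if __name__ == "__main__":
    for exponent in (0.7, 1.0, 1.2):
        row = [round(reward_per_15k(s, exponent), 3)
               for s in (15_000, 30_000, 45_000, 60_000)]
        print(f"exponent={exponent}: {row}")
```

Under these assumptions every bin earns the same per 15k at exponent 1 (linear), bins above 15k earn more per POKT when the exponent is above 1 (rewarding consolidation), and less when it is below 1.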

Thank you for your answers. Please don’t read my asking these questions as support for either of the positions behind them… I used the questions to flesh out the thought process behind your original comment. I am taking the concerns you and @poktblade have raised regarding tech debt very seriously and soberly.

@ArtSabintsev asks very good questions regarding what the next course of action should be. I will give that more thought. I know that from a tech-debt perspective, lowering the exponent is not the solution. In the current (linear) configuration, the one main consideration regarding whether to consolidate 4 similar nodes into one or keep them separate is the 21-day downtime it incurs. Apart from that factor, you might as well consolidate. And as I said above, that relatively low level of complexity (“to consolidate and face 21 day down time or not to consolidate and eat the extra infra costs”) is something we might as well get used to, since it is a permanent feature of v1 totally independent of PIP-22. But set the exponent to anything less than 0.9 or 0.95 and the answer becomes much more complex, which is one of the main reasons we avoided it in the first place.
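Under linear weighting, the trade-off described here reduces to a simple break-even calculation. The sketch below is illustrative only: it assumes total rewards are unchanged once the consolidated node is re-staked, that unstaked nodes are shut down immediately (earning nothing and costing nothing while unbonding), and a flat per-node daily infra cost. The reward and cost figures in the usage example are made up.

```python
# Rough break-even for consolidating N nodes into fewer nodes.
# ASSUMPTIONS (illustrative, not from the thread):
#   - linear weighting (exponent = 1): total rewards are unchanged once re-staked
#   - unstaked nodes are shut down at once, so they earn nothing and cost
#     nothing for the whole unbonding period
#   - infra cost is a flat per-node daily cost; price moves and compounding ignored
def break_even_days(
    nodes_before: int,
    nodes_after: int,
    daily_reward_per_node: float,
    daily_infra_cost_per_node: float,
    unbonding_days: int = 21,
) -> float:
    """Days after re-staking until infra savings repay the net income lost while unbonding."""
    removed = nodes_before - nodes_after
    if removed <= 0:
        raise ValueError("consolidation must reduce the node count")
    if daily_infra_cost_per_node <= 0:
        raise ValueError("infra cost must be positive")
    # Each removed node forgoes (reward - cost) per day while its POKT is unbonding.
    net_loss = removed * (daily_reward_per_node - daily_infra_cost_per_node) * unbonding_days
    # Afterwards the same rewards are earned with `removed` fewer nodes to run.
    daily_savings = removed * daily_infra_cost_per_node
    return max(0.0, net_loss / daily_savings)


if __name__ == "__main__":
    # Made-up numbers: 10 POKT/day reward and 2 POKT/day infra cost per 15k node.
    print(break_even_days(4, 1, daily_reward_per_node=10.0, daily_infra_cost_per_node=2.0))
```

With these made-up inputs the break-even is 84 days after re-staking. Under these assumptions the result depends only on the unbonding period and the reward-to-cost ratio, not on how many nodes are merged, which is why the linear case stays simple; a nonlinear exponent would add a permanent reward change on top.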

In retrospect I rather regret introducing the notion of nonlinear weighting in the first place. Poktscan would never have dreamed up nonlinear weighting, or insisted that it is the panacea they are making it out to be, if the hook hadn’t been there to begin with.

** PLEASE NOTE THAT I RETRACT THE ENTIRETY OF THIS SECTION **

Poktscan has indicated several times that they plan to update this in an upcoming release to show the actual base reward for the 15k bin rather than the “base rewards times SSWM” that they currently show. Then it will match cod3r. IMO it was a mind-boggling design choice to report “base rewards multiplied by SSWM,” label it “rewards,” and somehow think that was going to be intuitive or helpful for the community. I try hard to always believe the best, but when I see how much damage this one design choice has caused (how much confusion, fear, node runners looking at their numbers and seeing that they were less than the average poktscan showed, etc., etc., etc.), I can’t help but wonder if the purpose of the bizarre design choice was, in fact, to intentionally sow FUD regarding PIP-22 and give node runners the impression that they were getting short-changed. In essence, it sows the same subconscious message over and over again: “Your rewards ought to have been around 41 and would have been if those PIP-22 folks didn’t go and mess up the system. Instead you only get 26.”

Sometimes I wonder: is linear weighting (“I get the same amount of pokt whether I stake my 60k total pokt on 1 node or 4 nodes”) really that complex to get? Or is it just the strain of 2 months of being hammered with the incessant messaging that it is broken that is driving confusion and division in the community?

** END OF RETRACTION **

And replace it with the following:

** The data does in fact match between poktscan and cod3r. The two have just chosen two different methodologies to report the data. It is my understanding that in an upcoming release it is PoktScan’s intention to update their methodology to be similar to that currently used by cod3r, at which point the numbers displayed should be identical. **

Sorry, but I disagree. Poktscan had a fair way of displaying on-chain data, albeit less intuitive for some users, and they iterated on it quickly after release and have implemented multiple user suggestions since the release of PIP-22. Let me be fully clear: the amount of code changes I’d imagine they had to account for in their indexer is not something to be taken lightly, and they made an amazing effort to continue supporting the community by providing on-chain analytics for node runners to view. UX is difficult; showing the right metrics on a new feature set takes refinement. Give it some time; this is the technical debt I am talking about.

Just false. I don’t see the need to drop to this level of scrutiny and accusations as a counterpoint to the above proposal, and these types of comments will derail the proposal at hand.

I retract what I said and apologize. At poktscan’s discretion, I can delete what I wrote or leave it for the record that in this case I overstepped my bounds.

@RawthiL, et al. I apologize again for letting my emotions get the best of me and letting my comments get out of hand. You guys have put in a tremendous amount of work, which I respect immensely. I will focus on the technical issue at hand going forward.

Your expectation was well founded and met prior to my reply. As I promise to lay off any form of throwing barbs, it would be welcome to see that reciprocated.

My suggestion to apply the knowledge you gained about nuances within the cherry picker to optimizing the cherry picker was not rhetorical but sincere. You guys probably have a better empirical understanding of the cherry picker than just about anyone in the ecosystem. E.g., if the amount of transactions occurring in the flat ramp-up phase of a session is causing a QoS problem, then let’s shorten the transition period. It won’t even take a DAO vote, just making the case to the dev team to change a cherry picker parameter. I’ve already fixed a couple of problems in the cherry picker; I would be happy to collaborate with you guys on further improvements.

Right now, what I promise to do is shut up and re-engage the pdf to see what I’m missing from the first read.

After reading through the proposal document, the basic thesis of @RawthiL’s argument is actually quite simple. I’ll share a summary that I wrote for myself to help my understanding, in case anyone else finds it useful:

At the beginning of any given pocket session, high QoS nodes will lose some relays to low QoS nodes, as it takes time for the cherry picker to measure latency and gradually allocate more relays to the high QoS nodes.
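The ramp-up effect in this summary can be illustrated with a toy model. The thread does not spell out the cherry picker’s actual algorithm, so the sketch below simply assumes that a fixed warm-up share of each session’s relays is split evenly among its servicers before the rest is split in proportion to a QoS score. `ramp_fraction` and the QoS scores are hypothetical.

```python
# Toy model of relay allocation within one session.
# ASSUMPTION: this is NOT the real cherry picker. It assumes a warm-up phase
# where relays are split evenly, followed by QoS-proportional allocation.
from typing import List


def split_session_relays(total_relays: float, qos_scores: List[float],
                         ramp_fraction: float) -> List[float]:
    """Relays each servicer receives in one session under the toy model."""
    if not 0.0 <= ramp_fraction <= 1.0:
        raise ValueError("ramp_fraction must be between 0 and 1")
    n = len(qos_scores)
    ramp_relays = total_relays * ramp_fraction   # split evenly while latency is measured
    steady_relays = total_relays - ramp_relays   # split by QoS once measured
    total_qos = sum(qos_scores)
    return [ramp_relays / n + steady_relays * q / total_qos for q in qos_scores]


if __name__ == "__main__":
    qos = [3.0, 1.0]  # one high-QoS and one low-QoS servicer (made-up scores)
    ideal = split_session_relays(1000, qos, ramp_fraction=0.0)
    actual = split_session_relays(1000, qos, ramp_fraction=0.1)
    for name, i, a in zip(("high-QoS", "low-QoS"), ideal, actual):
        print(f"{name}: {a - i:+.1f} relays vs. pure QoS allocation")
```

In this toy model the high-QoS servicer hands a fixed number of relays to the low-QoS one in every session, so the transfer scales with the number of sessions attended. Shortening the transition period, as suggested earlier in the thread, corresponds to lowering `ramp_fraction`.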

Now that PIP-22/PUP-21 is active, a 15k node needs to attend far more sessions than previously to earn the same reward. If you’re operating a low QoS 15k node, this is a good thing: more sessions means more relays gained at session beginnings. If you’re operating a high QoS 15k node, you lose more relays for the same reason.

This issue is reversed for 60k nodes because (pokt-for-pokt) they earn their rewards over 1/4 the sessions. A high QoS 60k node loses less reward to lower QoS nodes, and a low QoS 60k node gets fewer opportunities to scavenge relays.

It’s important to understand this in the context of a total POKT daily mint rate that is fixed by FREN. So the higher reward given to low QoS 15k and high QoS 60k nodes must come at the expense of the rest.
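The zero-sum point can be sketched by treating the daily mint as fixed, as this summary does, and sharing it in proportion to weighted relays. The group names and relay figures below are made up for illustration.

```python
# Sketch: splitting a fixed daily mint in proportion to weighted relays.
# ASSUMPTION: the daily mint is treated as fixed; group names and numbers
# are illustrative only.
from typing import Dict


def distribute_mint(weighted_relays: Dict[str, float], daily_mint: float) -> Dict[str, float]:
    """Share a fixed mint among groups in proportion to their weighted relays."""
    total = sum(weighted_relays.values())
    return {group: daily_mint * w / total for group, w in weighted_relays.items()}


if __name__ == "__main__":
    before = distribute_mint({"group_a": 100.0, "group_b": 100.0}, daily_mint=1000.0)
    after = distribute_mint({"group_a": 110.0, "group_b": 100.0}, daily_mint=1000.0)
    print(before)
    print(after)
```

Because the total is pinned, any extra weighted relays credited to one group lower every other group’s payout, even though the other groups’ own relay counts did not change.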

I have no idea if this thesis is correct or not, but I do think it’s worthy of investigation. I’ve read through all the comments listed, and thus far I don’t see anyone refuting the thesis. Nobody has proven it wrong or explained why it is invalid. The closest I’ve seen is @msa6867 acknowledging that the effect may be real but is likely only causing a ±10% variation from before. This may be true, but it needs to be proven.

Is it possible for the Pocket team to release relay data from the portals, which we can then use to independently and quantitatively determine the true scale of this effect? Back at InfraCon there was a lot of talk of postgres database dumps, which would be invaluable in resolving this debate. I completely understand we wouldn’t simply want to take the data provided by Poktscan at face value.

Couple of side points:

- Given this thesis is completely valid and has not been discredited, ad hominem attacks are totally unjustified in my opinion.

- IF this thesis is proven to be accurate and the level of disadvantage can be determined quantitatively, we should address it. I have total faith that all of us only want a genuinely fair and level playing field for all node runners, big or small.

For what it is worth: the DAO should be funding a project like the one @steve / Dabble set up on their own to verify the parameters, performance and variance of PIP-22.

I am shocked that Steve had to fund and run this test on his own; it should have accompanied the implementation of these changes, showing actual data from real-world testing.

I for one would like to see collaboration with / help for Steve, overlaid and cross-referenced with cherry picker data, to validate any of these hypotheses being thrown around.

Second, Steve should probably get some kind of grant/support from the DAO as the real world research and testing he is doing is very beneficial and imo should have been part of the original proposal deliverables.

Agreed! Especially if he is digging into cherry picker data to do so as that takes a lot of work

First of all, absolute kudos to you for taking this stab at creating a summary! That is phenomenal. I cannot answer for poktscan as to whether your summary accurately captures their thesis; they will have to answer, and I eagerly await their reply. I was going to ask them to please create an executive summary in the form you have created, free of incendiary words like “unfair” and whatnot, containing just the facts and theses.

Whether or not what you write accurately represents poktscan’s thesis, the thesis you present is pretty straightforward to debunk. The problem is in the third paragraph.

I would rewrite the paragraph as follows:

“This issue is exactly the same for 60k nodes even though (pokt-for-pokt) they earn their rewards over 1/4 the sessions. A high QoS 60k node loses one quarter the relays to lower QoS nodes over the course of a day, but each lost relay causes a 4x loss of potential reward, resulting in a loss of rewards identical to that experienced by four high-QoS 15k nodes. Conversely, a low QoS 60k node gets one quarter the opportunities to scavenge relays, but each scavenged relay results in 4x rewards that must be counted toward the FREN-allowed total daily rewards, and thus has the identical effect to the scavenged relays of four low-QoS 15k nodes.”

I reiterate that I think taking a stab at refining the parameter settings within the cherry picker is a worthwhile spend of time and energy.
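The arithmetic in msa6867’s rewritten paragraph can be checked with a toy calculation. It assumes, for illustration only, that every node is selected into sessions at the same rate regardless of stake, that a high-QoS node loses a fixed number of relays per session, and that each relay pays a base reward times the bin multiple raised to the exponent. All inputs are hypothetical, and the sketch does not test poktscan’s claim that this simplified linear model is itself flawed.

```python
# Toy check: relays and rewards lost to session ramp-up for the same 60k POKT
# staked as 4 x 15k nodes versus 1 x 60k node.
# ASSUMPTIONS: uniform session selection per node, a fixed relay loss per
# session, and reward per relay = base * (bin multiple ** exponent).
from typing import Tuple

FLOOR = 15_000  # assumed POKT per bin


def daily_losses(total_pokt: int, node_stake: int, sessions_per_node: float,
                 relays_lost_per_session: float, base_reward_per_relay: float,
                 exponent: float = 1.0) -> Tuple[float, float]:
    """Return (relays lost per day, POKT lost per day) for one staking layout."""
    nodes = total_pokt // node_stake
    multiple = node_stake // FLOOR
    relays_lost = nodes * sessions_per_node * relays_lost_per_session
    pokt_lost = relays_lost * base_reward_per_relay * multiple ** exponent
    return relays_lost, pokt_lost


if __name__ == "__main__":
    args = dict(sessions_per_node=10, relays_lost_per_session=25, base_reward_per_relay=0.01)
    print("4 x 15k:", daily_losses(60_000, 15_000, **args))
    print("1 x 60k:", daily_losses(60_000, 60_000, **args))
```

Under these assumptions the 15k layout loses four times as many relays but the same amount of POKT, which is the paragraph’s point; the equivalence holds only at exponent = 1, and with an exponent below 1 the 60k layout loses less.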

I totally agree. However I would like to make it clear that this thesis has nothing to do with the proposal which is being presented here and if it were proven to be real, the solution would also have nothing to do with the solution which this proposal is advocating. This discussion / research point should have its own thread rather than being conflated with this one.

This proposal should be withdrawn.

This is subjective. As you know, the cherry picker, the gateway as a whole, and our protocol network are practically coupled. It’s how we resolved our QoS issues; I thought this was understood.

If the thesis is true, then I do not see a problem with using protocol parameters to address this issue. I did voice my concerns in PUP-21 about whether there were any fairness issues when it comes to consolidation, and it was addressed by stating that we could leverage the protocol parameters to address any unfairness, as seen here: PUP-21 Setting parameter values for the PIP-22 new parameter set - #60 by msa6867

Going into it deeper, I did mention that if we set it at 1 and there was any perceived unfairness, we would see pushback from 60K node runners when it came to decreasing it.

These parameters decide whether someone should max out on consolidation or not, especially with linear staking. Bumping it down may not be as easy as it sounds if the majority of the network decides to consolidate to the max. I believe there is less friction in being conservative and bumping it up than in convincing a load of max-consolidated nodes that their rewards are going to be cut if we need to dial back.

I agree with @StephenRoss in general, and I appreciate PoktScan’s time in all of this, but we can’t take what they say as fact unless it’s been peer-reviewed by other entities. Coming up with a solution is going to take engineering time, data validation, and plenty of cognitive overhead. What that means, in general, is that we’re put in a rather shitty position, and the whole network and community are hurt by that.

I understand, but I do agree with @BenVan that this should really be split into two separate proposals, or at least two separate white papers, as I think there are two very distinct issues being raised:

Issue 1: in light of the nuanced manner in which PIP-22 interacts with the cherry picker in an environment of nodes with wildly disparate QoS levels, would a different set of parameter values and/or a different methodology by which PNF sets those values lead to a better-behaved system than the current values and methodology, and if so, what would be the optimal settings and methodology?

Issue 2: is the current incentive structure, which gives a node staked to 60k the same rewards as four nodes staked to 15k, in the best interest of the system (seeing that it leads over time to noderunners almost exclusively compounding their rewards vertically rather than horizontally)? Or is it better to incentivize only the least cost-efficient noderunners to compound vertically while incentivizing other node runners to compound horizontally, and if so, what are the optimal parameter settings to achieve the desired balance between the incentive to compound vertically and the incentive to compound horizontally?

Neither I nor @BenVan nor anyone else is opposed to a peer-reviewed consideration of issue number 1, and I have committed to go back and give the pdf a completely fresh and thorough reading… But I do object to the conflation of the two topics, particularly since the current incentive structure (to make it more cost-effective over time to stack vertically vs. horizontally) was precisely the expressed will of the community in the approved PIP-22 and PUP-21, given the current environment of being “over-provisioned”.

Even at that, I am willing to take a fresh look at issue number two if they really make a good case that it leads to a better-behaved system, but I reiterate that I want to consider the questions separately and not have issue 2 be conflated with or hidden in the subtext of issue 1.

And why do I say the issues are getting conflated? Because the proposed new parameter value (exponent = 0.7) is so far out of balance with anything that would be needed to address issue 1 that it can only be motivated by issue 2. And yet it is being proffered as part of the deep technical discussion of issue 1. A back-of-the-envelope calculation shows that even if poktscan is absolutely correct in every aspect of their technical thesis, a value of exponent = 0.9 is more than sufficient to restore the desired balance, whereas the proposed value of 0.7 tips the scales so far in the other direction as to cause a system imbalance twice as large and twice as unfair as the imbalance/unfairness they are trying to fix. But I admittedly make this statement before giving the pdf the fresh re-read I promised, and perhaps I will discover there some argument I missed the first time for why 0.7 (rather than 0.9 or some other value) is needed to address issue 1.
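For scale, alongside the ±10% figure discussed in this thread, the direct effect of the exponent on one 60k node versus four 15k nodes can be computed under an assumed PIP-22-style weight of bin_multiple ** exponent. This is only an illustration; it does not reproduce the back-of-the-envelope calculation mentioned above, which is not shown in the thread, and it ignores any renormalization of the total mint (a common scaling factor that cancels out of the ratio).

```python
# Relative reward of one 60k node vs. four 15k nodes (same POKT, same relays
# per POKT), ASSUMING weight = bin_multiple ** exponent. A common rescaling of
# the total mint would multiply both layouts equally and cancel in the ratio.
def consolidated_vs_split(exponent: float, bins: int = 4) -> float:
    """Ratio of rewards: one node in the top bin / `bins` nodes in the lowest bin."""
    return bins ** exponent / bins


if __name__ == "__main__":
    for exponent in (1.0, 0.9, 0.7):
        shift = (consolidated_vs_split(exponent) - 1.0) * 100
        print(f"exponent={exponent}: {shift:+.1f}% for the 60k layout")
```

Under this assumption, exponent = 0.9 shifts the 60k layout by roughly -13% relative to four 15k nodes, and exponent = 0.7 by roughly -34%.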