Attributes

- Author(s): @RawthiL
- Parameter: [ServicerStakeFloorMultiplier, ServicerStakeWeightMultiplier, ServicerStakeWeightCeiling, ServicerStakeFloorMultiplierExponent]
- Current Value: [15000000000, variable according to PUP-21, 60000000000, 1]
- New Value: [15000000000, variable, 60000000000, variable (strictly lower than 1)]

Summary

This proposal modifies the current linear stake weight model of PIP-22 to enable a non-linear stake weight model. The mechanism for this change is already included in PIP-22, so this is only a parameter update proposal.

This modification is required to:

- Address a fairness issue that reduces the rewards of base stake nodes.
- Enable a stake compounding strategy that is beneficial for the quality of service of the network.

Abstract

We propose the following changes:

- Set the ServicerStakeFloorMultiplierExponent to a value lower than 1.0. We propose a value of 0.7 (under current network conditions).
- Set the ServicerStakeWeightMultiplier to a new value that is lower than the value obtained from PUP-21. The decrease in this value will be compensated by the decrease of the ServicerStakeFloorMultiplierExponent.
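To make the effect of the exponent concrete, the sketch below compares the relative weight of each stake bin under an exponent of 1.0 and of 0.7. The weighting formula (`bin ** exponent / weight_multiplier`) is our assumed reading of the PIP-22 mechanism and is not spelled out in this proposal; the function names are hypothetical and chosen only for illustration.

```python
# Illustrative sketch only. The weighting formula is an ASSUMED form of the
# PIP-22 stake weighting; it is not stated in this proposal's text.
FLOOR = 15_000_000_000    # ServicerStakeFloorMultiplier (uPOKT)
CEILING = 60_000_000_000  # ServicerStakeWeightCeiling (uPOKT)


def stake_bin(stake: int) -> int:
    """Number of whole FLOOR units in the stake, capped at the ceiling."""
    floored = min(stake - stake % FLOOR, CEILING)
    return floored // FLOOR


def stake_weight(stake: int, exponent: float, weight_multiplier: float) -> float:
    """Assumed reward weight of a node: bin ** exponent / weight_multiplier."""
    return stake_bin(stake) ** exponent / weight_multiplier


def relative_weights(exponent: float) -> list[float]:
    """Weight of bins 1..4 relative to the base bin (the multiplier cancels)."""
    base = stake_weight(FLOOR, exponent, 1.0)
    return [stake_weight(n * FLOOR, exponent, 1.0) / base for n in range(1, 5)]


if __name__ == "__main__":
    for exponent in (1.0, 0.7):
        print(exponent, [round(w, 3) for w in relative_weights(exponent)])
```

Under this assumed formula, an exponent of 1.0 gives the top bin four times the weight of the base bin, while an exponent of 0.7 gives it roughly 2.6 times. A lower exponent therefore narrows the gap between bins, which is why the ServicerStakeWeightMultiplier can also be lowered.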

These changes are needed because the linear model cannot keep inflation constant without reducing the gain of nodes with base stake (those that decided not to compound). This forces nodes to compound their stake, which was not the spirit of PIP-22; we want to correct this effect.

We have analyzed the main mechanisms of the PIP-22 and the effects of the linear stake weight modeling. Our main conclusions are:

-

The linear weighting model penalizes the nodes that do not perform compounding. We estimate that non-compounded nodes are loosing at least ~15% of their rewards.

-

With the linear staking model the only valid strategy is to compound at maximum stake. This creates a de-facto increase of the minimum viable stake.

-

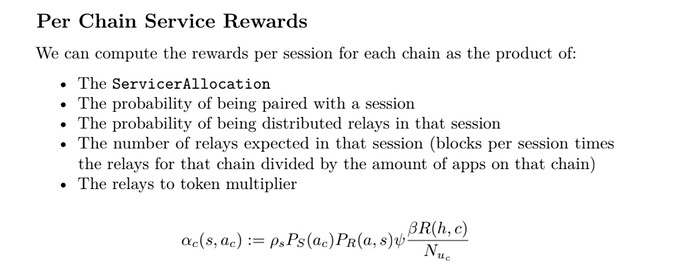

Observing only the variations of the number of sessions by node does not gives enough information on how the number of relays is modified. Hence, the reduction of the rewards for the base stake nodes is not correctly justified in the linear stake weighting model.

-

If the performance of the nodes in each compounding bin is not similar, the calculation of the ServicerStakeFloorMultiplier does not result in constant inflation. Correcting this problem leads to further penalization of nodes in the base stake.
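The last point can be illustrated with a small numerical sketch. It assumes that the ServicerStakeWeightMultiplier is set to the node-weighted average bin (our reading of PUP-21, not stated in this text) and that rewards are linear in the bin. The node counts and relay figures are invented for illustration and are not network data.

```python
# Illustrative sketch only. The multiplier rule below is an ASSUMED reading of
# PUP-21, and every number here is hypothetical.

def average_bin_multiplier(nodes_per_bin: dict[int, int]) -> float:
    """Assumed multiplier: the average bin, weighted by node count."""
    total_nodes = sum(nodes_per_bin.values())
    return sum(b * n for b, n in nodes_per_bin.items()) / total_nodes


def minting_ratio(nodes_per_bin: dict[int, int],
                  relays_per_node: dict[int, float]) -> float:
    """Minting under linear weighting divided by unweighted minting."""
    multiplier = average_bin_multiplier(nodes_per_bin)
    weighted = sum(n * relays_per_node[b] * b / multiplier
                   for b, n in nodes_per_bin.items())
    unweighted = sum(n * relays_per_node[b] for b, n in nodes_per_bin.items())
    return weighted / unweighted


if __name__ == "__main__":
    nodes = {1: 100, 2: 50, 3: 25, 4: 25}                    # hypothetical
    uniform = {1: 1000.0, 2: 1000.0, 3: 1000.0, 4: 1000.0}   # same performance
    skewed = {1: 1000.0, 2: 1000.0, 3: 1000.0, 4: 1200.0}    # top bin works more
    print("uniform:", minting_ratio(nodes, uniform))
    print("skewed: ", minting_ratio(nodes, skewed))
```

With identical performance in every bin the ratio is 1, so minting is unchanged. When the top bin serves more relays per node, the ratio rises above 1, and bringing it back to 1 under a linear model requires a larger multiplier, which lowers the rewards of base stake nodes further.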

Motivation

By implementing a non-linear stake weighting model we expect to:

- Repair a fairness issue where the base staked nodes are being pushed out of the system due to an unjustified reduction of their income.
- Avoid reducing the minting of base stake nodes (and other intermediate bins as well) due to inhomogeneities in the performance of the nodes at different stake levels.
- Create a new stake weighting strategy where going for the highest bin is not always the answer. This new strategy can result in higher QoS nodes compounding less than low QoS nodes, improving the overall network QoS.

Rationale

The justification of the shortcomings of the linear stake weighting model, and of the non-linear stake weighting model, is long and technical. To keep this proposal legible we have created a separate document that justifies the proposed values. Please refer to the PUP-25_Proposal_Document in this repository.

The following index points to the justification of specific claims:

- Reduction of base nodes rewards: Section 4.2
- Observing the number of sessions by node is not sufficient to estimate the increase of relays by node (using a linear model): Section 4.3
- Problems of linear stake due to inhomogeneities in the performance of the nodes at different stake levels: Section 4.4
- Changes in staking strategy that can lead to better network QoS: Section 5.3
- Guidance for setting the new parameters: Section 5

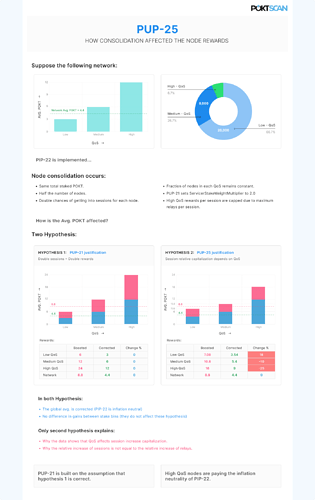

We also created an infographic that explains the core issue this proposal seeks to solve. The infographic shows the problem PUP-21 has keeping rewards constant for all nodes when the QoS affects the capitalization of the increase in the number of expected sessions by node. We show that a small change in the capitalization can lead to big changes in the node gains.

Dissenting Opinions

The “unfairness” problem that's been raised here is only a second order problem.

We don't believe that this is a second order problem, since the observed effects are clearly larger than a 5% variation.

PIP-22 should be transparent to node runners that do not wish to compound. We propose to isolate the effects of compounding stake in servicer nodes from the nodes that do not wish to take part in the compounding game. The same happens with validators: validator staking volumes do not affect the rewards of servicer node runners; they only affect the rewards (selection probability) of other validators.

The network is currently over-provisioned; if the 15 kPOKT nodes disappear, it will be good for the network. This is a non-issue.

Increasing the StakeMinimum of nodes (or removing base stake nodes from the network) was never part of PIP-22. In fact, PIP-22 was an alternative to increasing the StakeMinimum. PIP-22 is now turning out to be an obfuscated way of increasing the minimum stake, not by setting a hard parameter, but by rendering nodes at the StakeMinimum unprofitable.

If the community wishes to reduce the number of nodes or improve network security through higher stakes, then raising the StakeMinimum should be discussed. In any case, that discussion should take place outside the scope of PIP-22.

The model is fair, for each 15 kPOKT invested the expected return (before costs) is the same.

We agree: the unfairness problem is not related to the current return per POKT. The problem comes from an unjustified reduction of the base node rewards due to an overcompensation for the increase in expected sessions by node. In other words, PUP-21 expects an increase in the worked relays that matches the increase in the expected number of sessions.

Analyst(s)

Ramiro Rodríguez Colmeiro and several members of the POKTscan team.

Copyright

Copyright and related rights waived via CC0.