Note: this proposal has been edited with the consent of the author (Andrew Nguyen) because an alternate solution achieved rough consensus in the thread below. You can view the original proposal by clicking the pencil icon in the top right.

Attributes

- Author(s): Andrew Nguyen, Adam Liposky

- Parameter: DAOAllocation

- Current Value: 10%

- New Value: grant the Foundation permission to modify the value according to the schedule detailed below

Summary

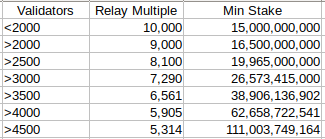

Re-allocate servicer rewards to the DAO as the node count approaches 5,000, to prevent P2P scalability from affecting reliability of service.

Abstract

Even with the impending separation of validators and servicers in RC 0.6.0, which means consensus scalability will no longer be a concern, Tendermint has P2P scalability issues that are likely to degrade service beyond 5,000 nodes. Therefore, to maintain network reliability as we approach 5,000 nodes, we recommend re-allocating servicer rewards to the DAO to limit the incentive to spin up new nodes beyond 5,000.

Motivation

We all strive for Pocket to have as many nodes as possible, but there are technical challenges to be addressed on the road to that vision. These are going to be addressed with future features that will be shared soon, but the recent explosion in node counts is an unexpected trend that we will need to counteract with incentives in order to allow for more time to build these solutions.

Solution

To be clear, the curve would stop at 5,000 nodes, meaning servicer rewards would have a floor of roughly 9%, so that nodes can still break even.
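As a rough illustration of how the DAOAllocation relates to the servicer payout, here is a minimal sketch. It assumes a proposer allocation of 1%, which is inferred from the 10% DAOAllocation and the 89% servicer payout quoted in this proposal rather than stated in it. The function name is hypothetical.

```python
def servicer_share(dao_allocation: float, proposer_allocation: float = 1.0) -> float:
    """Percent of rewards left for servicers after the DAO and proposer cuts.

    proposer_allocation=1.0 is an assumption inferred from the figures in
    this proposal (10% DAO + 89% servicers leaves 1%).
    """
    share = 100.0 - dao_allocation - proposer_allocation
    if share < 0:
        raise ValueError("allocations exceed 100%")
    return share


current = servicer_share(10.0)  # today's DAOAllocation
floor = servicer_share(90.0)    # DAOAllocation that would give the ~9% floor

print(current, floor)
```

Under that assumption, a DAOAllocation near 90% corresponds to the roughly 9% floor described above.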

The Foundation would have the discretion to make the changes to the DAO Allocation when it sees fit, as long as those changes are in line with the curve above, and will not need to communicate these changes until after the fact. These changes will be announced in the Foundation category of the forum and the #dao-hq channel in Discord.

This method of implementation will avoid the collective gaming issues raised by @Garandor.

Rationale

Currently, in the bootstrapping phase of Pocket Network, nodes are receiving very high rewards to incentivize new nodes to join the network. Well, it seems these rewards did a very good job, so now we need to adjust the incentives.

There are many economic knobs that can be turned to curb the growth of nodes:

1. Reducing RelaysToTokenMultiplier

2. Increasing the ProposerAllocation

3. Upping the minimum stake for Validators

4. Increasing the DAOAllocation

1 was the original proposal. Because we’re early in the protocol, some felt that it would be better for the ecosystem as a whole to maintain inflation at current levels so that newcomers have the chance to catch up if they’re effective node runners and the DAO has more funds to reward future contributors with.

One counter-argument to this is that the high inflation is what is incentivizing node runners to run as many nodes as possible, because they want to minimize their dilution.

2 will have little effect, because 1 node with 3X stake = 3 nodes with X stake in terms of block reward. And even if it did, we would be promoting centralization of the network, which is antithetical to our goals.
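The stake equivalence above can be sketched as follows. This assumes that a node's chance of proposing a block is proportional to its stake, which is how the claim in this proposal reads. The stake amounts and the function name are hypothetical.

```python
from fractions import Fraction


def expected_proposer_share(my_stakes, other_stakes):
    """Expected fraction of block rewards for one operator, assuming
    proposer selection is proportional to stake (an assumption)."""
    total = sum(my_stakes) + sum(other_stakes)
    return Fraction(sum(my_stakes), total)


X = 15_000          # hypothetical stake per node
others = [X] * 10   # hypothetical rest of the validator set

one_big = expected_proposer_share([3 * X], others)
three_small = expected_proposer_share([X, X, X], others)

print(one_big, three_small, one_big == three_small)
```

Because only the operator's total stake enters the calculation, splitting it across more nodes does not change the expected block reward.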

3 is untenable because it would force-unstake anyone who is below the new minimum, burning their entire stake. We’d therefore need to have 100% perfect coordination to ensure everyone is able to top up their stake, which is unlikely. The risk that even one node runner would lose their stake is not something we should take lightly.

Which leaves 4, the solution that is most incentive-aligned with our goals, and which has the added benefit of increasing the DAO’s treasury, which it can re-invest in the growth of the ecosystem.

Dissenting Opinions

Even at 9% of rewards going to node runners, I believe it would still be profitable to run a node on AWS if you have multiple nodes.

In the previous month, the average node earned about 35 POKT per day at the 89% payout. If we move that to the maximum point of the curve, where nodes earn a 9% payout, the average node would earn about 3.5 POKT per day, or roughly 105 POKT per month.
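The estimate above is a simple proportional scaling. A minimal sketch, assuming relay volume and node count stay constant and a 30-day month (the function name is hypothetical):

```python
def scaled_daily_reward(current_daily: float,
                        current_payout_pct: float,
                        new_payout_pct: float) -> float:
    """Scale a node's daily reward linearly with the servicer payout percentage."""
    return current_daily * new_payout_pct / current_payout_pct


daily = scaled_daily_reward(35, 89, 9)   # figures quoted in this post
monthly = daily * 30                     # assumes a 30-day month

print(f"{daily:.2f} POKT/day, {monthly:.0f} POKT/month")
# prints "3.54 POKT/day, 106 POKT/month"
```

This lands slightly above the rounded 3.5 POKT per day and 105 POKT per month quoted above, which does not change the argument.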

You can do the math with the current OTC price or another imaginary price, but I believe that would still make an AWS node profitable given that multiple Pocket nodes share the more expensive Ethereum node, even at the maximum point of the curve. At any point above 15% payout, I’m positive it is still profitable to run a cloud node, and it probably would not encourage anyone to shut down, even people paying retail prices to resellers like C0d3r.

Copyright

Copyright and related rights waived via CC0.