For general information / clarification:

The recent node growth explosion has little to do with the crypto market.

It is almost entirely due to the January 28 coin unlock date. These guys are throwing up nodes with little, if any, prior research into the costs vs. returns.

He’s completely right.

And so is Jack here:

My initial comments were incorrect; I apologize and have crossed them out to emphasize this.

It’s clear that at the current pace, we’ll hit the cap somewhere between 10M and 20M daily relays without any change in POKT price.

@Andrew, can you clarify what exactly happens when we hit the cap? I was under the assumption that the protocol would choose the top 5,000 validators by stake weight to participate in consensus, leaving the rest unable to service relays or participate in consensus.

If more nodes are still staked but not participating in consensus, will this create the peering/crash issues you mentioned in the OP, creating this unknown risk?

If that is the case, this will create a natural consolidation of nodes by the largest POKT holders into fewer nodes, effectively raising the minimum stake needed to participate. This will crowd out the lowest-staked nodes, which cannot get past that minimum without purchasing more POKT.

It should result in a drop-off of these minimum-staked nodes, capping the long-tail risk of network-wide peering issues. If I had to guess, the number of individual nodes that haven’t earned enough POKT to, say, double their minimum stake to 30k would be in the low hundreds at most.

That said, if we need to make a change, I believe the cleanest parameter we can change to curb node growth is increasing the proposer allocation, not decreasing the inflation reward. This will create the same consolidation effect described above while significantly slowing down the road to the 5k cap.

The challenge with increasing the proposer reward is that it centralizes the distribution of POKT among the largest holders, instead of keeping the even playing field that exists today for newcomers. I’ve described how POKT currently gets distributed in this Discord comment.

The largest node runners are already receiving a larger proportion of the rewards by running as many as several hundred nodes each. In the current state, newcomers are still gaining market share relative to all other POKT holders, but I believe this change would exacerbate the situation by slowing their rate of gain compared to the largest node runners.

At first blush, I would opt for hitting the cap without any changes so that consolidation occurs naturally. Alternatively, I would opt for the tiered approach Jack recommended, increasing the proposer reward to buy time as we approach the cap.

We’ll need to do some modeling to see how this plays out in practice.

Okay, so there are no certainties here, just things we have observed in RC-0.5.0 testing at 5K+ validators.

Due to P2P bottlenecking (at the RC-0.5.0 default config), blocks could not be produced. Peers hung indefinitely in a permanent chain halt. A few configurations were tried, but the options were certainly not exhausted.

Then we get to the theoretical portion: what will happen beyond 5K validators.

After the cap is hit, the Validators with the lowest stake will become Servicers ONLY, which is an unintended consequence of the legacy Cosmos feature:

max_validators. This is also referred to as ‘on the fly service to consensus separation’. The biggest con of this new state is that we completely lose online guarantees for Servicers who are not Validators (such servicers can be dead indefinitely without consequence). This state is currently being tested for stability in our 6.0 load tests and is a big unknown at this time.

As Jack pointed out, this will have no net effect:

1 node with 3X stake = 3 nodes with X stake in terms of block reward
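A minimal sketch of why the two setups are equivalent, assuming each node's chance of being the block proposer is proportional to its stake. The `proposer_odds` helper and the 15,000 POKT stakes are hypothetical illustrations, not protocol code:

```python
from fractions import Fraction

def proposer_odds(stakes):
    """Chance that each node proposes the next block, assuming selection
    is proportional to stake (a simplifying assumption for illustration)."""
    total = sum(stakes)
    return [Fraction(s, total) for s in stakes]

X = 15_000               # hypothetical stake per small node, in POKT
others = [X] * 1_000     # hypothetical rest of the network

# Operator A consolidates into one node with 3X; operator B runs three nodes with X.
odds_a = proposer_odds([3 * X] + others)[0]
odds_b = sum(proposer_odds([X, X, X] + others)[:3])

print(odds_a, odds_b)  # identical: both are 3X over the same total stake
```

Under this assumption only an operator's total stake matters, so a larger proposer percentage pays the consolidated node and the split nodes the same amount in aggregate.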

This is a bit like Luis’ 2nd approach to which my response is the same:

I agree with the conclusion that increasing the proposer percentage will provide no benefit here.

I also strongly agree with Andrew’s position that (even though we may have good reason to believe that a properly configured 0.5.2.9 network will exceed the tested 5.0 max) simply putting the pedal to the metal to see how fast it goes is not at all an advisable course.

Note: 0.5.0 was tested at 5,000, but the chain nonetheless halted several times and flooded itself at much smaller numbers in real world use.

Note #2: This network has - as far as we are aware - never been attacked intentionally. Just saying…

Hello, here are my 2 uPOKT on the 5000 limit issue:

About the Servicers and the Validators:

- This separation could be a long-term solution to the current issue: it would let us serve additional blockchains and keep increasing decentralisation without risking a consensus failure, while still incentivizing validation of the chain by the largest staking nodes (and we could think of a new way to implement validation in a later phase).

- But it would take too long to implement, considering the urgency and risk of the 5000 limit.

About the multiple levers to decrease the rewards for relays:

- Whether by increasing the Proposer allocation or by decreasing the RelaysToTokensMultiplier, the overall goal of the DAO is to decrease the profitability of Node Runners in order to disincentivize them from spinning up more nodes, targeting the thin line where the network reaches equilibrium (under the 5000 node limit) between the rewards and the number of nodes that share those rewards.

- But some parameters are outside the control of the DAO, mainly the volume of relays sent by the Apps and the price of the POKT token. If those were to increase, we would be back to square one, with profitability rising and the DAO adjusting those rules (Allocation and RelaysToTokensMultiplier) indefinitely to control the profitability of Node Runners and avoid the 5000 node limit.

- The adjustment of those parameters could be automated, similar to the way ETH2 staking APR is controlled, but that would require additional consensus across the community and the DAO.

- If there is a significant lag between the implementation of the new parameters and the network’s reaction (cutting nodes or no longer launching new ones), this approach could prove ineffective at stopping the growth of new nodes in time. And since we can’t proceed by trial and error (which would mean oscillating around a target node count and risking going over 5000 nodes), we would need to react quickly with stricter adjustments, or target a lower node count (4500, for example).

- If we come very close to the 5000 limit (4800, for example), following the same logic, we would need to lower the rewards to a point that forces operators to cut their nodes and reach equilibrium under 5000 nodes, while still watching the relay volume and POKT price, which could increase profitability.

- wPOKT incentives: since decreasing the rewards significantly to avoid network-wide catastrophic failures is on the table, this could bring node runners’ incentives below the level of staking wPOKT on Ethereum for Apps, which would introduce new challenges to this issue. As far as I understand wPOKT, it wouldn’t be beneficial to have an ecosystem where staking wPOKT for Apps (without providing infrastructure) is more profitable than staking POKT to run nodes.
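The lag concern in the list above can be illustrated with a deliberately crude toy model. Everything here is hypothetical and not fitted to network data: each day the node count moves toward a 4,500 target by roughly one fifth of the gap, but the network reacts to the gap as it stood `lag` days earlier.

```python
def simulate(start, target, divisor, lag, days):
    """Toy model, not network data: each day the node count changes by
    (target - observed) // divisor, where `observed` is the count `lag`
    days earlier, i.e. operators react to stale information."""
    history = [start] * (lag + 1)  # assume a flat count before day 0
    for _ in range(days):
        observed = history[-1 - lag]
        history.append(history[-1] + (target - observed) // divisor)
    return history[lag:]  # day 0 onward

no_lag = simulate(start=2_000, target=4_500, divisor=5, lag=0, days=30)
lagged = simulate(start=2_000, target=4_500, divisor=5, lag=5, days=30)

print(max(no_lag))  # stays at or below the 4,500 target
print(max(lagged))  # climbs past the 5,000 cap before coming back down
```

Even with a target 500 nodes below the cap, a few days of reaction delay is enough to overshoot, which is the argument for stricter or earlier adjustments.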

A longer-term solution could be to increase the minimum stake required to run Pocket nodes, but this solution brings some hurdles as well:

- This would inherently reduce the number of nodes that can be started on the network.

- Single-node runners would have to buy more tokens to continue participating; multi-node runners would probably consolidate nodes to reach the minimum stake.

- As Michael pointed out, some node runners operating at the current minimum stake (15,000 POKT + epsilon) might not react quickly enough to get out of staking (by unstaking, or by restaking at a higher value) and could find themselves force-unstaked with their stake burned. This would be catastrophic for those participants.

- In any case, they would have to unstake and then restake at a higher value to keep participating in the network, which, with current parameters, would involve a 21-day unstaking period. If this behaviour were broadly adopted, could it cause congestion, a significant drop in the number of nodes, and a lower relay success rate? It would also require a lot of management, communication, and planning from node runners to avoid, at worst, being force-unstaked, or being left out of consensus and relay participation.

To conclude, I feel that manipulating parameters to reduce the incentive to start new nodes is the simpler approach in the short term but would be a pain to manage in the long run, while increasing the minimum stake could be a solution in the long run but would be very hard and dangerous to implement without harming some participants of the network.

I would favour manipulating parameters to reduce incentives in the short term, and hope that the separation between Servicers and Validators arrives soon enough that we can stop manipulating those incentives and bring economic stability to the network and to node runners.

Hope this helps the discussion!

In lobbying for the little guy a bit - and continuing to preach the gospel of decentralization - I’m opposed to increasing minimum stake to curb node numbers as it would significantly centralize the network. Smaller operators would simply leave.

Instead of making entry into the network harder, I would argue that curbing node numbers should be done primarily by reducing the profitability of running nodes, i.e. reducing the inflation reward per relay paid to the node operator. Since simply reducing overall inflation doesn’t help reduce profitability, it should be done by increasing the DAO allocation percentage depending on how close the node count gets to the limit, possibly up to some absurd level like 80% DAO, 1% proposer, 19% service node.

This reduces node profit, forcing inefficient operators with low profitability to shut down first, and has a positive effect on the network by funding future projects: win-win.

Operators that stay online will simply donate a larger share of their work to the development of the network, which will ultimately benefit everyone involved. It is also simple to change this parameter back in case more nodes are needed.

@Garandor & @arnaud-skillz. Thank you for the insightful feedback.

I generally agree with the steps outlined by Arnaud and Garandor. To Garandor’s point, I have concerns about raising the minimum stake for nodes. I think it undercuts decentralization and the bottom end of the user market. Plus, the organizational hurdles such a move would cause on short notice would be hard to digest.

As others suggest, the separation between servicers and validators seems to be the best solution in the mid to long-term. I would support this move. I’m also suggesting one other technical (non-economic) fix below.

Through my own analysis and modeling, a multi-pronged approach seems to yield the best results in the most circumstances in the short term, until a technical fix can be implemented. I’ll release the model after some additional feedback, but the net result is three suggestions:

- Reduce the RelaysToTokensMultiplier by as much as 60% - my models indicate that smaller reductions don’t stem the tide in the long term or with significant POKT price changes.

- Increase the proposer allocation significantly to somewhere in the range of 25% - 45%. This encourages consolidation among node runners with large stakes.

- The final, and most technical, suggestion is changing the way proposers are selected. Currently, this is calculated as round down(node stake / avg node stake). Changing proposer selection from mean to median would grant many more tickets to large node stakes without needing to change the proposer reward %. It would also lead to consolidation for large node runners. If my recent numbers are correct, the reference value would change from an average (unjailed) node stake of about 40K POKT to a median of about 15K POKT. Large node stakes would then benefit greatly from consolidation, especially with suggestion 2 in place.
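A quick sketch of the ticket arithmetic in the third suggestion, using the post's approximate figures (~40K POKT average, ~15K POKT median) as reference values. The `tickets` helper and the example stakes are hypothetical; this is not the protocol's actual selection code:

```python
def tickets(node_stake, reference_stake):
    """Proposer tickets per the post's description: round down(stake / reference)."""
    return node_stake // reference_stake

MEAN_STAKE = 40_000    # approximate average unjailed stake cited in the post
MEDIAN_STAKE = 15_000  # approximate median stake cited in the post

# Hypothetical consolidated node stakes, in POKT.
for stake in (150_000, 600_000, 1_200_000):
    print(stake, tickets(stake, MEAN_STAKE), tickets(stake, MEDIAN_STAKE))
```

Swapping the denominator from ~40K to ~15K multiplies a large node's tickets by roughly 40/15 (about 2.7x before rounding), which is the consolidation incentive described above.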

Overall, the most adaptable strategy (highest probability of providing stability in all scenarios) seems to be doing all three. That said, we should be cognizant of the effects of these for node runners of all sizes.

More to come.

@adam (pikpokt)

Point #1: Perhaps yes, perhaps no. I’ve had plenty of interaction with the parties putting up these new nodes. Yes, they “want” to make a profit, but many of them are not basing the decision on current rewards. They are spinning up these nodes because their coins unlocked on Jan 28th, and they want to run a bunch of nodes so they are positioned to take advantage of an expected increase in relays and to offset the percentage loss caused by inflation. They have ZERO capital cost to spin up these nodes, only operating expenses to consider.

Point #2: It took me a while to duplicate your numbers, but they are correct. Nonetheless, I strongly disagree with this method of adjusting incentives, as it is extremely unfair to those who bought or plan to buy POKT to run a node. The Whale Wallets have huge stakes because they have no choice: they were intentionally limited to 3 nodes to stop them from owning the network from day one. If you bump the proposer payout to the level you are recommending, they will drive all the small-stake nodes out of business.

Point #3: I can duplicate this math as well, and, in my opinion, it is far worse in terms of creating unhealthy incentives. I can see fun games like spinning up low-stake nodes just to drive the median down so that you get a higher multiplier. That only works if you are a whale, though. In any event, this would be a consensus-breaking change, so it cannot be addressed in the short term.

My conclusion at this time is that exponentially increasing the DAO allocation as we approach 5,000 is the best option that has been presented so far.

@BenVan Your points are well taken. I believe there’s a balance we need to strike in the short term until a long-term fix is in place. We may need to accept non-ideal economic incentives in the near term to secure the long-term existence of Pocket Network. My opinion is that once the long-term fix is completed, we can roll back any changes the DAO isn’t in favor of.

Can you explain why you believe this is superior to decreasing the RelaysToTokensMultiplier? Increasing the DAO allocation keeps inflation at the same rate, assuming relays stay at the same level or increase, meaning that node runners/apps would be diluted for the benefit of the DAO (which may be a good thing). Decreasing the RelaysToTokensMultiplier instead stems the increase in the total supply of POKT, minimizing dilution, which also minimizes the number of nodes Whales need to run to keep pace with inflation.

I think it boils down to this notion, which I am still trying to understand: presuming that relays per node remain similar or increase (due to network relay growth or nodes falling off), rewarding less per node via the RelaysToTokensMultiplier change appears to have the desired effect of reducing node profitability. My point is that the net effect remains the same, with the important exception that dilution is decreased.
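A minimal sketch of the comparison above, assuming a fixed relay count and the current 89% servicer / 10% DAO / 1% proposer split. The relay count and multiplier value are hypothetical placeholders, not live network parameters:

```python
from fractions import Fraction as F

def split(relays, rttm, servicer, proposer):
    """Mint relays * rttm tokens and split them; the DAO receives the remainder."""
    minted = relays * rttm
    return {
        "minted": minted,
        "servicer": minted * servicer,
        "proposer": minted * proposer,
        "dao": minted * (1 - servicer - proposer),
    }

RELAYS = 1_000_000  # hypothetical relays in a period
RTTM = 10_000       # hypothetical RelaysToTokensMultiplier (uPOKT per relay)

baseline = split(RELAYS, RTTM, F(89, 100), F(1, 100))
# Option A: halve the servicer share and give the difference to the DAO.
more_dao = split(RELAYS, RTTM, F(89, 200), F(1, 100))
# Option B: halve the multiplier and keep the allocation percentages.
lower_rttm = split(RELAYS, RTTM // 2, F(89, 100), F(1, 100))

for name, s in (("baseline", baseline), ("more_dao", more_dao), ("lower_rttm", lower_rttm)):
    print(name, s["minted"], float(s["servicer"]), float(s["dao"]))
```

Servicers earn the same under both options; the difference is that Option A still mints the full amount (funding the DAO and diluting holders), while Option B mints half as much.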


To BenVan’s point, node runners take a very long-term view of the payback period rather than evaluating only month-to-month profitability, but a drastic change to this number should lengthen that payback period, putting pressure on whales and small node runners alike.

Re: the median comments: this isn’t a near-term fix, but I think it’s more adaptable than it initially appears. Averages are sensitive to extremes, whereas medians largely ignore them. There are already games with the average that aren’t being considered. If someone were to accumulate a massive amount of POKT, say 30M POKT, and stake it in a single node, they’d drastically increase the average stake, massively decreasing the probability that even other whales get to produce the block. Based on my numbers, adding one node at this stake amount would raise the average stake ~1.5x, decreasing everyone else’s odds of producing the block by ~33%. I’ll admit this is an extreme scenario, but it illustrates the point.

In the scenario you mention, trying to game the system by creating many small node stakes, the median might decrease from 15,009 (according to my math on mid-Feb numbers) to 15,001, which is a minute change compared to what might happen with an average. The effort it would take to make this work would be prohibitive.
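The outlier argument can be checked with a toy stake distribution. The 1,497-node network below is hypothetical, chosen only so that its mean is exactly 40K and its median 15K, like the figures quoted in the thread; it is not real network data:

```python
from statistics import mean, median

# Hypothetical network: 897 nodes at the 15K minimum, 600 larger nodes at 77,375.
stakes = [15_000] * 897 + [77_375] * 600
with_whale = stakes + [30_000_000]  # one node staking 30M POKT

print(mean(stakes), median(stakes))          # mean 40,000, median 15,000
print(mean(with_whale), median(with_whale))  # mean jumps to 60,000, median unchanged

# Under mean-based weighting, every other node's weight scales by old/new mean.
print(1 - mean(stakes) / mean(with_whale))   # roughly a one-third reduction
```

The gaming scenario runs the other way: piling on minimum-stake nodes can only pull the median toward the 15K floor, so it has very little room to move.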

That said, I could get behind @Garandor’s proposal of scaling up the proportion of the DAO allocation as nodes near 5,000 as it seems to have the least amount of downside.

It feels like the two main objectives are, in order of priority:

- Prevent a total collapse of the network;

- Keep the community happy.

Regarding preventing a total collapse of the network

I think this is a sensible approach. Do we have a proposal for a scaled approach at certain milestones, e.g. rising exponentially every 250 nodes from 2,500 upwards? I know that @JackALaing and @BenVan have mentioned this.

Do we have numbers on the current health of the nodes staked in the network, i.e. what proportion are jailed versus unjailed? I realise that there are over 2k nodes staked in the network at the moment, but I’m not clear on how many are actually unjailed and servicing relays effectively.

This may be a distraction from the main discussion, but I also worry about a tail risk whereby more professional node operators start to shut down operations when node running becomes less profitable, while node runners who do not fully understand the economics stay up and running, causing additional network issues due to poor service for applications.

Or is it naive to think that wPOKT will attract those who are not willing to invest the time into running nodes properly on Pocket?

On the last point: keeping the community happy

I think this point is important.

We should strive to support every node runner from big to small and I agree that we shouldn’t increase the barriers to entry in terms of the minimum stake at the moment.

However, to push back against the comment below:

Raising the proposer allocation from 1% to something higher could make sense as part of a package, if combined with education for new node runners about the benefits of staking more than 15k POKT per node to increase their chances of earning the validator portion of the relay reward.

It doesn’t need to be in the range of 25-45%, but something in the range of 5-15% could be sufficient to raise the minimum staked per node across the network.

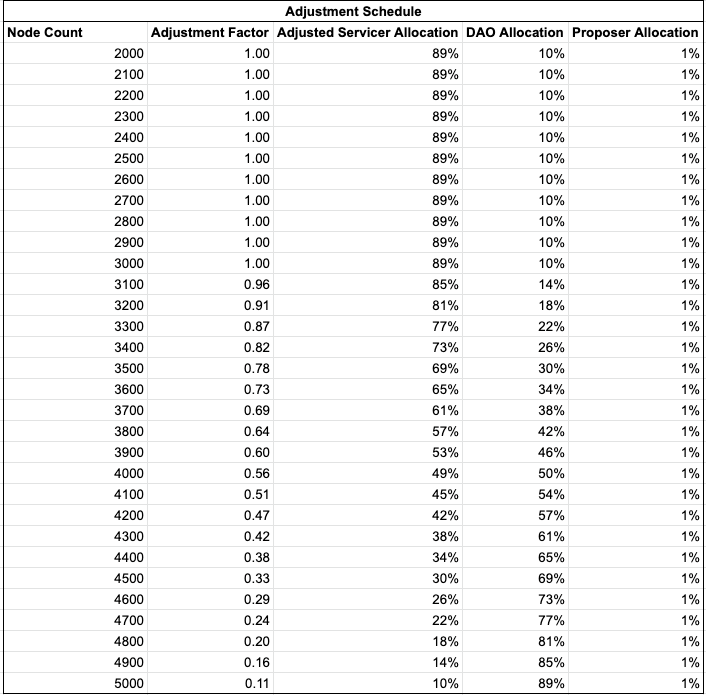

A lot of good suggestions have been made thus far. In order to drive the conversation forward, I’m suggesting two adjustment schedules - ways to adjust the servicer allocation based on the node count.

Based on the suggestion to allocate servicer allocation to the DAO in increasing amounts as we hit node growth milestones, I’ve created a simple schedule and calculator that could inform adjustments moving forward:

The logic behind the schedule is to dramatically shift servicer allocation to the DAO allocation as node count increases, culminating in a flip in allocations between node servicers and the DAO as the count reaches 5000.

At the extremes, this schedule could be adjusted to 0% allocation to servicers at 5000 (as opposed to the 10% shown above) which would act as an option of last resort. Demonetizing node servicing should be avoided in my opinion and I believe the suggested schedule would properly avoid this scenario.

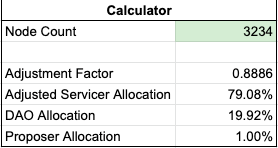

Although crude, implementation could be handled manually, adjusting the values daily or weekly according to the schedule. The node amount would simply be entered into the calculator and it would spit out the appropriate allocation amounts.

The calculator is illustrated below:

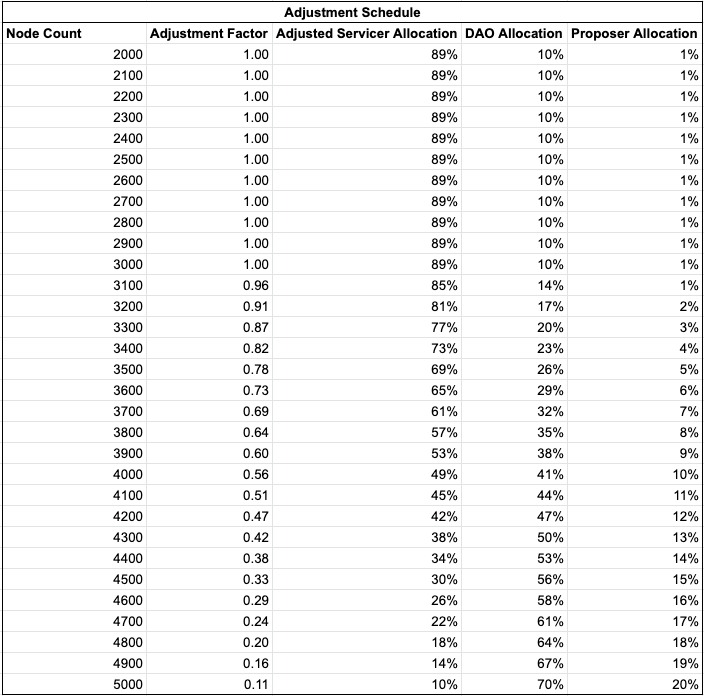

As an alternative, I’ve created another derivative of this schedule that splits the servicer allocation 75/25 between the DAO and proposers for discussion purposes:

You can find the sources for these calculations here. If you’d like to create your own version, make a copy which will give you edit rights.

Thanks for pushing this forward Adam.

Could you explain how the Adjustment Factor column is being calculated? Is it just creating linear adjustments between 89% and 10%?

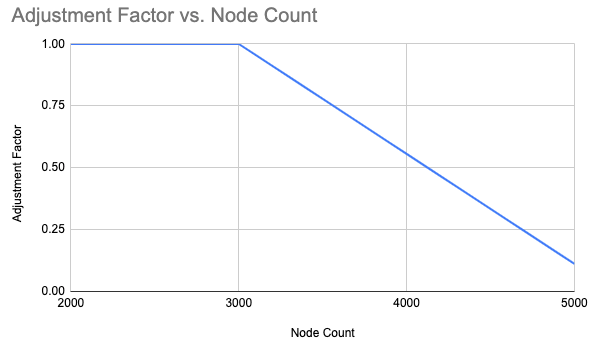

Yes, it’s a simple linear decrease based on the number of nodes being run at any given time.

We could get more complicated than this, but this is nice and easy for node runners to understand and I think it does the job.
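A minimal sketch of the linear calculator as described: the servicer allocation falls linearly from 89% to 10% while the DAO allocation rises from 10% to 89%, flipping at 5,000 nodes, with the proposer held at 1%. The node count where the ramp begins was in the spreadsheet and is not stated in the thread, so `start=2_000` is a hypothetical placeholder:

```python
from fractions import Fraction

def linear_allocations(nodes, start=2_000, cap=5_000,
                       servicer_max=89, servicer_min=10, proposer=1):
    """Return (servicer %, DAO %, proposer %) for a given node count.

    Below `start` the current split applies; at or above `cap` the servicer
    and DAO shares are flipped. `start` is a hypothetical placeholder."""
    progress = Fraction(min(max(nodes - start, 0), cap - start), cap - start)
    servicer = servicer_max - (servicer_max - servicer_min) * progress
    dao = 100 - proposer - servicer
    return servicer, dao, proposer

for n in (2_000, 3_500, 4_500, 5_000):
    s, d, p = linear_allocations(n)
    print(n, float(s), float(d), p)
```

Using exact fractions keeps the published percentages reproducible, so anyone entering the same node count gets the same allocation.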

This opens the door to collective gaming unless the readjustment time is kept random and secret.

Node runners could shut down their nodes before the snapshot and fire them up right after to enjoy a week of higher rewards than they should get.

Also, not announcing it goes against transparency, and I can already see Discord blowing up with questions à la “why reward so low :((”

Outside of a controller smart contract, I don’t see an easy way to do this transparently, though, so secret random readjustments that are communicated after the fact are probably the lowest-hanging fruit.

Also, I would argue for an exponential curve, as we don’t want to disincentivize 3000 or even 4000 nodes; they just shouldn’t hit the limit, so the “punishment” should increase the closer we get to it.
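A minimal sketch of the exponential alternative, assuming the same 89% to 10% servicer range and a hypothetical 2,000-node starting point. `steepness` is a made-up tuning knob, and the normalized curve (exp(k*x) - 1) / (exp(k) - 1) is just one convenient way to get a ramp that stays gentle at 3,000 to 4,000 nodes and bites hard near 5,000:

```python
import math

def exponential_servicer_pct(nodes, start=2_000, cap=5_000,
                             servicer_max=89.0, servicer_min=10.0, steepness=5.0):
    """Servicer allocation (%) that stays near servicer_max for most of the
    range and drops steeply as `nodes` approaches `cap`.

    x is the fraction of the way from `start` to `cap` (clamped to [0, 1]);
    the ramp (e^(k*x) - 1) / (e^k - 1) rises from 0 to 1 and is convex."""
    x = min(max((nodes - start) / (cap - start), 0.0), 1.0)
    ramp = math.expm1(steepness * x) / math.expm1(steepness)
    return servicer_max - (servicer_max - servicer_min) * ramp

def linear_servicer_pct(nodes, start=2_000, cap=5_000,
                        servicer_max=89.0, servicer_min=10.0):
    """The linear schedule over the same range, for comparison."""
    x = min(max((nodes - start) / (cap - start), 0.0), 1.0)
    return servicer_max - (servicer_max - servicer_min) * x

for n in (3_000, 4_000, 4_500, 4_900):
    print(n, round(linear_servicer_pct(n), 1), round(exponential_servicer_pct(n), 1))
```

Because the curve is convex, moderate node counts see only a modest cut while the reduction accelerates near the cap; a larger `steepness` pushes more of the cut toward 5,000.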

Can you clarify if these non-consensus servicers are still able to submit claims and proofs for their relays?

Yes, this property of ‘servicers’ will remain completely intact.

Okay, so here’s the latest:

After running load/functional tests on RC-0.5.2.9, we identified that servicer and validator separation did NOT work out of the box.

With a small modification in the incoming RC-0.6.0 release, we were able to successfully separate Servicers and Validators.

Pros:

- We can limit the # of Validators until the P2P scalability of Tendermint improves.

- Servicers can operate independently without the burden of signing blocks.

Cons:

- If max_validators is exceeded, servicers cannot be jailed or punished for being offline. This degradation of service can be quite detrimental to Applications. NOTE: Upcoming updates do address offline protection; see the Timing section below.

- Since the implementation still uses Tendermint P2P, the scalability problems for non-validators still exist, meaning that at some (unknown) capacity, servicers will have difficulty maintaining service.

Timing:

Con 1 is sufficient to make a case for delaying any lowering of max_validators. By Q3 2021, Pocket Network expects to have the solution for Con 1 implemented.

If the # of Validators exceeds 5K before Q3 2021, the separation will happen naturally, introducing Con 1 for any ‘new’ Validators.

After Con 1 is solved, I believe reducing the # of Validators significantly makes sense to ensure consensus is prioritized.

Thanks, Andrew.

With this in mind, I think it still makes sense to control the number of Servicer Nodes, as we still don’t know what count might jeopardize stability. Additionally, we lose the all-important off-ramp for these nodes that comes in the form of jailing, which is the main vector for leaving the network.

I suggest coming up with a new Max Validator cap somewhere under the 5000 validator threshold, along with a complementary rewards reduction. That said, it’s no longer mission-critical that we keep the total node count under 5K; we may just end up with degradation of service if we exceed it.

@Garandor I’ll see what I can come up with that is exponential, to your earlier point.