OK. In that case we can lump the data from the two server locations together and double the sample size (bin 4 = 30 nodes rather than two separate samples of 15 nodes), which reduces random variation by about 30%.
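The ~30% figure follows from the usual 1/√n scaling of the standard error. A minimal sketch, assuming the per-node measurements are independent and identically distributed (the helper name is hypothetical):

```python
import math


def se_reduction(n_before: int, n_after: int) -> float:
    """Fractional reduction in standard error when a sample grows from
    n_before to n_after, assuming independent samples (SE ~ 1/sqrt(n))."""
    return 1.0 - math.sqrt(n_before / n_after)


reduction = se_reduction(15, 30)
print(f"{reduction:.1%}")  # 29.3%
```

If the two server locations are correlated (same provider, same software), the effective gain will be smaller than this idealized figure.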

I received data from PoktScan on the dabble experiment and provided some private feedback on it. I will now switch my attention back to PUP-25 and the pdf. Hopefully I will have some feedback by Monday.

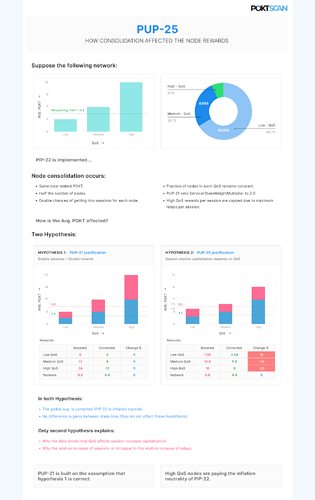

We have created an infographic to try to explain where the issue lies. As I said before, the problem for PIP-22 is keeping rewards constant with respect to the rewards before the reduction in node count.

The image shows an example of how a small change in the way QoS affects the capitalization of the node reduction can lead to large changes in node gains.

If either hypothesis is true, it is not caused by linear stake weight and cannot be cured by non-linear stake weight.

Please submit a PIP, PUP, or PEP that addresses QoS (i.e., the Cherry Picker).

This proposal should be withdrawn.

Yes, the problem is not caused by linear stake weight by itself. It is the effect of the interaction of linear stake weight and the inflation constraints of PIP-22.

If linear stake weight cannot fulfill the constraints of PIP-22 then it should not be used.

And yes, it can be cured by non-linear stake weight, as we have shown before. I don’t understand why you say it cannot.

Even if I had cause to believe that your data set were sufficient in size and appropriate in scope (which I do not), and even if I agreed with your conclusions, I would nonetheless totally disagree with the proposed solution.

Your logic appears to read like:

- more “bad” nodes are in bucket “A” than in bucket “B”

- therefore we should punish bucket “A” and reward bucket “B”

This is an inappropriate solution which, if taken, would not lead to the results you seek. It would simply cause more nodes (good and bad) to move to bucket “B”.

If this issue exists, it is a QoS/Cherry Picker issue, and that is where it should be addressed.

This is not a problem of stake buckets. I made this clear in the document and in the infographic. The stake buckets were never used to justify the unfairness; they were mentioned as a secondary problem. I almost regret mentioning them, since the focus was put on that part and the rest was ignored.

Forget the stake weight buckets, they are not the root issue.

The issue is that the nodes in the Pocket Network have a large QoS dispersion. Moreover, the majority of the nodes had bad QoS. This is what creates the problem. With such non-uniform QoS and a non-linear element such as the Cherry Picker, it is naive to think that a linear model of the sessions-to-relays interaction can fit.

I agree with you: this is a QoS problem. If all nodes in the network had similarly high QoS, this problem would disappear (or could be ignored). We are also trying to achieve this, with concrete steps. I believe that we can achieve it with an open and collaborative community.

I also agree that this is a QoS issue.

It should be researched and discussed as a QoS issue.

The fact that you admit it has nothing to do with buckets does not change the fact that this proposal seeks to drastically modify the bucket payment system as a “concrete step”.

Please propose something that addresses the problem and incentivizes people to improve their QoS rather than just arbitrarily moving money from bucket A to bucket B.

Changing a single parameter that was designed to be changed is not arbitrary. In fact, changing this single parameter does both things: it reduces the issue that we are presenting and it helps improve the overall QoS of the network (see section 5.3 of the document). The money moving from one bucket to another is a second-order effect.

If you are also seeing the QoS problem, you could help the Pocket Network fix it. I look forward to seeing a PIP/PEP/PUP/contribution that helps fix this issue. I will gladly withdraw my proposal in favor of it.

I do not find this infographic helpful. It (1) just shows some randomly made-up numbers in the right column, (2) falsely claims that there are no alternative hypotheses to the “pup-25 justification hypothesis” that can explain the data, and (3) brings up a thesis that I cannot find discussed anywhere in the white paper, namely “High QoS nodes are paying the inflation neutrality of PIP-22.” Admittedly, this thesis is the same one that @StephenRoss summarized a while back, so I must be missing the right document to review? I have been reviewing the document titled “Non-Linear Servicer Stake Weighting” under the file name “PUP_25_Proposal_Document.pdf”. Is there another document I’m supposed to be looking at?

Assuming this is the right document, can you please point to where this thesis is discussed?

@RawthiL, I have finished reviewing the 28-page pdf (for the second time). Would you be willing to recompile the data in section 4 in a format that I would find more useful?

(1) Can you please present <R_p_c> weighted by R_tot_c over the averaging period rather than unweighted? Rationale: the unweighted average gives undue weight to the small “nuisance” sessions that earn a fraction of a POKT, rather than to the less frequent “home-run” sessions that return the bulk of rewards. These small-relay sessions show exaggerated cherry picker transition-phase effects compared to the large-relay sessions while contributing only a tiny amount to daily rewards. And as you know, weighted <R_p_c> is simply <R_c>/R_tot_c, so it is much easier to calculate… Node runners care much more about this value than about the unweighted sum, which is really only useful for analyzing the cherry picker.
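The identity behind point (1) is general: averaging per-period ratios r/T with weights T gives Σr/ΣT, i.e. the ratio of the totals. A minimal sketch with made-up numbers (the function names and values are hypothetical, not taken from the PoktScan data; "period" can be a day or a session):

```python
def unweighted_mean_share(provider_relays, total_relays):
    """Plain average of the per-period shares R_c / R_tot_c."""
    shares = [r / t for r, t in zip(provider_relays, total_relays)]
    return sum(shares) / len(shares)


def weighted_mean_share(provider_relays, total_relays):
    """Average of the per-period shares, each weighted by that period's R_tot_c."""
    num = sum((r / t) * t for r, t in zip(provider_relays, total_relays))
    return num / sum(total_relays)


# Hypothetical per-period values for one provider on one chain (not real data).
r_c = [10, 500, 20]            # provider relays per period
r_tot_c = [100, 10_000, 200]   # network relays per period on that chain

print(unweighted_mean_share(r_c, r_tot_c))  # dominated by the two small periods
print(weighted_mean_share(r_c, r_tot_c))    # dominated by the large period
print(sum(r_c) / sum(r_tot_c))              # equals the weighted mean
```

The small periods pull the unweighted mean up to about 0.083, while the relay-weighted mean (about 0.051) tracks the period where most relays, and therefore most rewards, occurred.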

(2) Can you please provide <S_c> summed over all 8 chains and relay-weighted <R_p> over all 8 chains? Rationale: In all my attempts to analyze relay and session data, I found it a futile exercise to try to limit the data to the “best” chain… the loss of data from focusing on a single chain always ends up giving worse statistical significance than using all the available data. In the appendix you come close to providing this data, but unfortunately you provide unweighted averages, which are rather meaningless. Weighting by total relays collapses the entire relay percentage equation down to <R_p> = <R.>/R_tot, so the work is much simplified and, not too coincidentally, this is the only number that node runners really care about.

(3) You indicate you analyzed 13 providers, but only 10 are included in the data. I do not know what happened to the other 3 providers. Can you please provide results that cover all 13 providers?

Results akin to figures 2-4 for the above would be great. If you also want to present the equivalent of figure 4 in mean/std format, that would be welcome too.

(4) You can dispense with presenting any “coefficients of determination” massaging of the data, as this takes a big step backward in providing meaning. Rationale: any provider that “overshoots” the nominal value of relays/session=1 in figure 4 (see the one provider sitting up at 1.9) gets treated to the same low D-value as “undershooting” would earn, thus I have lost information between figure 4 and 5 since in figure 5 you can’t tell if a value is due to overshooting or undershooting…

I appreciate your willingness to re-present the available data. I doubt it will change the narrative much, but it should go a long way to cleaning up statistical significance.

The number of nodes in each category corresponds to the pre-PIP-22 network. The average POKT values are arbitrary; the numbers are meant to illustrate the scenario without added complexity. I can change the scale to reflect actual averages, but it won’t have any effect (as you already know).

I do not claim that there are no other hypotheses that could explain the data; I only show the two that were proposed.

Please share and justify other hypotheses that can explain these effects.

As I have repeated many times, this is not included in the document because we do not have solid evidence for it.

We created the infographic since the community was interested in possible explanations of the behavior that we are showing. It is a simplistic explanation of the problems that we observe.

I don’t know what you are referring to. Please share this summary so I can read it.

Thanks, I believe this is a compliment. I would love to see this kind of document describing the rationale behind important changes in the network. Going through forum posts, Telegram/Discord chats and recorded oral presentations is neither an easy nor a fail-proof task.

Are you referring to equation 11?

If I understand correctly, you want R_p_c to be the total relays done in a given day and chain divided by the total number of relays on that day?

You are asking to:

- Produce a value S (no _c, since it won’t refer to a given chain) created by summing all the sessions done in a given day across all chains.

- Produce a value for R_p equal to the sum of all relays in a day, across all chains, divided by the total number of relays done in that day, across all chains.
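The two pooled quantities can be sketched for a single day as follows. This is a hypothetical illustration with invented chain names and numbers; in particular, whether the denominator should include only the chains a provider serves or every chain is an open assumption, since not every provider serves every chain, so the sketch exposes it as a flag:

```python
# Hypothetical per-chain data for one provider on one day (illustrative only).
# Chains the provider does not serve are simply absent from its dicts.
provider_sessions = {"chain_a": 40, "chain_b": 25}
provider_relays = {"chain_a": 12_000, "chain_b": 3_000}
network_relays = {"chain_a": 400_000, "chain_b": 150_000, "chain_c": 250_000}


def pooled_day_metrics(sessions, relays, net_relays, served_only=True):
    """Return (S, R_p) for one day, pooled across chains.

    served_only=True divides by network relays on the provider's chains only;
    served_only=False divides by network relays on all chains.
    """
    s_total = sum(sessions.values())
    chains = relays.keys() if served_only else net_relays.keys()
    denom = sum(net_relays[c] for c in chains)
    return s_total, sum(relays.values()) / denom


s, rp_served = pooled_day_metrics(provider_sessions, provider_relays, network_relays)
_, rp_all = pooled_day_metrics(
    provider_sessions, provider_relays, network_relays, served_only=False
)
print(s, rp_served, rp_all)
```

The two denominators give noticeably different R_p values for the same provider, which is one concrete way the chain-coverage issue affects a pooled metric.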

Not all providers have all the chains. This is another reason why by-chain analysis is more robust. In the provided data you will find that there are 13 unique provider tags.

Is this referring to the new metrics that you asked for in points (1) and (2)?

I don’t understand what you mean by “massaging”. Can you elaborate?

The coefficient does not measure under- or overshooting. As you know, a linear behavior can produce large overshoots. The relation A*9 = B is linear, with a ×9 “overshoot”. The same happens with A*0.01 = B, a ×0.01 “undershoot”. In both cases the coefficient of determination is close to 1.
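A quick check of this point, assuming the coefficient of determination is computed for an ordinary least-squares line y = a·x + b (the pdf's exact fitting procedure is not reproduced here, and the data below are synthetic):

```python
def r_squared(x, y):
    """Coefficient of determination of an ordinary least-squares line y = a*x + b."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    a = sxy / sxx          # fitted slope
    b = my - a * mx        # fitted intercept
    ss_res = sum((yi - (a * xi + b)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - my) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


x = [float(i) for i in range(1, 21)]
overshoot = [9 * xi for xi in x]      # B = 9 * A
undershoot = [0.01 * xi for xi in x]  # B = 0.01 * A

print(r_squared(x, overshoot), r_squared(x, undershoot))  # both ~1.0
```

Because R² only measures how well the fitted line explains the variance, the slope (over- vs undershoot) has to be reported separately if that information is wanted.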

The objective of using the determination coefficient was not to determine whether there is under- or overshooting. It is used to show that the linear model does not fit the data.

I will try to provide this but I think that it can be done with the data provided in the repository.

I have doubts about the significance of the numbers you asked for in point (2). Mixing the chains is not a good idea, and the stability of many of the other chains was rather lacking.

Here is the summary that @StephenRoss provided… I don’t remember you ever engaging with it to see whether it correctly captured your thesis (which, as you just pointed out, is not a thesis in the pdf but seems close to a point you bring up elsewhere, including in the infographic).

Regarding what data presentation I was requesting, I will DM you so as not to add unnecessarily to this thread.

I have done this before; I will do so again here.

Hypothesis One:

QoS (latency) improved significantly over the course of the summer, leading to greater competition within the cherry picker for relays, and thus to average relays per session going down over the same period as, and coincident with, the average number of sessions per day going up. While the QoS shakeup and improvement was most likely largely precipitated by the approval of PIP-22, this effect is completely independent of the actual PIP-22 turn-on and will be evident in the data long before the Aug 29 turn-on date. This decrease in cherry-picker selection probability hits all QoS levels in exactly the same proportion, resulting in no fairness imbalance between high/med/low QoS classes. Note that this is completely agnostic to bins, because all of this takes place before there were any bins besides 15k.

Hypothesis Two:

Avg QoS is better among node runners who consolidate to 30k, 45k, or 60k nodes than the avg QoS of 15k nodes. This leads to higher cherry picker probabilities for all node runners, independent of bin and QoS level, compared to what the cherry picker probabilities would have been if all the pokt staked to 30k, 45k and 60k nodes were instead spread out to identically configured 15k nodes. This leads to an overminting of tokens relative to the inflation target when using avg_bin to calculate SSWM. Since the overminting hits all bins and all QoS classes exactly proportionately, there is no unfairness across bins or across QoS classes, and a simple adjustment to SSWM will suffice to correct the overminting without adversely affecting any bin or QoS class. Unlike hypothesis one, for which the PIP-22 turn-on date has no bearing, hypothesis two is limited to the post-turn-on environment.
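The overminting mechanism in Hypothesis Two can be sketched numerically. This assumes a linear PIP-22-style reward (relays × bin / SSWM, in units of RelaysToTokensMultiplier) and an inflation target of one unit per relay; the two node groups and their relay counts are invented for illustration:

```python
# Hypothetical node groups: (bin, node_count, relays_per_node). Not real data.
# Bin-4 nodes are assumed to earn 10% more relays per node (better QoS).
GROUPS = [(1, 1000, 100.0), (4, 250, 110.0)]


def minted(groups, sswm):
    """Total minted, in units of RelaysToTokensMultiplier, assuming a linear
    PIP-22-style weight: reward per node = relays * bin / SSWM."""
    return sum(count * relays * b / sswm for b, count, relays in groups)


total_relays = sum(count * relays for _, count, relays in GROUPS)
target = total_relays  # inflation target: one unit per relay, as pre-PIP-22

# SSWM as the node-count average bin vs the relay-weighted average bin.
node_avg_bin = sum(b * c for b, c, _ in GROUPS) / sum(c for _, c, _ in GROUPS)
relay_avg_bin = sum(b * c * r for b, c, r in GROUPS) / total_relays

print(minted(GROUPS, node_avg_bin) / target)   # ~1.029 -> overminting
print(minted(GROUPS, relay_avg_bin) / target)  # ~1.0 -> on target
```

Changing SSWM rescales every node's reward by the same factor, which is why, under this hypothesis, the correction does not shift rewards between bins or QoS classes.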

Hypothesis one is easy to prove qualitatively. It will take more work, though, to quantify the effect and see how much of the data it explains. I will work on quantifying that.

That QoS improved significantly over the summer has been shown previously.

In analyzing the data from the summer, the three largest contributors to this improvement in QoS that I have found are:

- one major provider accounting for ~20% of the pre-pip-22 nodes who had outlier low QoS suffered 75% attrition over the summer, significantly raising the avg QoS of the network

- ~two to four initially niche, high-QoS providers grew significantly in node count (~5-7% of the current node count) over the course of the summer as the pokt unstaked in event 1 made its way to them as replacement providers. Events 1 and 2 were largely precipitated by PIP-22 approval, as I have shown previously from public notices made by a large pokt custodian coincident with the approval of PIP-22.

- completely independent of anything having to do with PIP-22, one major provider representing about 6% of the current node count massively upgraded their QoS over the course of the summer, resulting in an almost doubling of their cherry-picker probability. (This is very likely the outlier data point seen in figure 4 of the PoktScan pdf, sitting up at 1.9x reward increase compared to session increase.)

I will try to quantify the effects of the above three events in the upcoming days.

Re hypothesis two, I think it is evident from Table 6 of the PoktScan pdf that QoS is lower in bin 1 than in bins 2-4, or at least was in the time frame of your study (though, as you have pointed out elsewhere, the leading bin-1 provider has worked hard to improve their QoS over the last 30 days, leading to a smaller differential now than on the dates on which the pdf data is based). Slide 13 of my presentation that you attached spells out the mechanism by which this leads to identically increased cherry picker probabilities across all bins and QoS levels, as compared to taking the pokt in bins 2-4 and staking it instead to 15k nodes. I understand this falls short of “proving” my hypothesis, so I guess it will come down to whether or not evidence is found of unfairness between bins or between QoS classes (as opposed to the intentional “unfairness” of the cherry picker algorithm). So far, no evidence of the latter has ever been put forward, despite the charge of unfairness being all but ubiquitous.

In the same vein, I plan to annotate my comments directly on a copy of the pdf and post it; I think that will help keep the thoughts in one place rather than having to scroll through the comments section. Give me a couple of days to get that prepared.

I have completed a thorough review of the PoktScan pdf. I have consolidated all comments onto a markup of that document and placed it at the following link:

I have left it in *.docx format rather than converting it back to pdf so that PoktScan can mark it up in response if they so choose. The most important sections, containing new material that has not been rehashed before, are what I label Appendix 2 and Appendix 4.

tl;dr - Nothing has changed in my earlier assessment. I find no basis for the claims of unfairness or reduced rewards as a result of PIP-22 / PUP-21 and therefore no merit to the proposal to lower the PIP-22 exponent (as a result of setting “Kfair” to 25 to 35%) or to use the proposed “Algorithm 3” methodology to calculate SSWM or SSWME.

Thanks for the review, @msa6867. You took the time to go through the whole document and discuss it with us, and you found some typos that I corrected in the new release of the document.

However, I’m puzzled by the focus you take when providing feedback. I had private chats with you and provided you with guidance and data. I had the impression that you understood the core problem being discussed, and I believe your formal background should be sufficient to provide clear criticism of it.

Sadly, I find that discussing this subject with you is becoming circular. You seem to fail to understand the root of the problem in PIP-22 / PUP-21, or you are deliberately avoiding addressing it. Your review chooses to focus on three pillars:

- Analyze averages by stake bins, a subject that has nothing to do with our document. We stated this many times. The problem is QoS, specifically high-QoS nodes in base stake bin.

- Ignore the statistical proof of non-linearity.

- Analyze over-minting due to QoS differences across stake bins, a secondary subject that was partially resolved with GeoMesh (and other similar products from other providers).

Our document and proofs, by contrast, are based on:

- Differences of increased rewards between nodes with different QoS (reduced gains of high-QoS nodes compared to low-QoS nodes).

- Showing that modeling the Cherry Picker as linear is incorrect. Primarily through a specific statistical indicator.

- Showing that PUP-21 requires the previous point to be true in order to provide a fair correction.

I will address the main issues with your analysis, refraining from entering into subjects that are not pertinent to the correctness of our work (such as ranting about the number of nodes before PIP-22 or the minting by stake bin).

I will divide this post into three parts: first a short TL-DR for the broader community, then two general comments on the analysis that you provided, and finally responses to the appendices that Mark wrote.

TL-DR

Our analysis wanted to answer two simple (sort of) questions:

1- Is the PIP-22 / PUP-21 model fair to nodes (regardless of their QoS)? In other words, are my rewards the same as before (regardless of my QoS)?

2- Is modeling the Cherry Picker as linear correct? In other words, does an increase of X% in sessions by node translate into an increase of X% in relays?

The analysis provided by @msa6867 does not address either of these questions.
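Question 2 can be framed as a per-provider ratio: growth in relays per node divided by growth in sessions per node, which a linear Cherry Picker model predicts to be 1. A minimal sketch; the function name and figures are hypothetical, and this is one plausible reading rather than necessarily the pdf's Equation 12:

```python
def relays_to_sessions_ratio(s_before, s_after, r_before, r_after):
    """Growth in relays per node divided by growth in sessions per node.
    A linear Cherry Picker model predicts 1.0 for every provider."""
    return (r_after / r_before) / (s_after / s_before)


# Hypothetical per-node, per-day figures (not taken from the data).
print(relays_to_sessions_ratio(10, 15, 2000, 3000))  # 1.0: +50% sessions, +50% relays
print(relays_to_sessions_ratio(10, 15, 2000, 5700))  # ~1.9: relays grew 2.85x
```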

To answer the first question, he takes a macro view of the Pocket Network, ignoring the QoS split in his analysis. Instead of analyzing node runners grouped by their QoS, he merges all nodes into a single group. This is what he calls the “weighted average”. It is the same as taking a group of N random nodes from the network and adding them all up as a single entity. Clearly this metric does not address a problem of fairness between different node runners. Also, by doing so he obtains the trivial and expected result that the number of relays by node stays constant. No surprise here: the Pocket Network is a closed system and the relays need to go somewhere; he only proved that the subset of node runners used in our analysis is correct (representative). Finally, he uses this value as the basis of his thesis that there is no fairness issue, but it is only a single metric: how can a single metric give us any insight into how the metric changed in two groups of node runners (low- and high-QoS)?

The second question is ignored entirely, apart from some weak criticism of the statistical metric that was proposed. This is very worrying, as dismissing such a metric requires proof that it is not applicable. Also, he asks us to apply it to other time segments, knowing (I hope) that it will yield very different results. This is expected: if you do not expect a strong change in one of the variables (the number of sessions by node in this case), you will be measuring noise. There is no other time period in which the number of nodes was reduced so drastically; the time frame chosen to apply this metric is the best one in the history of the Pocket Network.

Finally, while ignoring the statistical proofs that modeling the cherry picker with a linear model is not correct, he uses a linear model in all his “justifications”. This means that all the examples he presents are invalid, since they are based on a false premise.
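The point about a single pooled metric can be illustrated with two hypothetical groups whose changes offset each other. The numbers are invented and chosen only to show that a pooled ratio of 1.0 is compatible with opposite movements inside the groups:

```python
def growth(before, after):
    """Ratio of after to before (1.0 means unchanged)."""
    return after / before


# Hypothetical relays per day for two equally sized groups (illustrative only).
by_qos = {
    "high_qos": {"before": 1000.0, "after": 900.0},
    "low_qos": {"before": 1000.0, "after": 1100.0},
}

for name, g in by_qos.items():
    print(name, growth(g["before"], g["after"]))  # 0.9 and 1.1

pooled = growth(
    sum(g["before"] for g in by_qos.values()),
    sum(g["after"] for g in by_qos.values()),
)
print("pooled", pooled)  # 1.0
```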

General Comments

Here I will comment on two subjects that are relevant to @msa6867’s analysis (or the lack of it).

Overlooking the statistical analysis (main proof of non-linearity)

Section 4.3 contains a statistical analysis of the relation between sessions-by-node and relays-by-node. This section shows that these two variables are not linearly correlated. A linear correlation is required for PUP-21 fairness (and for any other model/proof provided by Mark). This requirement of linearity is shown in section 4.5, which was also ignored, this time without any comment. We assume this means that Mark acknowledges that the underlying model of PUP-21 requires a linear relationship between sessions-by-node and relays-by-node.

Section 4.3 is dismissed with only a few weak comments:

This entire section is meaningless from a statistical analysis perspective. Particularly as relates to isolating anything having to do with PIP-22. I suggest doing similar studies on any other 2-month period of time (Eg. Jan-Mar 2022, or April-June 2022) to see how much loss of determinism happens naturally over the course of two months as providers upgrade systems, experience attrition, gain new customer base, etc, etc.

This is really concerning. Claiming that a statistical indicator of linearity is meaningless when testing the fitness of a linear model is a very strong claim. It cannot be made lightly; proof must be given that the indicator does not apply.

The document at its core proves the non-linearity between two variables, the number of sessions-by-node and the number of relays-by-node. No matter the period of the analysis, the result will always be rejection of the linearity. The only chance to prove linearity is precisely in the analyzed period of time, where one of the variables (the number of sessions-by-node) changed notably above other noise factors. Requesting an analysis over another period of time, where no large change in the observed variables is expected, is simply wrong or intentionally misleading.

Furthermore, one should not expect to see any correlation between the change of relays per session experienced by a provider (Equation 12 Vrc) and the QoS of the provider.

One should not expect such a correlation, but it exists (see figure 6 and table 5). The data and the calculations are published. On the other hand, the document that you provided does not analyze this point to any extent. This is just a statement with no proof.

… Rather one would expect there to be a correlation between Vrc and the CHANGE of QoS of the provider over the time period in question. Providers that improved QoS – whether they started as good or bad QoS providers should see an increase in relays per session, while the opposite should occur for providers that did not improve QoS or deteriorated in QoS. E.g., a provider that moved from 500ms to 350ms latency would see a Vrc > 1 while a provider that slipped from 150ms to 200 ms would see a Vrc < 1. See Appendix 2 and 4 for further details.

This is expected and makes no point. It just proves a fundamental, accepted concept: if you improve your QoS, you increase your number of worked relays. This does not relate to the unfairness problem.

Changes in the landscape since the publication of this document

Some points raised by Mark refer to the current stabilization of the averages by bin and of staked tokens/nodes. All these factors are outside the scope of the work and I won’t analyze them. You cannot judge a work by events that happened after its publication.

I only want to say that there were some major changes in the Pocket Network that should not be overlooked: LeanPocket and GeoMesh. These advances in node client technology have dramatically changed the analysis of the network:

- The nodes are much cheaper. Scaling costs are negligible up to a large number of nodes.

- The limiting factor of cost is the associated chains, not the node itself.

- The QoS distribution changed dramatically (for good).

Due to these changes there are a lot of new strategies:

- Maximizing stake does not seem like the best strategy; PIP-22 becomes irrelevant for small node runners.

- The over-provisioning of Pocket nodes is not a good indicator of the costs of running nodes; the cost of the chains is a major factor now.

- The number of Pocket nodes is only important for the block size; the sell pressure from this factor is much lower than before.

These factors are the major reasons why we are not actively pushing this proposal. We want to analyze other options that might be better for the ecosystem and introduce less confusion in the community. Until we reach a decision we will hold this proposal.

This does not in any way mean that the presented analysis is incorrect.

Appendix Responses

Appendix 1

This is just a recap of Mark’s presentation and is not pertinent to the document. Moreover, he said that this presentation had nothing to do with PUP-25, so why is it now added to the review?

Appendix 2

The section starts with a false statement:

PoktScan postulated that, “This modification is required to …enable a stake compounding strategy that is beneficial for the quality of service of the network.” The implication is that the current PUP-21 compounding strategy is detrimental to the QoS of the network.

This is not what we said. We know that QoS improved, and we have said so in public forums and chats. This false statement seems to be a justification for adding an analysis that is correct but irrelevant to the thesis of the presented document.

We only refer to the network’s QoS as a whole in section 5.3. In this section we show that a non-linear stake weight can potentially improve network QoS beyond the QoS expected under PUP-21. Surprisingly, this section was not analyzed, on the grounds that:

This violates the spirit and intention of PIP-22 and PUP-21 which is that in the current season, goal is to incentivize consolidation among as broad a swath of the community as possible, not to disincentivize consolidation.

The very same can be said of the whole document, so why choose not to analyze this part?

The QoS benefit of reducing the number of nodes in the 60K bin is evident. Currently the 60K bin averages higher than the 15K bin, which means better QoS. If these N nodes in the 60K bin turned into 2 × N nodes in the 30K bin, the QoS would rise.

Then it is stated that the change in P4 (a specific provider in the released data) affected the behavior of the network as a whole. While P4 is a big node runner, it holds only ~15% of the nodes in the network. It is unlikely that a single node runner controls the behavior of all the other runners; the chance of always sharing sessions with nodes from this provider is not as high as it seems. Moreover, the theory that many small node runners did the same (increased their QoS) in the same period is unlikely and very hard to prove.

There is a problem with the QoS-change argument in this appendix (which extends to the whole analysis): changes in QoS do not (much) affect the linearity of a model, especially when these changes are roughly linear, as seen in figure 2.1 of Mark’s appendix 2. While this is hard to explain in simple words, it is obvious to people versed in the subject. If there is a linear relation between two variables and a change of limited impact occurs at a given point in time, or a change is spread linearly over time, the linearity remains strong.

This is another reason why we chose to use a statistical indicator (the coefficient of determination) rather than a handcrafted feature (the only one used in Mark’s analysis) to prove the absence of a linear relationship between the number of sessions by node and the number of relays by node.

The only way to observe complete destruction of the linearity indicator due to QoS changes is if the QoS changes were severe, occurred randomly, and were randomly distributed over the measured period. This last requirement makes the argument of a massive change in QoS less likely.
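A toy illustration of the claim that a limited, one-off change leaves the linearity indicator high. The data are synthetic assumptions: sessions per node grow steadily, and halfway through a QoS upgrade lifts relays per session by 10%. It relies on the standard fact that, for a least-squares line with intercept, R² equals the squared Pearson correlation; it is not a reproduction of the pdf's analysis:

```python
def pearson_r2(x, y):
    """Squared Pearson correlation; equals R^2 of a least-squares line with intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    return sxy * sxy / (sxx * syy)


# Synthetic data: a 10% step in relays per session at the midpoint.
sessions = [float(s) for s in range(1, 101)]
relays = [2.0 * s if s <= 50 else 2.2 * s for s in sessions]

print(round(pearson_r2(sessions, relays), 3))  # stays close to 1
```

This only illustrates the direction of the argument under one assumed scenario; it does not by itself show what the measured data contain.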

Appendix 3

The appendix starts with a new calculation, called the “weighted” average. We will cover this in appendix 4, where the value is presented.

Now we will answer the different numbered statements in this section.

SECOND: The value (K_{fair}, algorithm 3) was chosen as a conservative reduction due to the uncertainty in its calculation and its negative impact on the community. We acknowledge the problem with the metric used to compute this reduction (the change of sessions by node to the change of relays by node), and thus we propose using a more solid one as the basis of our analysis (the determination coefficient). On the other hand, your analysis is based solely on this metric, which is known to be noisy.

THIRD: The issue that we raise is not related to stake bins, as we have always said. This comment seems misleading. The justification for changing the linear PIP-22 to a non-linear one is to return gains to high-QoS nodes in the lower bin. All high-QoS nodes are gaining less, but only the ones in the lowest bin are earning less than before.

FOURTH: The K_{fair} calculation is not well defined. It should be based on the QoS distribution (in the network, not the bins). This should be improved. In any case, it does not invalidate the need for a non-linear stake weight.

FIFTH: The need to change K_{fair} is to be expected, as with any other parameter. Optimal strategies always change, as in any dynamic system.

SIXTH: The curve shown is the expected behavior, as stated in section 5.3. It will be beneficial for the Pocket Network to change the stake distribution. In a non-linear stake weighting scenario, increasing the stake can only increase your gains up to a point; beyond that you are forced to increase your QoS or accept a lower return per POKT invested.

Also, the node cost given by Mark (USD 15) is completely arbitrary and far from reality (both before and after LeanPocket). Analyzing the cost of a Pocket Node is now irrelevant: a single node or 100 nodes cost the same in terms of hardware for the Pocket Node.

SEVENTH: Once again the subject is mixed up with the averages by stake bin, which is not the subject of the work.

The low-QoS nodes in the base bin will earn more with non-linear stake weighting, yes, but the low-QoS nodes in the high bin will also earn less. If token price pressure/inflation controls render low-QoS nodes unprofitable, they will start staking POKT to move to the highest bin, and in that scenario non-linear staking will punish low-QoS nodes… Relating stake bins to QoS is a recurring subject in Mark’s analysis. This argument makes no sense, as the intention of the document is not to balance rewards by stake bin for everyone, but only for high-QoS nodes.
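The "gains only up to a point" behavior can be sketched by looking at weight per 15k-POKT step. This assumes a PIP-22-style weight of bin^exponent (before dividing by SSWM), with bins 1 to 4 standing for 15k to 60k; the exponent 0.7 is a hypothetical non-linear value, not the one derived from Kfair in the document:

```python
def stake_weight(bin_, exponent):
    """PIP-22-style weight before dividing by SSWM (assumed form): bin ** exponent."""
    return bin_ ** exponent


def weight_per_bin_step(bin_, exponent):
    """Weight per 15k POKT staked; constant when exponent == 1."""
    return stake_weight(bin_, exponent) / bin_


for exp in (1.0, 0.7):  # 1.0 = linear, 0.7 = hypothetical non-linear exponent
    print(exp, [round(weight_per_bin_step(b, exp), 3) for b in range(1, 5)])
```

With exponent 1 every 15k POKT earns the same weight in any bin; with an exponent below 1 the weight per POKT falls as the bin rises, so extra stake yields diminishing returns unless relays (QoS) increase.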

Appendix 4

This appendix reveals a fundamental (and troubling) flaw in Mark’s analysis.

Our document analyses the relays-by-node relation to sessions-by-node and its correlation to the QoS of the node runner. This means that we compare different groups of node runners.

To this end we gathered information from 13 node runners which, after filtering, resulted in 10 for the Polygon chain (all calculation code is public; feel free to audit it). So we have samples from 10 different node runners. Of these 10 subjects, 7 fell in the high-QoS category and 3 in the low-QoS category. The conclusions were drawn by analyzing differences between these two groups.

The analysis provided by Mark ignores the QoS differentiation of the subjects and mixes all node runners into a single entity. This is a very basic sampling mistake: we are trying to draw conclusions about two different groups (low-QoS and high-QoS node runners in our case).

This problem can be clearly seen using the number of nodes by provider (using Mark’s average number of nodes):

- P1 : 145

- P2 : 255

- P4 : 2842

- P5 : 7693

- P6 : 919

- P8 : 986

- P9 : 457

- P11 : 217

- P12 : 1602

- P14 : 949
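Using the node counts listed above, the share of the pooled sample held by each provider can be computed directly. No new data is introduced; the sketch only normalizes the quoted counts:

```python
# Average node counts per provider, as listed above.
NODES = {"P1": 145, "P2": 255, "P4": 2842, "P5": 7693, "P6": 919,
         "P8": 986, "P9": 457, "P11": 217, "P12": 1602, "P14": 949}

total = sum(NODES.values())
shares = {p: n / total for p, n in NODES.items()}

# The three largest providers and their combined share of the pooled sample.
top3 = sorted(shares, key=shares.get, reverse=True)[:3]
top3_share = sum(shares[p] for p in top3)

print(total)                 # 16065
print(top3)                  # ['P5', 'P4', 'P12']
print(round(top3_share, 3))  # 0.755
```

Counted per provider, each of the 10 subjects would carry 10% of the weight; pooled by node, three of them carry about three quarters of it.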

If we add all the node runners together, the final sample will over-represent P5, P4 and P12. Thus this metric does not analyze how the different node runners behave; in fact, we could drop the node runner labels and simply calculate it over all the nodes in the network. What can we expect to see if we take the whole network? The same result, since the Pocket Network is a closed system: relays need to go somewhere and their number is independent of the number of nodes. This metric only proves that the sub-sample of node providers used for the analysis is accurate (thanks Mark).

The result of this metric is entirely expected and shows nothing beyond a trivial conclusion.

The numerous examples that follow in this appendix all have the same problem we have been discussing so far. The models provided by Mark (this analysis, PIP-22 and PUP-21) lack the complexity required to analyze changes in the QoS of the network. They are all based on linear modeling of the Cherry Picker behavior, and he refuses to acknowledge that there is no proof that this is valid. There is no information in these examples. All of their analysis focuses on a macro view of the system that goes against a healthy and decentralized Pocket Network.

Appendix 5

The over-minting due to differences in QoS between the stake bins is irrelevant to the problem being discussed. The subject was mentioned in 4.4 as an additional stress on high-QoS nodes in the first bin. The resolution (or not) of this particular problem is anecdotal. We have already commented on this in this thread.

Appendix 6

Once again, a linear modeling of the Cherry Picker effects, something that is not proved to be correct (in fact we proved otherwise).

Just a comment here, our original analysis of PIP-22 was criticized for including (among other things) additional stake entering the network. Now it seems that this is not a problem…

I will go over all your responses, and plan to continue the interaction, but am short on time this week. I have a window of time on Monday in which I will go through what I can but then am tied up the rest of that week as well. The week after that I will have more time.