I just hardcoded MinimumNumberOfProofs to 35 (with regard to proof submission only). As @RawthiL has shown, this will have minimal effect on the network as a whole, but it will eliminate negative value txs and reduce tx count by ~1%

I think that this is our least disruptive and most effective “bang for the buck” action. I would argue that the effects on decentralization are minimal because of the wide adoption of lean clients. Any given session no longer represents 25 unique targets as it did when we adopted that number.

I do not have data to back it up, but my guess is that an average session currently contains less than 20 physically different nodes.

This option doesn’t work for Gateways. Gateways rely on having node diversity from multiple stakes (i.e., Gateways want to have hundreds of nodes available at any given time, and this is trending in the wrong direction). Node diversity is critical to provide the Quality of Service that Gateway customers require.

If this happened, gateways would probably inadvertently create even more bloat, since they would want to achieve the same number of nodes per chain per session as they have today, thus resulting in staking more apps.

Shooting from the hip: Perhaps changing the minimum relays to post a claim could help with this? QoS probing isn’t going away anytime soon and future gateways will likely consume even more RPCs across more nodes per session. At the same rate, if there were even more relays on network, doesn’t this same issue continue to grow?

I will comment that I do not believe moving any sort of QoS or artificial metrics akin to QoS onchain is the correct answer, and I believe it would be woefully premature. This type of measure can create artificial restrictions and second-order effects (gamification) where the incentives for QoS onchain directly compete with those of the gateways.

I recognize the complexity of this issue and am happy to contribute any way I can to devise a solution that is copacetic to all personas in the ecosystem.

I’ve added more data. It’s of special interest to see how the claims that are below each of the presented thresholds are divided by gateway. The Nodies gateway seems to be generating more low-relay claims. This does not imply causation of any kind, as the construction of these samples depends on a lot of things, like overall traffic. I also want to highlight that even if Nodies stops doing these relays, it won’t change the long-term issue that will keep arising with each new gateway.

@JackALaing @Dermot et al

I wanted to reiterate the context, suggestions, next steps, backup plans, etc in my post above: Block Sizes, Claims and Proofs in the Multi-Gateway Era - #3 by Olshansky

To tl;dr the next steps:

1. We need data from @poktblade w.r.t. Nodies gateway-related questions. @RawthiL’s data above suggests this is a necessary first step.

2. In parallel to (1), we can start collecting similar data from Grove’s side.

3. In parallel to (1) & (2), I can continue looking into MinimumNumberOfProofs with @toshi.

4. We should only onboard new gateways once (1) and (2) are resolved, and the best practices are well-defined. This can be an iteration on top of the PR that’s currently in review: [Docs] AAT related comments & documentation improvements by Olshansk · Pull Request #1598 · pokt-network/pocket-core · GitHub

I understand that Gateways “want” certain things. And, I submit that their “wants” are exactly why we only allow vetted and trusted parties to operate as Gateways. The current (V0) environment requires Gateways to act with care and to hold the overall best interest of the network above their personal goal maximization.

We do not currently know exactly how our “Trusted Gateways” are behaving and are forced to undertake research projects like this one (THANK YOU @RawthiL for helping us see) in order to keep the network running smoothly.

Changing minimum proofs per claim was the first mitigation strategy that was proposed and remains one of the top considerations. Unfortunately, we lack visibility into the actual number of test transactions that our “Trusted Gateways” are consuming which is making it difficult to figure out what an appropriate minimum number would be.

Thank you. Please confirm the exact number of test transactions per node per session which the Grove Gateway is producing and [if that number is variable] help us understand the circumstances under which it varies.

I wanted to consolidate a few different threads happening across different forums to make sure we’re on the same page.

- Urgency - This is very important but not ultra urgent. As long as we hold off on adding more gateways/appStakes, we should be okay for now.

1.1 @Dermot @b3n - It’s critical that we hold off on introducing more gateways & sharing app stakes until this is resolved.

1.2 @BenVan - I know we’re nearing the block size limit but would you agree with the level of urgency assuming (1.1)?

- Data Collection

2.1 @RawthiL thanks again for everything!

2.2 I’ll keep looking for any data we can identify within Grove, but no major changes (that would affect this) to app stakes have been made in recent past.

2.3 @poktblade I wanted to reiterate my asks above. Specifically, could you share:

2.3.1 App Stakes nodies has: (address, chain, stake)

2.3.2 This would help us understand if there’s an opportunity to consolidate app stakes on a per chain basis. Again, this won’t be necessary in Shannon, but we have to work around the limitations today.

2.4 @BenVan Do you mind sharing any other requests for data you might have?

- Future Best Practices

It is outside the scope of this discussion, but we’ll provide more details on (2.3.2) for future Morse Gateways.

The tl;dr for now is: instead of having N apps staked for chain_id, have 1.

cc @JackALaing

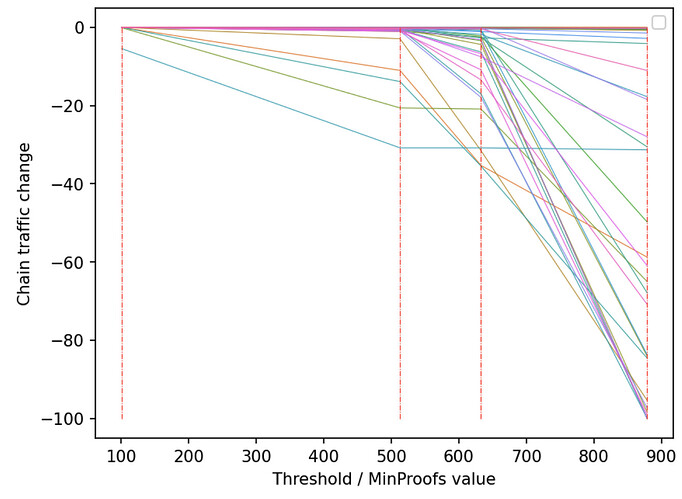

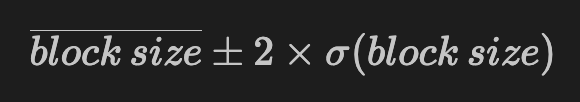

More information on what will happen if we increase the pocketcore/MinimumNumberOfProofs to achieve the reduction in this table:

| Percentile (~size reduction) | pocketcore/MinimumNumberOfProofs (Threshold) |
|---|---|
| 1 % | 101 |
| 10 % | 513 |
| 25 % | 633 |
| 50 % | 879 |

First the overall network traffic will be reduced as:

| Network traffic change | pocketcore/MinimumNumberOfProofs (Threshold) |
|---|---|
| -0.05 % | 101 |
| -1.79 % | 513 |
| -8.19 % | 633 |
| -21.35 % | 879 |

But the effect will be more important on a per-chain basis, this can be seen here:

There are many chains that will lose more than 25% of their traffic even if we only reduce block size by 10%, by setting a threshold at 513 relays per claim. These are small chains. For completeness, you can see the full list of chains that will lose a significant amount of relays in the table below:

Full Table

| Size Reduction | Threshold | Traffic reduction higher than | Affected chains |
|---|---|---|---|
| 1 % | 101 | 10% | No chain |
| | | 25% | No chain |
| | | 35% | No chain |
| | | 50% | No chain |
| 10 % | 513 | 10% | [‘0005’, ‘0027’, ‘0053’, ‘0056’] |
| | | 25% | [‘0056’] |
| | | 35% | No chain |
| | | 50% | No chain |
| 25% | 633 | 10% | [‘0005’, ‘000F’, ‘0027’, ‘0053’, ‘0054’, ‘0056’, ‘0070’, ‘0077’, ‘0079’] |
| | | 25% | [‘0005’, ‘000F’, ‘0053’, ‘0056’] |
| | | 35% | [‘0005’, ‘0053’] |
| | | 50% | No chain |
| 50% | 879 | 10% | A lot… |
| | | 25% | A lot… |
| | | 35% | [‘0005’, ‘000F’, ‘0022’, ‘0026’, ‘0027’, ‘0028’, ‘0044’, ‘0049’, ‘0051’, ‘0053’, ‘0054’, ‘0063’, ‘0070’, ‘0072’, ‘0076’, ‘0077’, ‘0079’] |
| | | 50% | [‘0005’, ‘000F’, ‘0022’, ‘0026’, ‘0027’, ‘0028’, ‘0049’, ‘0051’, ‘0053’, ‘0054’, ‘0063’, ‘0070’, ‘0072’, ‘0076’, ‘0077’, ‘0079’] |

Given the effect that this has on per-chain relays, I think it is not realistic to expect that we can free up more than 25% of block space without affecting the ecosystem (using this method).

If we intend to fit more than one additional gateway, we will probably need to change more than a single parameter.

Hey everyone, I created a Block Size War Room in Discord. Obviously the forum is good for long forum discussions, but for coordinating and quick info gathering, Discord can be useful.

Feel free to utilize it.

Grove gateway has permission to invoke over 1000 gigastakes (exact number I can confirm with the foundation), but we actively invoke ~261 of them.

For each app stake, there are 24 nodes per stake per session, up to 15 chains (with 1 session per chain) per stake and 261 (active) appstakes. This implies that we are currently able to invoke 93,960 total nodes in session and 3915 active sessions at any given time.

Even if we were to reduce the total nodes per session per chain to 5, you achieve a ~79% decrease of nodes in session (to 19,575), but you do not reduce the number of sessions. If you reduce the number of chains per app to 5, then you achieve a ~67% decrease of nodes in session (to 31,320) and of the number of sessions (to 1305 active sessions). This also reduces the number of top quality nodes that have an opportunity to produce outsized rewards. To that point, Gateways require node diversity in session to provide QoS that end users need and are willing to engage with. The logical conclusion from a Gateway’s perspective is then to up the number of appstakes they actively invoke to the limit (~1000) to make up the difference, resulting in the same outcome (and possibly worse!).
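As a quick way to check the arithmetic above, here is a minimal Python sketch. It only uses the figures quoted in this post (24 nodes per session, 15 chains per app stake, 261 active app stakes); the function and variable names are illustrative and not taken from any Pocket tooling.

```python
# Figures quoted in the post; names are illustrative only.
NODES_PER_SESSION = 24
CHAINS_PER_APP = 15
ACTIVE_APP_STAKES = 261


def footprint(nodes_per_session, chains_per_app, app_stakes):
    """Return (active sessions, total nodes in session) for a gateway."""
    # One session per chain per app stake.
    sessions = chains_per_app * app_stakes
    return sessions, sessions * nodes_per_session


def reduction(before, after):
    """Fractional decrease when going from `before` to `after`."""
    return 1 - after / before


base_sessions, base_nodes = footprint(NODES_PER_SESSION, CHAINS_PER_APP, ACTIVE_APP_STAKES)

# Scenario A: 5 nodes per session, chains per app unchanged.
a_sessions, a_nodes = footprint(5, CHAINS_PER_APP, ACTIVE_APP_STAKES)

# Scenario B: 5 chains per app, nodes per session unchanged.
b_sessions, b_nodes = footprint(NODES_PER_SESSION, 5, ACTIVE_APP_STAKES)

print(f"baseline:   {base_sessions} sessions, {base_nodes} nodes")
print(f"scenario A: {a_sessions} sessions, {a_nodes} nodes "
      f"({reduction(base_nodes, a_nodes):.1%} fewer nodes)")
print(f"scenario B: {b_sessions} sessions, {b_nodes} nodes "
      f"({reduction(base_nodes, b_nodes):.1%} fewer nodes, "
      f"{reduction(base_sessions, b_sessions):.1%} fewer sessions)")
```

The counts match the ones quoted (93,960 / 19,575 / 31,320 nodes and 3915 / 1305 sessions). The percentage reductions are computed against the 93,960-node and 3915-session baseline.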

I understand the nuance in increasing the blocksize (and how it affects scale) as well as the implications of a consensus-breaking change this late in the lifecycle of Morse, but I would advocate to double the blocksize (again) to try and get the existing ecosystem until Shannon (when this whole conversation becomes moot). In addition, I would recommend Suppliers lowering the minimum relays per claim. While I understand this is a “tax” on Suppliers, I believe it is a tradeoff to scale for the time being.

I believe this solution paves the way for the 3rd+ gateway to come onchain in Morse and hopefully brings an increase in relays to build momentum as we prepare to launch Shannon. Eager to see if other creative solutions pop up to this complex problem. (Also, pls check my arithmetic and parameter values above.)

tl;dr My personal recommendation on behalf of Grove, and as a public representative of the Pocket Network ecosystem, is to:

- Double the BlockSize from 8MB to 16MB, spending ~1 month at 12MB in between

- Increase MinimumNumberOfProofs from 10 to 500

The following is an opinionated and intentionally simple tradeoff table I’ve put together with @fredt & @shane to help drive to a solution.

All of these are simply a governance transaction that can be executed by the foundation if approved by the DAO w/o any client or consensus-breaking changes.

Note: I recommend clicking the expand button in the top right corner so the table is readable.

| Parameter | On-Chain Parameter | Pros | Cons | Nuances | Additional Context |
|---|---|---|---|---|---|
| Block Size | pocketcore/BlockByteSize | Enables the ability to handle more on-chain Sessions, enabling more Gateways & more Chains | Costs for every supplier (i.e. node runner) will increase | Introduces overhead to communicate new resource requirements | 20MB blocks were tested on TestNet when the upgrade from 4MB to 8MB took place |
| # of Relays Per Session | pocketcore/MinimumNumberOfProofs | Reduces the number of on-chain Sessions, enabling more Gateways & more Chains | Rewards and relays for low-volume chains decrease | Could affect tokenomics if the number becomes too high. | See the amazing data & analysis from @Ramiro Rodríguez Colmeiro above |
| # Nodes Per Session | pocketcore/SessionNodeCount | skipped | skipped | Out of scope given the requirements of this discussion since it impacts one or more of QoS, decentralization & tokenomics | A new forum thread should be started for this discussion. |
| Max Chains | pocketCore/MaximumChains | skipped | skipped | Out of scope given the requirements of this discussion since it impacts one or more of QoS, decentralization & tokenomics | A new forum thread should be started for this discussion. |

For additional details related to how many applications Grove’s gateway is handling, I have used the query below to collect the number of relays handled since March 1st showing the 216 app stakes actively being used by Grove’s Gateway. The full csv is available here.

    SELECT
      protocol_app_public_key,
      CONCAT('[', STRING_AGG(DISTINCT chain_id, ', '), ']') AS list_of_chain_ids,
      COUNT(*) AS total_relays
    FROM
      `portal-gb-prod.DWH._prod`
    WHERE
      TIMESTAMP_TRUNC(ts, DAY) >= TIMESTAMP("2024-03-01")
      AND protocol_app_public_key IS NOT NULL
      AND TRIM(protocol_app_public_key) <> ""
    GROUP BY
      protocol_app_public_key
    ORDER BY
      total_relays DESC;

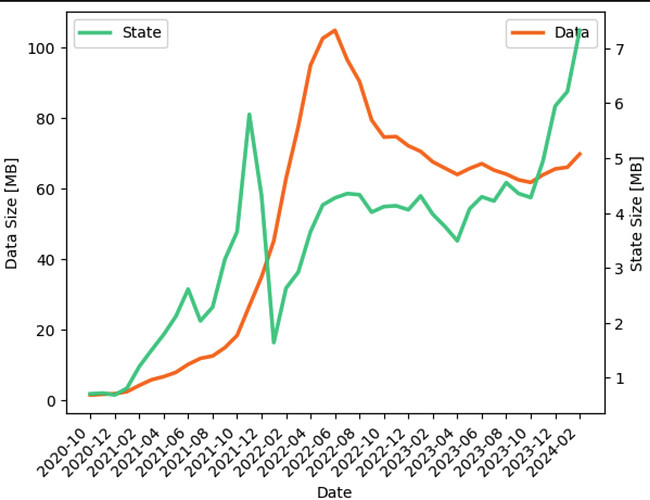

I advocate for increasing the block space until Shannon is complete, as long as consensus can keep up. The majority of node operators utilize GeoMesh and typically have one or two master nodes at any given time. Most of them should be using pruned options, so the impact on cost and storage increase should be minimal. We should monitor the P2P IO usage of the network, block times, and the actual increase in storage for node operators as we expand the block space. I’ve requested Poktscan to chart the data directory over time as the state size increased, and it seems to show minimal impact.

Economically speaking, I believe node operators should be open to increasing block space, as that has a strong correlation to more potential relays from gateway operators.

Given that POKT doesn’t have to operate with extremely low block times, this could be the option with the lowest friction without requiring gateway operators to change their QoS strategies. If the network allows gateways to send relays to ‘n’ nodes, then we should permit them to do so. Even if gateways attempt to optimize fully with respect to block space, as @fredt mentions, this will decrease potential QoS checks and the ability for gateways to evenly distribute relays in an altruistic fashion. Reducing ‘SessionNodeCount’ or ‘MaximumChains’ doesn’t seem very fruitful; all this does is shift the problem to the gateway operator, who will likely just request more app stakes. Over time, the same number of sessions and claims will occupy more block space anyway.

Finally, even if we are able to optimize on the number of claims submitted on the network, this likely won’t be fruitful for the scale that the Foundation is shooting for, which is an additional 2 or more gateway operators in the network. The result of decreasing the aforementioned two parameters will not buy enough block space for these gateway operators.

@poktblade Do you mind sharing similar information for nodies as I did above for Grove? I’ve been told it’s also available on poktscan so would appreciate your help to add this level of transparency & communication with the ecosystem.

I’ve requested Poktscan to chart the data directory over time as the state size increased, and it seems to show minimal impact.

@RawthiL Is this something your team would add?

Please react to this comment if the recommendation seems sensible to the readers. I will move this to an official proposal early next week if there are no objections.

My conversation with @poktblade was around the same subjects that we already talked about here. We were even of the same mind about increasing block size and min proofs. Not sure why he did not post earlier.

The graph:

is just another way to represent the same bar plot of block size, with the added data size. But since the latter was not that important to this discussion (it does not scale with claim/proofs as the state), I left it out.

We also analyzed app usage and claim frequency, the difference between gateways was interesting but irrelevant for this topic, as we cannot force gateways into specific strategies. Both @poktblade and @fredt agree on this I think. Consequently I left that graph out also.

Thanks @RawthiL and @BenVan for kickstarting this conversation

And for all the input and leadership from @Olshansky @shane @poktblade and @fredt on the forum and behind the scenes

It looks like we will soon be able to onboard new gateways pre-Shannon. Four additional gateways (including Liquify, who are now waiting for app stakes) are in the final onboarding stages, so I hope everyone can continue to rally around this effort and get it over the line ASAP. It’s really appreciated.

In the meantime, all those interested in how app stakes are best managed, please see here (if you haven’t already) to comment on any best practice instructions you believe new gateways should follow to get the best results in terms of QoS and network health.

Oh wow, four additional gateways? Is a 16MB block size going to be sufficient to support that? Or are we just going to cross that bridge when we get to it?

A couple of questions that Poktscan may be able to answer, which will help estimate the cost of claims/proofs by sampling the various claims in the network historically:

- Does MaxRelays per single app affect claim size?

- number of relays within a claim

- Any other factors that may affect the actual bytes of the claim tx?

I think answering these questions will help us plan how much to increase block size for each GW operator. AFAIK the size is mostly fixed (as per our Discord convo), but we need to figure out the amortized cost of the txs to do proper capacity planning.

Thanks

Given our conversation in private I think that the actual question here is:

How do we calculate the impact of new gateways in the block size?

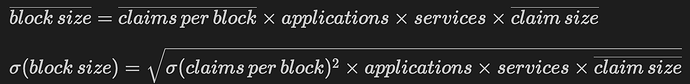

Well, good news: it’s very simple. I will dump values here and then justify them later.

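The block size used by a new gateway, with ~96% confidence, is given by:

$$\text{block size} = \text{mean block size} \pm 2 \cdot \text{std block size}$$

with:

$$\text{mean block size} = \text{mean claims per block} \cdot \text{applications} \cdot \text{services} \cdot \text{mean claim size}$$

$$\text{std block size} = \sqrt{(\text{std claims per block})^2 \cdot \text{applications} \cdot \text{services} \cdot \text{mean claim size}}$$

where:

- mean claim size = 2.4944 KB (obtained empirically, 1% error)
- mean claims per block = 5
- std claims per block = 2.1044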

To illustrate this we will use “Nodies”, a gateway using a round-robin strategy with their apps (worst case scenario). Nodies has applications = 20 and services = 8 per application, then, the formula is filled as:

mean block size = 5 * 20 * 8 * 2.4944 KB = 1995.54 KB = 1.94877 MB

std block size = sqrt(2.1044**2 * 20 * 8 * 2.4944 KB) = 42.04 KB = 0.041 MB

Expected block size of “Nodies” with 96% confidence:

1.948 MB +- 0.082 MB
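For anyone who wants to plug in other app and service counts, the worked example above can be sketched in Python. This follows the two expressions exactly as written in the example (including the square-root form of the std). The function name is hypothetical, and the ±2·std interval and the 1024 KB-per-MB conversion are inferred from the numbers in the example, so treat it as an illustration rather than a reference implementation.

```python
import math

# Empirical constants quoted in this post.
MEAN_CLAIM_SIZE_KB = 2.4944
MEAN_CLAIMS_PER_BLOCK = 5
STD_CLAIMS_PER_BLOCK = 2.1044


def gateway_block_size_kb(applications, services):
    """Return (mean, std) of the block size in KB used by one gateway.

    Mirrors the expressions in the worked example above, as written.
    """
    n = applications * services
    mean_kb = MEAN_CLAIMS_PER_BLOCK * n * MEAN_CLAIM_SIZE_KB
    std_kb = math.sqrt(STD_CLAIMS_PER_BLOCK ** 2 * n * MEAN_CLAIM_SIZE_KB)
    return mean_kb, std_kb


# "Nodies" example: 20 applications, 8 services per application.
mean_kb, std_kb = gateway_block_size_kb(applications=20, services=8)

# Assumption: the ~96% interval is mean +/- 2 * std, and 1 MB = 1024 KB.
low_mb = (mean_kb - 2 * std_kb) / 1024
high_mb = (mean_kb + 2 * std_kb) / 1024
print(f"mean = {mean_kb / 1024:.3f} MB, interval = [{low_mb:.3f}, {high_mb:.3f}] MB")
```

With the rounded constants above the mean comes out at about 1995.5 KB and the std at about 42.04 KB, in line with the example.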

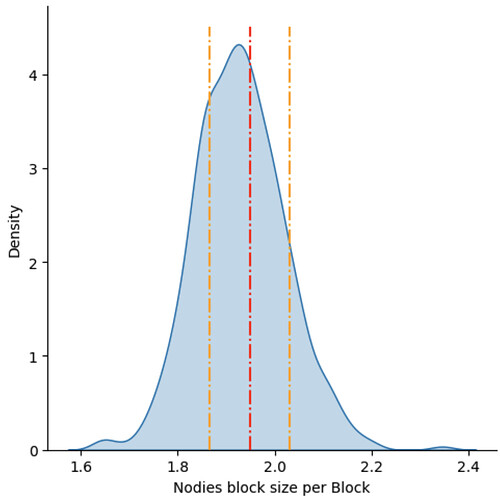

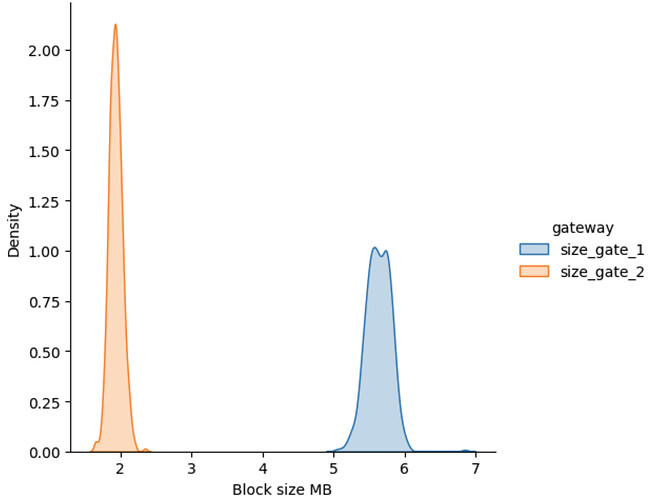

Let’s contrast with the data:

The distribution is the block size imputed to “Nodies” claims+proofs in the last 500 blocks that were analyzed in this thread. The red line is the average, the orange ones the expected 96% confidence interval.

Not bad if you ask me…

So, by using the provided equations we can know beforehand and in the worst case how much block size we will be needing to accommodate the new gateways. The foundation will need to do the math as the number of apps and services will depend on the contracts being signed.

Now, if you want the full justification, follow me…

Dataland!

Assumptions

The first step in analyzing the impact of the gateways on block size is to assume that the only component that affects the block size is the number of claims+proofs. This is not exactly true, since other txs also impact block size and there is a minimum size of around 400KB. However, as we showed before, the effect of txs is minimal and the effect of the 400KB minimum is diluted as the block size grows. This means that the assumption is not a strong one and, in the end, we are only being pessimistic, which is good in this context.

So, from now on, the total size of the block is assumed to be directly proportional to the number of claims and proofs observed. Also, the number of relays being claimed plays no role.

Imputing Block Size

The first thing that we need is to analyze how the current gateways use the block space. Using the sample of 500 blocks, we plot the distribution of the block size imputed to each gateway in each block:

As expected the gateway 1 ( “size_gate_1” ) dominates the block size, this is “Grove”, having ~70% of the block. We also note something very important, the distribution of “Grove” is not perfectly normal, it has some degree of bi-modality, while the “Nodies” distribution is much more normal.

Comparing Gateways

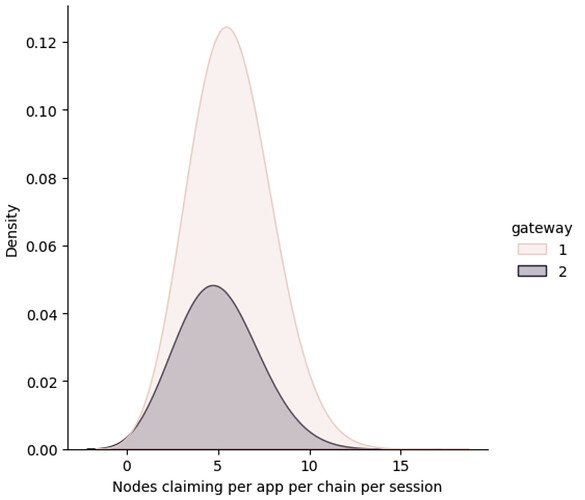

We need to know the cause of this difference in distributions. So the first thing that we can analyze is how many claims each gateway generates each time one of their apps has a session. So we plot those distributions:

We can see that both distributions are very close, which means that there is no explanation here for the observed difference. Both gateways produce almost the same average of 5 ± 2 claims per session. This makes sense: they use almost all nodes in a session most of the time that they decide to use an app. The numbers check out, as only 1/4 of the nodes in a session claim at each block, so 5*4 = 20; add the dispersion to that and we are near the 24 nodes per session.

So, both gateways always trigger the same number of claims+proofs per session, the question is now, do gateways always use their sessions?

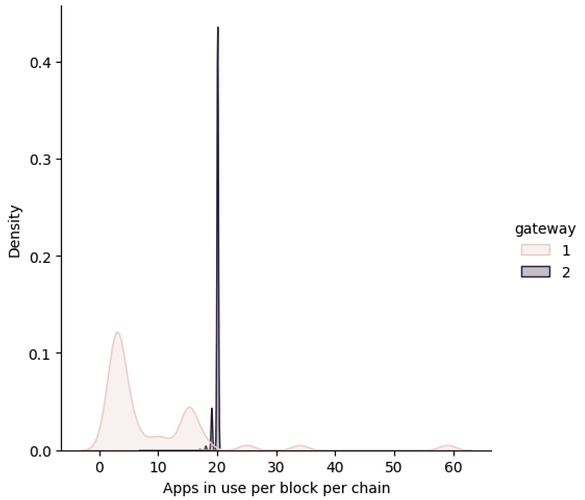

To answer that we will plot the distribution of the number of apps that are being used at every block (which correlates to session in modulo 4).

Voilà! We can see that “Nodies”, gateway 2, almost always uses the session that their 20 apps produce, while “Grove”, gateway 1, is using a different strategy. The strategy used by “Nodies” maximizes the number of claims+proofs produced and also is very stable, we believe that they do a sort of “round robin”, using all their stakes for every chain at every time.

Knowing that “Nodies” is so stable is very important for validating the model being developed. On the other hand, the strategy of “Grove” creates complex block size distributions that would require going much deeper into the data. So, this is our lucky day.

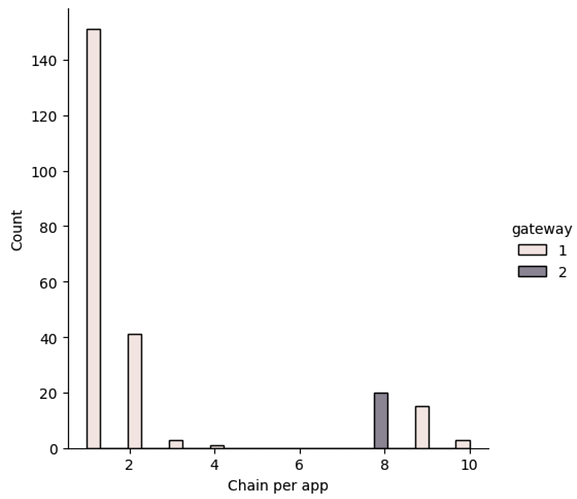

A final, but also important part of this experiment is to test that “Nodies” is always using all their chains. So, we plot that distribution too:

Bingo, “Nodies” has a singleton distribution, they always claim for 8 services, while “Grove” has a more distributed strategy.

The Model

The worst case scenario for block usage is that a gateway uses all their applications, for every service, at every session and triggers claims for each node that is paired with them.

As we have seen, “Nodies” is doing this, they have 20 apps, which appear at almost every block (they are always used) and when they appear they trigger claims for 8 chains (all their chains) and for almost every node in the session (5±2 node claim per block ~> 24 nodes every 4 blocks). Thank you “Nodies”? (I have mixed feelings hahahaha)

The point is that a simple measure of the block size imputed to “Nodies” provides us with a very stable measure of the size of a claim, approx. 2.5 KB with 1% dispersion, and, more importantly, allows us to check our model, which says:

“The maximum number of claims+proofs observed in a block coming from a gateway is equal to the number of apps multiplied by the number of chains that the application serves, multiplied by the number of nodes in the session divided by the number of blocks per session”

Mathematically:

Claims+Proofs = Applications * Services * Nodes per session / 4

then:

Block size = (Claims+Proofs) * size of tx
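A minimal Python sketch of this worst-case model, for illustration only. The function names are hypothetical; the defaults (24 nodes per session, 4 blocks per session, ~2.4944 KB per claim tx) are the values quoted in this thread.

```python
BLOCKS_PER_SESSION = 4  # the "/ 4" in the formula above


def worst_case_claims_per_block(applications, services, nodes_per_session=24):
    """Claims+Proofs per block if every node in every session claims."""
    return applications * services * nodes_per_session / BLOCKS_PER_SESSION


def worst_case_block_size_kb(applications, services,
                             tx_size_kb=2.4944, nodes_per_session=24):
    """Block size in KB under the worst-case model."""
    claims = worst_case_claims_per_block(applications, services, nodes_per_session)
    return claims * tx_size_kb


# "Nodies"-like gateway: 20 applications, 8 services each.
claims = worst_case_claims_per_block(20, 8)
size_kb = worst_case_block_size_kb(20, 8)
print(f"{claims:.0f} claims+proofs per block, {size_kb:.1f} KB")
```

Note that this is an upper bound: with all 24 nodes claiming, each app and service pair contributes 6 claims per block, slightly above the observed mean of 5 ± 2.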

The proof of this was shown at the beginning of this post, where the block size imputed to “Nodies” has a high overlap with the expected size using this simple (worst case scenario) model.

Hi Ben,

Apologies for the delayed response here. The majority of gateway operators are now operating on the default Gateway server that we have developed, which is fully open source and well documented. This allows you and anyone else to audit how gateways are operating in a transparent fashion and allows for extensibility, third party integration, and predictability.

While gateway operators can still modify and deploy their own fork, analytical tools such as Poktscan can use the base implementation as a baseline to determine if others are deviating as well with their own fork (not implying this is a bad thing). Poktscan has already started exposing some of the data we expose in the gateway server, i.e https://poktscan.com/explore?tab=gateways