I agree with that. I offer this as a change that does not require significant overhead or friction. Additional incentivization of validators would likely require a MaxValidators increase anyway to keep a single entity from taking control, since you would then be incentivizing them to increase their stakes and fill the top 1k slots.

I understand this concern and did not intend for this proposal to have this effect; however, this isn’t a complicated issue: do not give a single entity 51%/66% of your mining/voting power.

I am a transparent and candid individual, so I state things how they are. Admittedly, this is sometimes a fault.

+++

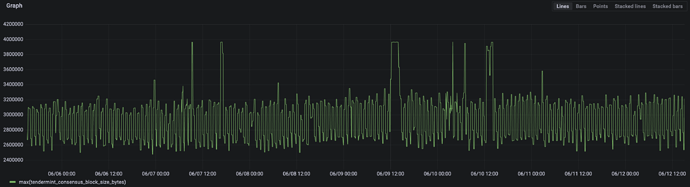

Prior to moving to 1k validators, we had ~15k validators and the chain functioned. Also, as mentioned in my post, the additional chain bloat from having extra validators is insignificant. I could be wrong here, but the core team can comment on block sizes, as I’m not sure where to find that information.

Yes, I do think it would be optimal to slowly raise the validator count over a period of a few days.
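To make the gradual increase concrete, here is a minimal sketch of an evenly spaced ramp. The function name `ramp_schedule`, the four-step duration, and the choice of 5k as the target are illustrative assumptions on my part, not an agreed plan or an existing tool.

```python
def ramp_schedule(start: int, target: int, steps: int) -> list[int]:
    """Return evenly spaced MaxValidators values, one per step,
    ending exactly at `target`. Purely illustrative."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    # Integer arithmetic keeps every intermediate value a whole number.
    return [start + (target - start) * i // steps for i in range(1, steps + 1)]


# Hypothetical example: go from 1k to 5k validators in four daily steps.
for day, value in enumerate(ramp_schedule(1_000, 5_000, 4), start=1):
    print(f"day {day}: MaxValidators = {value}")
```

Each step would still need its own parameter change, so the number of steps is a trade-off between smoothness and coordination effort.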

The issue with having only 1k validators is that it would take tremendous incentivization to create a safe validator set, because scaling horizontally increases a node runner's probability of session selection. Right now, there isn’t an honest economic incentive to take control of the validator set since the rewards are so low; however, if the block proposer reward were increased, trying to take all of the validator slots would become an economically viable strategy. This is not a secure solution. Even with 1k validators, it’s extremely easy for a single provider or entity to take control of all the slots, even with competition. Increasing the block proposer reward further incentivizes a single entity to take control.

The problem with increasing the stake size is the amount of friction it causes for people. They would have to unstake and wait 21 days before being able to consolidate. If we were to offer instant unstaking, this would require significant coordination.

This exists currently

In summary,

I recognize the flaws with how this issue was presented, and I plan on being significantly more mindful moving forward. I do think, though, that regardless of the disclosure, we should stay focused on finding a solution and fixing this issue.

I don’t think that increasing the proposer allocation is a catch-all solution, nor is just increasing MaxValidators. Both should probably happen in tandem in order to create a safe validator set. MaxValidators is just easier to increase since it does not involve a complicated economic discussion, and it significantly bolsters economic security. One would need ~50m POKT to take control of a 5k validator set. This is a multi-faceted problem, and this is one of many potential solutions. I find it to be the smoothest, and something that we will likely need to do anyway after discussions of increasing the block proposer reward.
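As a rough back-of-the-envelope check on that order of magnitude, the sketch below multiplies the number of slots needed for a 51% or 66% share by a per-slot stake. The 15,000 POKT per-slot figure and the function name `stake_to_control` are assumptions for illustration; the real cost depends on what existing validators have staked, since an attacker has to outstake them to enter the set.

```python
def stake_to_control(max_validators: int, stake_per_slot: int, threshold_pct: int) -> int:
    """Estimate the POKT needed to hold `threshold_pct` percent of validator slots,
    assuming every slot can be won with `stake_per_slot` (a simplification)."""
    # Ceiling division in integers: slots needed to reach the threshold.
    slots_needed = (max_validators * threshold_pct + 99) // 100
    return slots_needed * stake_per_slot


ASSUMED_STAKE_PER_SLOT = 15_000  # assumption, not a figure from this thread

for set_size in (1_000, 5_000):
    for pct in (51, 66):
        cost = stake_to_control(set_size, ASSUMED_STAKE_PER_SLOT, pct)
        print(f"{set_size} validators, {pct}% of slots: {cost:,} POKT")
```

Under these assumptions, a 66% share of a 5k set comes to 49.5m POKT, compared with 9.9m POKT for a 1k set, which is the scaling effect the MaxValidators increase relies on.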