You are totally right. A bounty program as part of the process makes a lot of sense.

Great proposal. I agree with the points outlined and think this is a change we should make.

On the community side of things, I think we should end these behind-closed-doors discussions, as they are detrimental to the collaborative and decentralized culture we are trying to build. The community needs the core team’s collaboration, but the core team can equally benefit from collaborating with community members. How else can talented engineers break into the space and offer their skill set if we’re constantly holding secret talks behind closed doors with only a select few? This type of behavior is completely unacceptable, and we should break that precedent if we are to mature and evolve. The OP has outlined the issue, proposed a GOOD solution, and now it’s time to discuss and vote. What other process could you possibly want?

And if we are to follow a different process, will the information be made public? Will this purely be private submissions to a centralized team? How will other people outside of that space know about the problem and offer their knowledge?

I think the process of

identify issue → if you have a solution, post it or collab with other people to come up with a solution then post → let everyone discuss → vote

is exactly what we want and what was done here.

I’m in two minds about this proposal, and that is not because I run Liquify. I for one am shocked at the numbers and surprised more runners aren’t above the 1000 threshold.


5xing the count will add significant network bloat and extra load on validators. Personally, we have seen that running a node above the threshold puts significantly more load on it than a standard relayer node; this load will only increase with a larger count, and it will affect more nodes as the validator set grows.

I also totally agree with what @shane said, this shouldn’t be public on an open forum.

Maybe we can compromise with a 2-2.5x increase plus a means to reduce stake. I’m happy to give up some validator seats (though a means to lower stake probably has knock-on effects elsewhere?), but this may mitigate the issue until v1 is ready…

This should roughly double the cost of an attack and add further decentralization.

Alternatively, we could increase the threshold over time; I’m really worried that 5xing it out of the blue will have large implications.

Good points.

Do you have any data you could share to give everyone a better idea of how much additional load a validator takes on?

Thanks for the proposal @addison

Very valid concerns.

I agree with the proposal to increase network security by a material amount.

However, rather than increasing the validator set size, I agree with the comments that instead propose increasing the rewards to validators and letting the market dictate the minimum stake for validators.

That said, it would be best IMO to do so only in the knowledge that the minimum stake per node is already sufficiently high. For example, 15k POKT per node was always a very low figure. Now, I think it’s drastically too low given the importance of the network and the network overhead caused by incentivising nodes to scale horizontally. So why not set the per-node stake 10x higher (150k POKT)? Or something in between, e.g. 100k POKT per node?

My ideal proposal would be as follows:

- increase minimum stake per Pocket node to 100k pokt

- increase validator share of relay revenue to 5% (this number is somewhat arbitrary, but we should aim to incentivise the most technically minded infrastructure providers in the network to participate as validators)

- consider - in light of the above - whether it is still beneficial to increase the validator set to a number > 1,000. Perhaps we need a community call or another forum to discuss this point?

- in an ideal world, implement an “edit stake” function to allow all node runners to increase their stake without having to unstake first. Pocket’s economics are complex, and I expect that this won’t be the last time that the minimum POKT per node is changed, so having a straightforward method to edit stake would be valuable IMO.

- provide all node infrastructure providers sufficient time - 6-8 weeks? - to coordinate with their customers about 1) the impact of increasing the minimum amount staked per node and 2) the game theory around optimising stake per node to be in the validator set of X validators
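To make the horizontal-scaling and stake-per-node trade-off concrete, here is a minimal sketch. It assumes, hypothetically, that a node enters the validator set only by staking strictly more than the current cutoff (the lowest stake still inside the top-N set), and it ignores how other runners would respond. The function names, budget and cutoff figures are made up for illustration.

```python
def nodes_for_budget(budget_pokt: int, min_stake_pokt: int) -> int:
    """Number of nodes a fixed POKT budget can stake at the minimum stake."""
    return budget_pokt // min_stake_pokt


def validator_nodes_for_budget(budget_pokt: int, min_stake_pokt: int, cutoff_stake_pokt: int) -> int:
    """Number of nodes from the budget that could enter a top-N validator set.

    Hypothetical rule: each node must stake at least the minimum AND strictly
    more than the current cutoff stake to displace the lowest validator.
    """
    per_node = max(min_stake_pokt, cutoff_stake_pokt + 1)
    return budget_pokt // per_node


budget = 1_500_000   # hypothetical operator budget in POKT
cutoff = 60_000      # hypothetical current validator cutoff in POKT
for min_stake in (15_000, 100_000):
    print(min_stake,
          nodes_for_budget(budget, min_stake),
          validator_nodes_for_budget(budget, min_stake, cutoff))
```

With these made-up numbers, a 15k minimum lets the budget run 100 nodes (24 of them able to enter the validator set), while a 100k minimum caps it at 15 nodes, all above the cutoff; that consolidation is the effect a higher minimum stake is meant to produce.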

Keen to hear everyone’s thoughts

I agree with that. I offer this as a change that does not require significant overhead or friction. Additional incentivization of validators would likely require a MaxValidators increase anyway to prevent a single entity from taking control, since you’d now be incentivizing entities to increase their stakes and fill the top 1k slots.

I understand this concern and did not intend for this proposal to have this effect; however, this isn’t a complicated issue: do not give a single entity 51%/66% of your mining/voting power.

I am a transparent and candid individual, so I state things as they are; admittedly, this is sometimes a fault.


Prior to moving to 1k validators, we had ~15k validators and the chain functioned. Also, as mentioned in my post, the additional chain bloat from having extra validators is insignificant. I could be wrong here, but the core team can comment on block sizes, as I’m not sure where to find that information.

Yes, I do think it would be optimal to slowly raise the validator count over a period of a few days.

The issue with having only 1k validators is that it would take tremendous incentivization to create a safe validator set, because node runners scale horizontally to increase their probability of session selection. Right now, there isn’t an honest economic incentive to take control of the validator set, since the rewards are so low; however, if the block proposer reward were increased, trying to take all of the validator slots would become an economically viable strategy. This is not a secure solution. Even with 1k validators, it’s extremely easy for a single provider or entity to take control of all the slots, even with other competition. Increasing the block proposer reward further incentivizes a single entity to take control.

The problem with increasing the stake size is the friction it causes for people: they would have to unstake and wait 21 days before being able to consolidate. Offering instant unstaking instead would require significant coordination.

This exists currently.

In summary,

I recognize the flaws in how this issue was presented, and I plan to be significantly more mindful moving forward. I do think, though, that regardless of the disclosure, we should stay focused on finding a solution and fixing this issue.

I don’t think that increasing the proposer allocation is a catch-all solution, nor is just increasing MaxValidators. Both should probably happen in tandem to create a safe validator set. MaxValidators is simply easier to increase, since it does not involve a complicated economic discussion and it significantly bolsters economic security: one would need ~50m POKT to take control of a 5k validator set. This is a multi-faceted problem, and this is one of many potential solutions. I find it to be the smoothest, and it is something we will likely need to do anyway after discussions of increasing the block proposer reward.
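A rough way to sanity-check cost-of-attack figures like the ~50m POKT above is to brute-force the cheapest way to gain more than 2/3 of the validator set’s voting power. This is a simplified model, not Pocket’s consensus code: it assumes the validator set is the top `max_validators` nodes by stake, voting power is proportional to stake, the attacker splits stake evenly across k new nodes (each strictly outranking the honest nodes it displaces), and honest stakes stay fixed. `min_takeover_cost` is a hypothetical helper.

```python
from itertools import accumulate


def min_takeover_cost(honest_stakes, max_validators, min_stake=1):
    """Cheapest (total_cost, k, per_node_stake) giving an attacker > 2/3 of voting power.

    Simplified model: validator set = top `max_validators` stakes, voting power
    proportional to stake, attacker runs k equal-stake nodes, ties go to honest nodes.
    """
    ranked = sorted(honest_stakes, reverse=True)
    prefix = [0, *accumulate(ranked)]  # prefix[i] = total stake of the i largest honest nodes
    best = None
    for k in range(1, max_validators + 1):
        seats_left = max_validators - k                      # honest seats remaining
        honest_power = prefix[min(seats_left, len(ranked))]  # their combined stake
        # Attacker power A must exceed 2/3 of (A + H), i.e. k * s > 2 * H.
        per_node = 2 * honest_power // k + 1
        # Each attacker node must also outrank the strongest honest node it pushes out.
        if len(ranked) > seats_left:
            per_node = max(per_node, ranked[seats_left] + 1)
        per_node = max(per_node, min_stake)
        cost = k * per_node
        if best is None or cost < best[0]:
            best = (cost, k, per_node)
    return best


# Hypothetical network: 20,000 honest nodes, all staked at 15k POKT.
honest = [15_000] * 20_000
for seats in (1_000, 5_000):
    print(seats, min_takeover_cost(honest, seats))
```

With that hypothetical uniform stake distribution, the sketch returns roughly 10m POKT for a 1,000-seat set and roughly 50m POKT for a 5,000-seat set, so under these assumptions the cost grows about linearly with MaxValidators. Real stake distributions are far from uniform, so actual figures would need live stake data.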

I discussed this proposal with the core devs and their main concerns were the possibility of nodes falling into the top 5k who are optimized for servicing and thus running non-validator-friendly setups.

Therefore, it’s recommended that we do a phased implementation, with advance notice in each phase to the node runners who would become validators when the parameter changes.

I would suggest that we increase by 50% each time, since 67% of validator power is required to keep the chain moving. This would make the increments 1500, 2250, 3375, and 5000.
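The phased schedule can be generated mechanically. This sketch rounds each phase down and caps the last phase at the target (3375 × 1.5 would otherwise give 5062), and it reports the share of seats held by the previous set, which is exactly 2/3 for a 50% step. Because Tendermint needs strictly more than 2/3 of voting power and voting power is stake-weighted, the actual safety margin presumably comes from incumbents holding more stake than the lower-staked newcomers. The function names are illustrative.

```python
import math


def phased_schedule(start: int, target: int, factor: float) -> list[int]:
    """Validator counts for each phase, growing by `factor` and capped at `target`."""
    if factor <= 1:
        raise ValueError("factor must be greater than 1")
    phases = []
    current = start
    while current < target:
        # Round down, but always make progress so the loop terminates.
        current = min(target, max(current + 1, math.floor(current * factor)))
        phases.append(current)
    return phases


def incumbent_seat_share(previous: int, new: int) -> float:
    """Share of seats in the new set held by the previous set (by count, not stake)."""
    return previous / new


schedule = phased_schedule(1000, 5000, 1.5)
print(schedule)  # [1500, 2250, 3375, 5000]
previous = 1000
for count in schedule:
    print(count, round(incumbent_seat_share(previous, count), 3))
    previous = count
```

The same function with `factor=1.25` gives a more gradual ramp of eight phases (1250, 1562, 1952, 2440, 3050, 3812, 4765, 5000) instead of four.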

If this proposal passes, I would suggest that the Foundation can coordinate these phased parameter changes and take as much time as needed to communicate to the validators and confirm that consensus isn’t affected with each change.

If you agree @addison, please add this to the proposal text under an Implementation section.

I also support the idea of a phased approach with advance notice in each phase; it’s a fantastic idea to minimize impact.

I am ready to vote on this proposal as soon as possible. This proposal is the lowest risk to fast track while still improving the security of the network by magnitudes.

tl;dr I support this proposal provided we adopt @JackALaing’s phased approach, but I was wondering if @addison or others could collect additional stats on the recent block size distribution.

Potential Cons

In addition to what Jack mentioned w.r.t. Node Runners, the issues I personally see (which have mostly been covered by others) are:

- Network Congestion

- Block Bloat

Network Congestion

I haven’t delved deep or benchmarked Tendermint’s gossip, but in 2018, it was determined to have cubic complexity. [1] Even with the refactor [2] that they completed in Q3 2021 [3], I haven’t been able to find benchmarks to show what the new gossip complexity is.

With that being said, since the range we’re dealing with is quite small (1000-5000), I don’t think we need a deep investigation into the benchmarking difference, as long as we keep an eye on the network during the phased increase.
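To put the 1000-5000 range in perspective, the sketch below computes how much gossip cost would grow if it scaled polynomially in validator count, using the cubic complexity reported in [1] and a quadratic case for comparison. These exponents are assumptions about an older Tendermint gossip layer, not benchmarks of Pocket’s network.

```python
def relative_gossip_cost(old_validators: int, new_validators: int, exponent: int = 3) -> float:
    """Growth factor in gossip cost if it scales as n**exponent (assumed model)."""
    return (new_validators / old_validators) ** exponent


print(relative_gossip_cost(1000, 5000))     # 125.0  (cubic, direct 5x jump)
print(relative_gossip_cost(1000, 5000, 2))  # 25.0   (quadratic, direct 5x jump)
print(relative_gossip_cost(1000, 1500))     # 3.375  (cubic, one 50% phase)
```

Under the cubic assumption, a direct 5x jump multiplies the cost by 125, while each 50% phase multiplies it by about 3.4, which gives room to observe the network between phases.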

Block Bloat

Since Tendermint doesn’t do any sort of signature aggregation but simply collects signatures into a list, I’m less worried about chain bloat on disk for a single node and more so about the proportion of space taken up in a single block and the size of the messages being sent around.
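A back-of-the-envelope estimate of how much of a block the commit signatures occupy is sketched below. The per-signature size is an assumption: an ed25519 signature is 64 bytes and a validator address is 20 bytes, and ~100 bytes per entry is a rough allowance for timestamp, flag and encoding overhead; the exact figure depends on the Tendermint version Pocket’s fork is based on. The 4MB max block size is taken as 4 MiB.

```python
# Assumption: ~100 bytes per commit signature entry (64-byte ed25519 signature,
# 20-byte validator address, plus timestamp/flag/encoding overhead).
BYTES_PER_COMMIT_SIG = 100
# Assumption: the 4MB max block size taken as 4 MiB.
MAX_BLOCK_BYTES = 4 * 1024 * 1024


def commit_bytes(num_validators: int, bytes_per_sig: int = BYTES_PER_COMMIT_SIG) -> int:
    """Approximate bytes taken by one signature per validator in a block's commit."""
    return num_validators * bytes_per_sig


def commit_share_of_block(num_validators: int) -> float:
    """Fraction of the max block size consumed by the commit signatures alone."""
    return commit_bytes(num_validators) / MAX_BLOCK_BYTES


for n in (1000, 2250, 5000):
    print(n, commit_bytes(n), f"{commit_share_of_block(n):.1%}")
```

Under these assumptions the signatures grow from about 2.4% of a maximum-size block at 1,000 validators to about 11.9% at 5,000, which matters most if blocks are already close to the limit.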

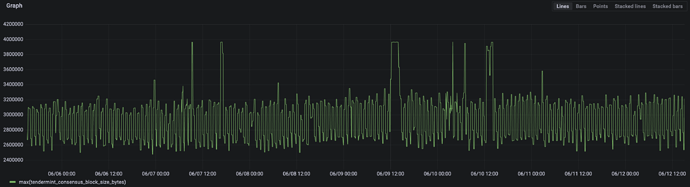

@addison / @pierre / @poktblade Given that the max block size is 4MB, have any of you looked at the distribution of the block sizes recently to see how close we’d be to the limit?
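One way to answer the distribution question is to summarise a window of recent block sizes against the limit. This is a sketch: `sizes_bytes` is assumed to be a list of block sizes already collected from a node (fetching them isn’t shown), the 90% “near the limit” threshold is arbitrary, the 4MB limit is taken as 4 MiB, and the sample data is made up.

```python
import statistics

MAX_BLOCK_BYTES = 4 * 1024 * 1024  # assumption: 4MB read as 4 MiB


def block_size_summary(sizes_bytes: list[int], near: float = 0.9) -> dict[str, float]:
    """Percentiles of recent block sizes and the share of blocks near the max size."""
    cuts = statistics.quantiles(sizes_bytes, n=100)  # 99 cut points; cuts[k - 1] ~ k-th percentile
    near_limit = sum(1 for size in sizes_bytes if size >= near * MAX_BLOCK_BYTES)
    return {
        "p50": cuts[49],
        "p95": cuts[94],
        "p99": cuts[98],
        "max": max(sizes_bytes),
        "share_near_limit": near_limit / len(sizes_bytes),
    }


# Made-up sample: 90 mid-sized blocks and 10 full ones.
sample = [1_000_000] * 90 + [MAX_BLOCK_BYTES] * 10
print(block_size_summary(sample))
```

Run over a week of real block sizes, `share_near_limit` would show how often blocks already approach the cap before any extra signatures are added.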

Pros - Network Security

In addition to this, it’s worth noting that some of the other ongoing economic proposals may result in a reduction of this value back to 1,000 (or somewhere in that range), but still require more discussion and evaluation. For that reason, this is a quick and simple approach to increasing the network’s security.

[1] arXiv:1809.09858, https://arxiv.org/pdf/1809.09858.pdf

[2] tendermint/tendermint on GitHub, adr-062-p2p-architecture.md (commit 7172862786cabaeb8ac06a6d646955a2faa6da31)

[3] Tendermint Core, Tendermint Roadmap

Thanks Jack for the insight and for looking into this. I definitely think we should do that.

I will add it.

No. I would need some insight from the v0 team to figure out how to do this. I will get that from the v0 contributors.

I was planning on waiting to see what happens with GV etc before putting this to a vote. I think we will need to increase the parameter in any case, but I think we should see what happens first before determining what value we pick. I am now thinking though that we should just increase it to something in the interim to increase security and reevaluate after other proposals are passed.

Maybe we can increase the validator count by 4k in the next 4-6 weeks to increase our security while giving us optionality in the midst of GV etc?

Thoughts? @Olshansky @pierre @poktblade @JackALaing

Appreciate the research and thoughtful response Daniel.

Here’s a metric from live nodes; this graph covers the last 7 days. Seems like we’ve hit the limit a few times.

IMO it doesn’t make sense to vote on this proposal until there has been a chance to see what validator stakes change to with the implementation of PUP-19.

If it doesn’t strain the chain, it doesn’t hurt to have extra consensus security as well as expanding the number of nodes that can participate in the new 5% validator rewards.

Here’s the vote link btw: Snapshot

The vote was rescinded by Addison to allow for more consensus building on a parameter value.

This is not the right time for this type of change.

The 1,000 validator limit was put into place as part of the V0 guardrails.

Those guardrails are why the network is still functioning.

There has been no evidence presented on this thread (or anywhere that I’ve seen) to support the idea that a 5x increase in successful p2p block signing and a 5x increase in the number of signatures per block won’t “strain the chain”. We’re talking about adding 4,000 signatures to every block!

IMO this is reckless and should be voted down at this time. Then, after some research and some time for PUP-19 and PIP-22 to be absorbed, it could be revisited and perhaps implemented in a slowly scaling manner, e.g. 1.25x.