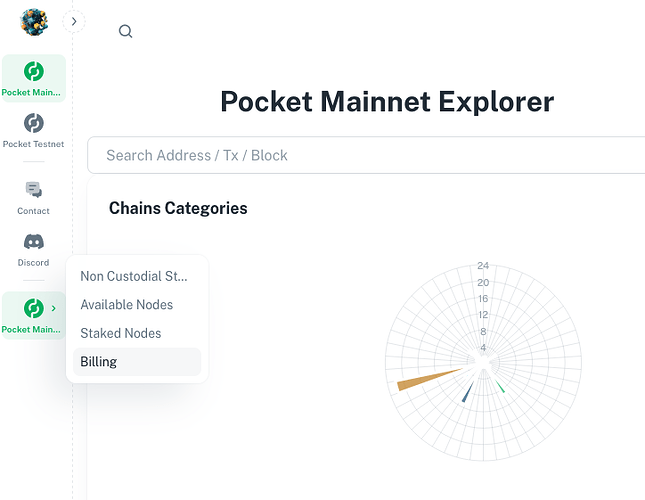

Socket Overview

This socket is initiated by cryptonode.tools to build, improve, deploy, and maintain a blockchain indexer and explorer for the POKT network. With the recent decline in reliable indexers, there's an urgent need for multiple reliable explorers to be available and accurate at all times.* While the primary and only remaining active explorer is a great product, there have been inconsistencies in the data it presents. When that happens, even if rarely, it sparks fear among clients and users about the health of the ecosystem and the status of their assets. Furthermore, every service in a decentralized ecosystem needs resilience, and many users prefer to have an alternative as a sanity check even when the main option is perfect.

Key Highlights and Challenges:

- Complexity: Building and maintaining an explorer isn't just about writing code and open sourcing a repo for others to download or deploy, which takes its own time and effort. It's also about ensuring uptime for multiple services, handling many concurrent requests during indexing (unless you want it to take weeks or months), staying responsive for frontend users (frontend-to-backend-to-DB latency and concurrency), and verifying data accuracy. The infrastructure cost alone is not insignificant, and the stack has multiple components to consider (a responsive website, a backend API, multiple replicated databases measured in terabytes, likely a caching layer, healthy archival nodes to sync from, monitoring and logging, etc.). I'm still an indie node runner and don't outsource anything, and each component requires attention, energy, and time to do well.

- Stack & Architecture: My solution will use nestjs for the backend indexer scripts, logging, etc., and possibly to build an API as well (if not postgrest on top of the postgres DB), with data pulled from the pokt RPC API on my own pokt nodes.** My plan is to initially replicate the pokt.watch svelte frontend using nextjs and mui as part of my cryptonode.tools website, with the backend built in nestjs/typescript, adding improvements to logging, error handling, and data verification and using the simple poktwatch python indexer as a reference.***

- Self-hosted Backend API: Hosting full archival chains and multiple large databases for indexing is expensive, and infeasible with cloud hosting if I hope to maintain it cost-effectively over the long term, so multi-region logical replication on bare metal is going to be fun.

- Selective Proprietariness: While I believe in community and collaboration, I am still just an individual; certain components related to deployment and the frontend website, especially those critical for performance, security, and multi-region deployment, will remain proprietary.
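To illustrate the concurrency point from the Complexity bullet, here is a minimal TypeScript sketch (matching the planned nestjs/typescript backend) of backfilling a range of block heights with a bounded number of in-flight requests and per-height retries. It is not the indexer's actual code: `indexRange`, `FetchBlock`, and the `IndexedBlock` shape are hypothetical, and `fetchBlock` stands in for whatever call retrieves one block from your own node's RPC endpoint.

```typescript
// Hypothetical, minimal shape of one indexed block; a real node response carries far more fields.
interface IndexedBlock {
  height: number;
  txCount: number;
}

// Hypothetical fetcher: retrieves one block by height, e.g. from your own node's RPC endpoint.
type FetchBlock = (height: number) => Promise<IndexedBlock>;

// Backfill every height in [start, end] (inclusive) with at most `concurrency`
// requests in flight, retrying each height up to `retries` extra times.
async function indexRange(
  start: number,
  end: number,
  fetchBlock: FetchBlock,
  concurrency = 8,
  retries = 3,
): Promise<IndexedBlock[]> {
  const results: IndexedBlock[] = [];
  let next = start; // next unclaimed height, shared by all workers

  async function fetchWithRetry(height: number): Promise<IndexedBlock> {
    let lastError: unknown;
    for (let attempt = 0; attempt <= retries; attempt++) {
      try {
        return await fetchBlock(height);
      } catch (err) {
        lastError = err; // in practice you would likely log this and back off
      }
    }
    throw new Error(`height ${height} failed after ${retries + 1} attempts: ${String(lastError)}`);
  }

  // Each worker claims the next height synchronously, so no two workers share one.
  async function worker(): Promise<void> {
    while (next <= end) {
      const height = next++;
      results.push(await fetchWithRetry(height));
    }
  }

  const workerCount = Math.max(1, concurrency);
  await Promise.all(Array.from({ length: workerCount }, () => worker()));

  // Workers finish out of order; sort so rows can be written in height order.
  return results.sort((a, b) => a.height - b.height);
}
```

For example, `indexRange(1, 20, fetchBlock, 4)` fetches heights 1 through 20 with at most four requests outstanding at any moment. A small worker pool like this keeps throughput up without flooding a single archival node; raising `concurrency` helps only until the node or the network becomes the bottleneck.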

Type of Socket:

Initiative: $3K, recurring/open, reviewed monthly. The costs aren’t just about development; they also cover infrastructure, data hosting, maintenance, and continuous improvements.

Commitment to Transparency:

I commit to regular updates and will self-report my progress monthly. The backend NestJS indexer service will be open source.

Link to Work:

Wallet:

5f2a1121cd54c25f2622b5e936e9e0159c6a48d5

* Thunderhead ended support for pokt.watch but left the site running, so some people still use it despite missing transactions and incorrect values, and some may not notice for a while. PNI/Grove also scuttled the main pokt.explorer to save costs and shed responsibility, leaving the ecosystem vulnerable in the meantime.

** pypokt is broken and a dependency trap, and fixing it feels like a waste of time imo, since it is just a wrapper around the pokt RPC API anyway. The same goes for pocketjs: it is mostly usable, but it still has long-standing bugs that people know about and simply work around. For this reason I started building my indexer directly against the pokt RPC API instead of using pocketjs or pypokt, and against my own nodes, since using the Portal RPC exceeds the free tier quickly, aside from the other challenges of using it for indexing.

*** You may be familiar with the public pokt.watch indexer repos from th (Thunderhead) and some forks, and think I can just copy-paste them. Neither is usable outright. Like some other repos in poktland, the public code has been left broken or buggy and not ready for production, which slows down anyone trying to use it, and that kind of thing is a HUGE time sink for someone like me. Instead, they serve me as a great reference for building my own version, which lets me make improvements along the way.