Thanks @Andy-Liquify , this seems to be a very elegant solution! If I’m understanding correctly, node runners will be incentivised to consolidate stake up to 75k (configurable via vote) to lower operating cost. Minimum stake is not changing, so smaller node runners are not negatively affected other than being at an operating cost disadvantage due to being rewarded a lower reward rate. I think everyone understands there are economies of scale, and I think the benefits far, far outweigh any disadvantages.

As a follow-up to potentially address the cost disadvantage smaller node runners could face, the ValidatorStakeWeightMultiplier value could be set lower than 5, somewhere between 4 and 4.6 for example. Rewards would then not scale linearly with stake amount, which would account in some fashion for the fact that nodes consolidated to less than ServicerStakeWeightCeiling are at a cost disadvantage. It would level the playing field somewhat.

Market conditions vary a lot, so selecting the ValidatorStakeWeightMultiplier value could be more arbitrary than based on cost/profit ratios.

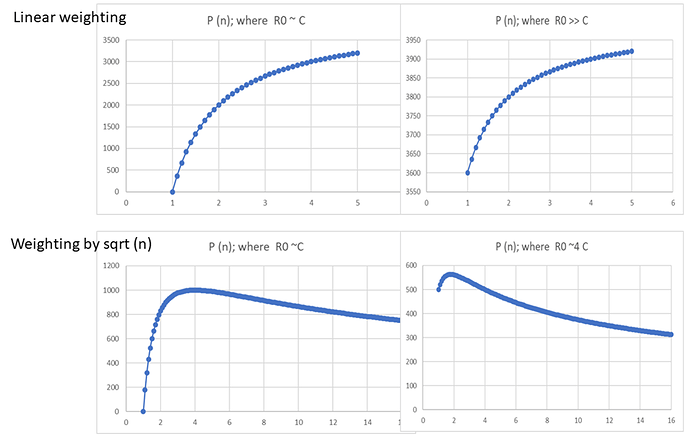

Thanks @Andy-Liquify for your responses. I spent the night working out the math. Bottom line: linear weighting drives the whole system to the cap, which may be a great short-term Band-Aid, but what about when price improves and we need to add nodes fast to handle a growing relay count? The disincentive to small-staked nodes persists under all price and growth scenarios and is not sensitive to parameter settings. A simple fix would be to go to nonlinear weighting. For example, weighting according to the sqrt of FLOOR rather than linearly with FLOOR is a very simple and elegant tweak that solves most of the issues: (1) weighting by sqrt of FLOOR (or perhaps a variable exponent less than 1) is naturally deflationary, and (2) most importantly, it creates a natural balance between small- and large-staked nodes that skews toward large-staked nodes during challenging times like now, when fewer nodes are needed, and deftly shifts to favor smaller-staked nodes in economic environments in which node expansion is needed. Is it better to show the math here (i.e., are you open to tweaking this PIP to incorporate a parameter allowing nonlinear weighting if the math proves it is needed), or is it better for me to open a new PIP advocating nonlinear-weighted staking?

Fascinating idea! Yes, do share the math here if you can. I’d like to look into modeling out the economic implications.

Also, feel free to reach out to me in Discord (shane#0809) if there is more you’d like to share that could help with modeling out the economics.

OK, I will post it here then. It will take me about an hour to finish it up, and then I will post.

Here is the math I promised on why nonlinear weighting (exponent less than 1) works quite well while linear weighting does not

Current state of affairs:

Let R0 be node rewards under the current implementation. For the sake of argument, let’s assume an average value that happens to be around 40 POKT in today’s environment.

Let C be the average daily operating cost of a node priced in POKT; in today’s environment this is very close to R0; i.e., around 40 POKT. Since C is more or less a fixed fiat price, the main sensitivity of C is to the price of POKT. When POKT is $1 C is approx. 5; if POKT were to drop to 5 cents, C may be closer to 100

Let k be the number of nodes a node runner (pool/DAO/whale etc.) runs. Let’s assume k is large compared to 5 (so that they can consolidate into larger nodes if that were made possible); e.g., k=100 would be someone with 1.5M POKT running 100 nodes.

Let P be the total payout to the node runner:

P = k*(R0 – C)

which clearly is untenable when C>=R0 as in today’s environment

State of affairs if PIP-22 gets passed as-is (stated in simplified terms):

R = R0 * A * B *min(n,m)

where A is a DAO-controlled parameter (currently suggested to be set to 1/5 or slightly larger),

B is an unknown network multiplier that depends on how effective PIP-22 is in reducing node count,

n is the quantized multiple of 15k POKT that an operator wishes to stake to a node,

and m is a DAO-controlled parameter (currently suggested to be set to 5)

total payout to node runner becomes:

P = k/n * (R-C) = k/n * (R0 * A * B * min (n,m) – C)

The continuation of the math will be easier to show if we take the cap out of the equation and just remember that the cap exists and apply it in the end. Thus, for n<=m:

P = k/n * (R0 * A * B * n – C)

= k * R0 * A * B - k * C/n

A node runner is motivated to maximize their payout and will adjust n accordingly. To find the max payout as a function of n, take the first derivative of P(n) and find where this crosses 0 (if at all)

dP/dn = k*C / (n^2) == 0 which implies n = infinity (but capped of course at m)

Or to show this visually instead of mathematically, this is payout as a function of n for challenging times like now where node reduction is needed compared to times where POKT is flying high and node growth is needed

(See graphic at the end)

This means that no matter how the DAO tweaks parameters, no matter the network effect caused by consolidation, and no matter the economic environment or the need to add nodes, node runners will never be incentivized to run a node at anything less than the max POKT allowed. Great for times like now, when node reduction is warranted; horrible in the long term, when node growth is needed.
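To make the capped linear case concrete, here is a minimal Python sketch of P(n) = k/n * (R0 * A * B * min(n,m) – C). The default values (R0=40, C=40, A=0.2, B=5, m=5, k=100) are the illustrative ones used in the spreadsheet later in this thread, not measured network figures, and the function name is only for illustration.

```python
def payout_linear(n, k=100, r0=40.0, c=40.0, a=0.2, b=5.0, m=5):
    """Total daily payout P(n) under capped linear weighting.

    P = k/n * (R0 * A * B * min(n, m) - C)
    All defaults are illustrative values, not network data.
    """
    reward_per_node = r0 * a * b * min(n, m)
    return k / n * (reward_per_node - c)


# Payout for each whole multiple of 15k POKT, going one step past the cap.
payouts = {n: payout_linear(n) for n in range(1, 7)}
for n, p in payouts.items():
    print(f"n={n}: P={p:8.1f} POKT/day")

# The payout keeps rising until the cap m and falls once n exceeds it.
best_n = max(payouts, key=payouts.get)
print("best n:", best_n)
```

With these inputs the payout rises at every step up to n = m and drops beyond it, which is the "everyone goes to the cap" behavior described above.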

Now compare this to what happens if we weight by the sqrt of n rather than linear with n (ignoring for the moment the possible setting of a cap).

R = R0 * A * B *sqrt(n)

where A,B and n have the same meaning as in the above discussion

P = k/n * (R – C)

= k/n * (R0 * A * B * sqrt(n) – C)

= k * R0 * A * B/sqrt(n) – k * C/n

Again, a node runner is motivated to maximize their payout and will adjust n accordingly. To find the max payout as a function of n, take the first derivative of P(n) and find where this crosses 0 (if at all)

dP/dn = k/(n^2) * (C – R0 * A * B * sqrt(n)/2) == 0

so the n for max payout is at n = 4 * (C/(R0 * A * B))^2

For given averages for R0, C and B, the DAO can now easily skew node-runner behavior toward running max-sized nodes, min-sized nodes or anything in between. 0.25 is a suggested initial value for A. This should cause most operators who can, to consolidate toward n=4 (60k POKT staked per node)
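As a quick sanity check on the sqrt-weighted optimum, the sketch below compares the closed form n = 4 * (C/(R0 * A * B))^2 against a brute-force search over P(n). It assumes R0 = C = 40 and A = 0.25 as in the text; B = 4 is my own assumption, chosen because it is the value that puts the optimum at n = 4 for those inputs.

```python
import math


def payout_sqrt(n, k=100, r0=40.0, c=40.0, a=0.25, b=4.0):
    """P = k/n * (R0 * A * B * sqrt(n) - C). B = 4 is an assumed value."""
    return k / n * (r0 * a * b * math.sqrt(n) - c)


def optimal_n_sqrt(r0=40.0, c=40.0, a=0.25, b=4.0):
    """Closed-form maximum: n* = 4 * (C / (R0 * A * B))^2."""
    return 4.0 * (c / (r0 * a * b)) ** 2


n_star = optimal_n_sqrt()

# Brute-force check on a grid from n = 1.00 to n = 15.00 in steps of 0.01.
grid = [1 + i / 100 for i in range(0, 1401)]
n_grid = max(grid, key=payout_sqrt)

print("closed form:", n_star)
print("grid search:", n_grid)
```

Changing `a` or `c` in the defaults shows how the DAO-controlled parameter or the operating cost moves the sweet spot.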

The system is self-correcting with respect to the network effects of consolidation (unknown variable B). At the start, when few nodes are consolidated, node runners are incentivized to consolidate. If node runners consolidate too strongly (say they start staking 200k POKT per node), B shoots past target and the yield curve adjusts to strongly incentivize destaking back down to about 60k POKT per node and opening more nodes back up.

This also exhibits correct behavior with respect to economic conditions. Suppose POKT price drops to $0.05 to $0.10 (C>R0); this incentivizes node runners to consolidate to even greater levels (max payout at around n=7, ~105k POKT staked per node). At a POKT price of $0.40 (C<R0), the sweet spot drops from n=4 to between 2 and 3. If POKT price jumps up to $2 (C<<R0) because relays are shooting through the roof, the sweet spot drops to the n=1 to 2 range.
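One way to read these scenarios is to invert the optimum n = 4 * (C/(R0 * A * B))^2 and ask what cost-to-reward ratio C/(R0 * A * B) corresponds to each sweet spot. The sketch below does only that inversion; it does not try to reproduce the price-to-cost mapping behind the figures quoted above, and the function name is hypothetical.

```python
import math


def cost_ratio_for_sweet_spot(n_star):
    """Invert n* = 4 * (C / R_eff)^2, where R_eff = R0 * A * B.

    Returns the ratio C / R_eff at which n_star is the payout-maximizing n.
    """
    return math.sqrt(n_star) / 2.0


for n_star in (1, 2, 3, 4, 7):
    ratio = cost_ratio_for_sweet_spot(n_star)
    print(f"sweet spot n={n_star}: C / (R0*A*B) = {ratio:.2f}")
```

A ratio of 1 (cost equal to the effective base reward) lands on n = 4; costs above that push the sweet spot higher, and costs below it pull the sweet spot toward n = 1.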

Note this example only shows the effect of weighting by sqrt(n). It is probably better to weight by n^p, where p is a DAO-controlled parameter between 0 and 1. I have to model further, but I think p closer to 2/3 may give more desirable results (in terms of curve steepness, etc.) than p=1/2; I will not know until I have a chance to model it.
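For anyone who wants to experiment with other exponents, here is a hedged sketch of the same derivation with a general p. Repeating the steps used for sqrt(n) with R = R0 * A * B * n^p gives dP/dn = k/(n^2) * (C – (1 – p) * R0 * A * B * n^p), so the optimum would sit at n = (C/((1 – p) * R0 * A * B))^(1/p). This closed form is an extension worked out for this example, not something that has been modeled in the thread, and `r_eff` is shorthand for R0 * A * B.

```python
import math


def optimal_n(p, cost, r_eff):
    """Payout-maximizing n for weighting by n^p (ignoring any cap).

    n* = (C / ((1 - p) * R_eff))^(1/p), with R_eff = R0 * A * B.
    For p = 1 (linear) there is no finite optimum, so infinity is returned.
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in (0, 1]")
    if p == 1.0:
        return math.inf
    return (cost / ((1.0 - p) * r_eff)) ** (1.0 / p)


for p in (0.5, 2 / 3, 0.8, 1.0):
    print(f"p={p:.3f}: n* = {optimal_n(p, cost=40.0, r_eff=40.0):.2f}")
```

Setting p = 0.5 recovers the n = 4 * (C/(R0 * A * B))^2 result, and p = 1 reproduces the "push to the cap" behavior of linear weighting.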

Thank-you for the details! I think I’m following most of it.

Would you happen to have this in a spreadsheet format where folks like myself can poke around to fully understand it?

Seconded. This whole thread has become PhD level numbers theory.

I’d be glad to share spreadsheet but it won’t let me upload here. Other options?

Otherwise it is super easy to put in a spreadsheet yourself. Something like the following:

|   | A | B | C | D | E | F | G |
|---|---|---|---|---|---|---|---|
| 1 | LINEAR WEIGHTING with CAP | | | | | | |
| 2 | variable | value | comment | | n | R | P(n) |
| 3 | R0 | 40 | current avg reward | | 1 | `=$B$3*$B$5*$B$6*MIN($E3,$B$7)` | `=$B$8/$E3*($F3-$B$4)` |
| 4 | C | 40 | daily infra cost in units of POKT | | 1.1 | `=$B$3*$B$5*$B$6*MIN($E4,$B$7)` | `=$B$8/$E4*($F4-$B$4)` |
| 5 | A | 0.2 | DAO parameter to neutralize inflation effects of consolidation | | 1.2 | etc | etc |
| 6 | B | 5 | group-behavior inflationary system response to fewer nodes | | 1.3 | etc | etc |
| 7 | m | 5 | max # of 15k units that can be staked to a node | | 1.4 | etc | etc |
| 8 | k | 100 | arbitrary number of 15k quanta to be deployed; makes no difference | | 1.5 | etc | etc |
| 9 | | | | | 1.6 | etc | etc |
| 10 | P(n) | (output) | total pay a node runner receives in POKT across all nodes after selling off enough POKT to cover infra costs | | 1.7 | etc | etc |
| 11 | | | | | 1.8 | etc | etc |
| 12 | | | | | 1.9 | etc | etc |
| 13 | NOTE this output ignores any binning/quantizing effects | | | | 2 | etc | etc |
| 14 | | | | | 2.1 | etc | etc |

The above would be for linear capped weighting. To do sqrt weighting instead, cell F3 would become `=$B$3*$B$5*$B$6*SQRT($E3)`, and so on down the column.
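For those who would rather poke at this outside a spreadsheet, here is a rough Python equivalent of the sheet above. It reuses the same illustrative inputs (cells B3 to B8) and fills columns E, F and G for n = 1.0 to 2.1; the function and variable names are made up for this sketch.

```python
import math

# Inputs, matching cells B3..B8 of the sheet (illustrative values only).
R0, C, A, B, M, K = 40.0, 40.0, 0.2, 5.0, 5.0, 100.0


def row(n, weighting="linear"):
    """Return (n, R, P) as in columns E, F and G of the sheet."""
    weight = min(n, M) if weighting == "linear" else math.sqrt(n)
    reward = R0 * A * B * weight      # column F
    payout = K / n * (reward - C)     # column G
    return n, reward, payout


# Column E: n = 1.0, 1.1, ..., 2.1 (sheet rows 3 to 14).
ns = [round(1.0 + 0.1 * i, 1) for i in range(12)]
linear_rows = [row(n) for n in ns]
sqrt_rows = [row(n, "sqrt") for n in ns]

print(" n    R(lin)   P(lin)   R(sqrt)  P(sqrt)")
for (n, r_lin, p_lin), (_, r_sq, p_sq) in zip(linear_rows, sqrt_rows):
    print(f"{n:3.1f} {r_lin:8.2f} {p_lin:8.1f} {r_sq:8.2f} {p_sq:8.1f}")
```

Like the sheet, this ignores any binning/quantizing effects: n is treated as a continuous value.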

I was redirected to skynet…I added the file there

https://skynetfree.net/JACS6VzGyvJemAeePhHqR8zMPole1_AaxSQr2fhJ_vJttQ

While I will certainly defer the maths to the PhD gigabrains, I’d add to the logic:

- Working on an optimization curve raises the issue of forward assumptions about the optimal POKT to place on each node. I’d bring up the issue of unstaking/restaking and the associated 21 days. If the optimal number of POKT to stake is continuously changing, it is hard to respond quickly to these changing parameters with the 21-day unstake as is. It would lead to 2nd- and 3rd-order forward guesses about future pricing, and to unhappy stakers who have to unstake if they guess wrong.

- I have less of an issue about spinning up new nodes. One of the fastest periods of growth was in the early part of the year, when prices were up to 20x current prices. $45k nodes seemed not to be a deterrent to spinning up new nodes, and there are multiple pool services that afford an entry into POKT for an investor of any budget.

- I do not see stake weighting or increased POKT per node as particularly unfair to smaller stakers. One of the reasons we got to the current situation is that when POKT was offering rewards of 180 POKT/day @ $3, no one cared about supply-side optimization of infrastructure. People were happy to pay $300/node/month with higher prices. Therefore, in the higher price environment that this proposal should bring, although larger stakers (75k POKT) will indeed benefit slightly more from reduced infra fees overall, smaller stakers are not unduly encumbered by a slightly higher transfer cost. It’s akin to when I transfer $100 of USDT to USDC on Binance: I pay a higher percentage fee than if I were a VIP customer transferring $1,000,000.

- Understood about the optimal operating point changing over time, but I don’t think it is a big concern because (a) node runners are at least back squarely in profitable territory, and optimizing over a 30% vs 35% ROI is vastly different from treading the line of becoming unprofitable, and (b) once I start consolidating, that part of the curve is not very steep, meaning that if the “sweet spot” sits at 60k POKT/node, I get pretty close to the same bang for the buck if I stake 45k or 75k or 90k. So I don’t see people fretting or trying to change staked amounts every month, just around big macro trends.

- Point taken about pooling services and people shelling out $45k for a node. However, I am looking to the future health of POKT. I would note that (a) there was a huge amount of liquidity in 2021 from cashing in on 20x gains in other tokens that provided a lot of this seed money. When POKT next faces the need to grow, and to grow fast, in response to app demand, it is very likely to be in a much less “easy money” environment. (b) Consolidating the node architecture of POKT into the hands of a few big service providers run by a few big pools is antithetical to the decentralized, diversified node deployment POKT ultimately needs.

- Increasing POKT/node is not unfair. My original point was that if you are going to embed an equation into the code that de facto forces everyone’s hand to stake 75k, then cut the obfuscation and simply vote to raise the min stake to 75k. At least then the smaller players who can’t consolidate will get out of the way immediately instead of enduring a month of daily POKT dropping to 10 or so (due to ValidatorStakeWeightMultiplier being set to an unrealistically high value) before quitting due to gross unprofitability.

Here’s the bottom line (hopefully non-mathematical) of what I am trying to communicate:

In the current proposal there is all gain and no pain to consolidating as much as possible, no matter the circumstance. No amount of parameter tweaking can induce someone to stake less than the maximum they can. The node runner paying $5 or $6 / day to a service provider and the tech-savvy runner who owns, maintains and optimizes their own equipment and pays only 60 cents / day in electricity are both alike pushed toward consolidating. With nonlinear weighting (that is, when the reward multiplier is less than the amount consolidated), the gain achieved by reducing infra costs strikes a natural balance with the pain of the daily rewards not being as high as they would be without consolidating. So now a node runner with 60k POKT who uses a service provider will naturally consolidate onto a single node. The 160 POKT he used to be awarded daily drops to 80 after consolidating (due to the sqrt weighting), but he is happy because he only has to sell off half instead of all of his award to pay the daily service provider fee, and he remains squarely profitable. The tech runner with 60k POKT, on the other hand, keeps all 4 of his machines running, pulling in 160 POKT per day, since he only has to sell off 20 POKT per day to cover his electricity cost.
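The two node runners in this example can be put into a few lines of Python. This is a sketch under stated assumptions: a base reward of 40 POKT/day per 15k node, sqrt weighting with A * B = 1 (which is what the 160 to 80 drop implies), a service-provider cost of 40 POKT/day per node (so the whole award is sold off before consolidating), and an electricity cost of 5 POKT/day per node (20 POKT/day across 4 machines). The function name is hypothetical.

```python
R0 = 40.0  # assumed base reward, POKT/day for a node staked at 15k


def net_daily(total_units, units_per_node, cost_per_node, exponent=0.5):
    """Net POKT/day after infra costs for a runner holding `total_units` x 15k POKT.

    Each node is staked with `units_per_node` x 15k and earns R0 * units^exponent.
    """
    nodes = total_units / units_per_node
    reward_per_node = R0 * units_per_node ** exponent
    return nodes * (reward_per_node - cost_per_node)


# Runner using a service provider (assumed 40 POKT/day per node).
provider_split = net_daily(4, 1, cost_per_node=40.0)          # four 15k nodes
provider_consolidated = net_daily(4, 4, cost_per_node=40.0)   # one 60k node

# Tech-savvy runner on own hardware (assumed 5 POKT/day per node).
tech_split = net_daily(4, 1, cost_per_node=5.0)
tech_consolidated = net_daily(4, 4, cost_per_node=5.0)

print("provider: split", provider_split, "consolidated", provider_consolidated)
print("tech:     split", tech_split, "consolidated", tech_consolidated)
```

Under these assumptions the provider-hosted runner does better consolidated, while the self-hosted runner does better keeping four separate nodes. Passing `exponent=1.0` shows that with linear weighting both of them do better consolidated.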

I am not advocating to abandon PIP-22. I am advocating to add a DAO-controlled parameter ValidatorStakeFloorMultiplierExponent with valid range (0,1] and initial value 0.5 as follows:

(flooredStake/ValidatorStakeFloorMultiplier)^ValidatorStakeFloorMultiplierExponent

Want to start out with linear weighting? Simply set the value of ValidatorStakeFloorMultiplierExponent to 1. At least the knob is there for when the DAO needs it, if and when there is a need to turn on lots of nodes as quickly as possible or to encourage current consolidators to separate back out into smaller-staked nodes.
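A minimal sketch of the proposed weighting term, to show what the exponent knob does. The 15,000 default for ValidatorStakeFloorMultiplier is an assumption based on the 15k quanta discussed in this thread, and the Python names are illustrative only.

```python
def stake_weight(floored_stake, floor_multiplier=15_000, exponent=0.5):
    """(flooredStake / ValidatorStakeFloorMultiplier) ^ ValidatorStakeFloorMultiplierExponent.

    floor_multiplier=15_000 is an assumed value; exponent must lie in (0, 1].
    """
    if not 0.0 < exponent <= 1.0:
        raise ValueError("exponent must be in (0, 1]")
    return (floored_stake / floor_multiplier) ** exponent


for stake in (15_000, 30_000, 60_000, 75_000):
    linear = stake_weight(stake, exponent=1.0)
    sqrt_w = stake_weight(stake, exponent=0.5)
    print(f"{stake:>6} POKT: linear weight {linear:.2f}, sqrt weight {sqrt_w:.2f}")
```

With the exponent at 1 the weight is just the number of 15k bins, so a value of 1 behaves exactly like the linear proposal.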

On a completely separate note, I strongly recommend to set the day-one value of ValidatorStakeWeightMultiplier to 1, not to 5 or 4.5 or whatever is being proposed. Then tweak this parameter over the first month in RESPONSE to the unbonding that takes place, not in ANTICIPATION of the unbonding. This provides a more seamless transition period than setting it immediately to anticipated final value.

Why? If you get this parameter wrong on the low side (day-one value of 1), the worst that can happen is a momentary spike in rewards as you play catch-up to unbonding. So let’s say that on day one half of all nodes unbond as they scramble to implement consolidation, and this increases over the next few days until 80% of all current nodes have unbonded. On the first day following, avg rewards/node jumps to 80, so the DAO adjusts ValidatorStakeWeightMultiplier to 2.0. A few days of 80 or 60 or 55 POKT/node is not enough to cause a big inflationary event that puts downward price pressure on POKT.

On the other hand, setting day-one value to 5 or 4.6 or whatever in anticipation of how many nodes will ultimately unbond could trigger small node runners to unnecessarily quit their node especially if unbonding by the bigger players takes longer than expected… as they see their reward immediately plunge to sub 10 POKT/day and induce them to give up their node for good without sticking around for rewards to recover back toward 40/day.
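The arithmetic behind both scenarios can be sketched as follows. It assumes total relay volume stays constant and is spread evenly over the nodes that remain staked at 15k, so the average reward scales as 1 / (1 – unbonded fraction) and is then divided by ValidatorStakeWeightMultiplier. That is a simplification for illustration, not a model of the real session-selection mechanics.

```python
def avg_reward_per_node(unbonded_fraction, weight_multiplier=1.0, r0=40.0):
    """Average POKT/day for a node still staked at 15k.

    Assumes constant total relays shared evenly among the remaining nodes.
    """
    if not 0.0 <= unbonded_fraction < 1.0:
        raise ValueError("unbonded_fraction must be in [0, 1)")
    return r0 / (1.0 - unbonded_fraction) / weight_multiplier


# Day-one multiplier of 1: half the nodes unbond, rewards spike to about 80.
print(avg_reward_per_node(0.5, weight_multiplier=1.0))
# The DAO reacts by setting the multiplier to 2: back to about 40.
print(avg_reward_per_node(0.5, weight_multiplier=2.0))
# Day-one multiplier of 5 before anyone has unbonded: about 8 POKT/day.
print(avg_reward_per_node(0.0, weight_multiplier=5.0))
```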

Besides, I am not sure this parameter is even needed. Any network effects of unbonding are already fed into the feedback loop of RelaysToTokensMultiplier, which will adjust to the new reality of PIP-22 to keep total rewards in line. The only value of this parameter is really to try to smooth out the effects of a massive unbonding event during the first month, since the already existing feedback loop operates on a month-or-longer time scale.

Thanks @Andy-Liquify for kickstarting this thread, and thanks to everyone else for their input on this proposal, including @msa6867. It’s really impressive, although I need to spend more time reviewing the math before giving my firm view either way. However, I’m largely in favour of weighted staking as the most viable approach to increasing network security, and I’m excited to see this be implemented.

In the meantime, I would like to challenge one small, but consequential assertion:

Pocket is massively overprovisioned in terms of its node capacity, hence the push for lowering the cost of the network via the light client work, etc. My understanding is that about 1,000 nodes could easily handle 1-2B relays per day. As relays start to ramp up further, node runners may have to spin up more full nodes per blockchain that they service, but they shouldn’t need to scale up any additional Pocket nodes to do so. And the economics shouldn’t incentivise this either. So this point is irrelevant to this conversation thread IMO, at least from a systems perspective, as opposed to a socio-political question around the cost to run a Pocket node in the future, and who gets to access yield from such.

Thanks @Dermot. There’s something in the nuance of what you are saying here that seems important but I’m not grasping yet. Could you explain the difference between “spin up more full nodes per blockchain” and “scale up additional Pocket nodes”? Thanks!

Hi all,

I’ve just pushed an update to the proposal as it’s being co-opted by the core team.

Kudos to @Andy-Liquify for a stroke of genius that looks to be making stake-weighted servicing an easy solution.

Kudos also to @msa6867 for the extremely insightful analysis (are you an economist by chance?). I’ve added the exponent parameter at your recommendation. I hope to see your input on the companion proposal that will be needed to specify the values of these new parameters.

@msa6867 As each Pocket node is merely a front to increase the chance of selection to do work, spinning up new pocket nodes is not the blocker when relays start to ramp up. Instead, it is ensuring that your independent blockchain nodes are not getting overloaded (eg spin up more), as well as other more technical points about node running that are outside of my expertise.

For example, my understanding is that 100s of pocket nodes - and potentially much more - send work to only 1 node for each blockchain they support, eg 1 for ETH, Harmony, Polygon, Gnosis, etc. (My numbers are definitely off here).

While this isn’t the most urgent priority, maybe the likes of @luyzdeleon @Andrew @BenVan or @Andy-Liquify could provide some colour on the current ratio of full blockchain nodes to pocket nodes? There have been lots of comments across the forum about the cost to run Pocket Network from a systems perspective, but no specific data points on how the incentives to scale nodes horizontally create unnecessary waste without increasing - at least on anything close to a proportional basis - Pocket’s network’s overall capacity to service relays.

I’m a physicist and former aerospace engineer, but economics is where I mainly apply that training these days. Is the companion proposal in the works already, or does that come later after this passes? While my preferred exponent value is 0.5, I think it may be in the best interest of the community to set it to 1 on day one (keep it at linear weighting) since that is the most understood behavior. I would like to see nonlinear weighting get greater socialization and buy-in from the community before being executed.

I’m working my way through the 20/6/22 updates and will reply here with comments as I go:

Not that it matters too much (since how it actually gets coded is what matters), but this formula is still wrong: it still has one too many terms of ValidatorStakeFloorMultiplier, and the exponent is in the wrong place (it should not get applied to ValidatorStakeWeightMultiplier). I suggest that what is meant is:

reward = NUM_RELAYS * RelaysToTokensMultiplier * (FLOOR/ValidatorStakeFloorMultiplier)^(ValidatorStakeFloorMultiplierExponent) / (ValidatorStakeWeightMultiplier)
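In code form, the suggested formula might look like the sketch below. How FLOOR is computed is an assumption here: the stake is rounded down to a multiple of ValidatorStakeFloorMultiplier and capped at ServicerStakeWeightCeiling. The default values (15,000 and 75,000) and the example relay and multiplier numbers are placeholders, not proposed settings.

```python
def servicer_reward(num_relays, relays_to_tokens_multiplier, stake,
                    floor_multiplier=15_000, weight_ceiling=75_000,
                    exponent=1.0, weight_multiplier=1.0):
    """reward = NUM_RELAYS * RelaysToTokensMultiplier
                * (FLOOR / ValidatorStakeFloorMultiplier) ^ Exponent
                / ValidatorStakeWeightMultiplier

    FLOOR is assumed to be the stake rounded down to a multiple of
    floor_multiplier and capped at weight_ceiling.
    """
    floored = min(stake - stake % floor_multiplier, weight_ceiling)
    bins = floored / floor_multiplier
    return (num_relays * relays_to_tokens_multiplier
            * bins ** exponent / weight_multiplier)


# Placeholder inputs: 1000 relays at 0.01 POKT per relay, 64k POKT staked.
print(servicer_reward(1000, 0.01, 64_000, exponent=1.0))  # weight of 4 bins
print(servicer_reward(1000, 0.01, 64_000, exponent=0.5))  # weight of sqrt(4)
```

Note that the exponent is applied only to the FLOOR ratio, and ValidatorStakeWeightMultiplier divides the result afterwards, which is the placement argued for above.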